Submission ID: cef99452

Topology Learning Network (TLNet)

Processed: 24-04-16. Download link: cef99452ccf317dbdaee1a2c6fed84b3-rootsift-upright-2k.json

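This page ranks the submission against all other submissions that use the same number of keypoints, regardless of descriptor size. In the tables, Features is the number of keypoints per image; Matches (raw) and Matches (final) are the match counts before and after outlier filtering and robust estimation; Rep. @ 3 px and MS @ 3 px are the repeatability and matching score at a 3-pixel threshold; and mAA(5°) and mAA(10°) are the mean average accuracy of the estimated poses up to 5° and 10°. Each value is followed by its rank within the category. Full scene names are listed with the match visualizations.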

- Phototourism dataset: Stereo track / Multiview track

- Prague Parks dataset: Stereo track / Multiview track

- Google Urban dataset: Stereo track / Multiview track

Metadata

- Authors: szw

- Keypoint: sift-lowth

- Descriptor: rootsift-upright (128 × float32 = 512 bytes)

- Number of features: 2048

- Summary: upright RootSIFT with 2048 features, nearest-neighbor (NN) matching, TLNet as the outlier filter, and fundamental-matrix estimation with OpenCV's USAC MAGSAC (cv2-usacmagsac-f).

- Paper: N/A

- Website: N/A

- Processing date: 24-04-16
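The first two stages in the summary can be illustrated with a minimal sketch, assuming the standard RootSIFT recipe (L1-normalize each SIFT descriptor, then take element-wise square roots) and plain one-way nearest-neighbor matching by Euclidean distance. One-way NN matching gives exactly one raw match per keypoint, which is consistent with Matches (raw) equaling Features in the tables below. The function names `root_sift` and `nn_match` are hypothetical; the TLNet filter and the MAGSAC stage (presumably OpenCV's `cv2.findFundamentalMat` with the `cv2.USAC_MAGSAC` method) are not reproduced here, and this is not the submission's actual code.

```python
import math
import struct
from typing import List, Sequence, Tuple

# 128 float32 values, as listed in the metadata: 128 * 4 bytes = 512 bytes.
DESCRIPTOR_BYTES = struct.calcsize("<128f")


def root_sift(desc: Sequence[float], eps: float = 1e-7) -> List[float]:
    """RootSIFT: L1-normalize a SIFT descriptor, then take element-wise square roots.

    The result has (approximately) unit L2 norm, so Euclidean distance on
    RootSIFT vectors corresponds to the Hellinger kernel on the original SIFT.
    """
    l1 = sum(abs(v) for v in desc) + eps
    return [math.sqrt(abs(v) / l1) for v in desc]


def nn_match(
    desc_a: Sequence[Sequence[float]], desc_b: Sequence[Sequence[float]]
) -> List[Tuple[int, int, float]]:
    """Hypothetical helper: one-way nearest-neighbor matching by Euclidean distance.

    Every descriptor in desc_a gets exactly one match in desc_b, so the raw
    match count equals the number of keypoints in the first image.
    Returns (index_in_a, index_in_b, distance) triples.
    """
    if not desc_b:
        return []
    matches = []
    for i, da in enumerate(desc_a):
        j, d = min(
            ((j, math.dist(da, db)) for j, db in enumerate(desc_b)),
            key=lambda pair: pair[1],
        )
        matches.append((i, j, d))
    return matches


# Toy 4-dimensional "descriptors" (real SIFT descriptors have 128 values).
a = [root_sift(d) for d in ([1.0, 3.0, 0.0, 0.0], [0.0, 0.0, 2.0, 2.0])]
b = [root_sift(d) for d in ([0.0, 0.0, 1.0, 1.2], [2.0, 5.0, 0.0, 0.0])]
print(DESCRIPTOR_BYTES)
print([(i, j) for i, j, _ in nn_match(a, b)])
```

A one-way matcher keeps every keypoint, which is why an explicit outlier filter (here TLNet) and a robust estimator are needed before the final match count drops to the low hundreds.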

Phototourism dataset / Stereo track

mAA at 10 degrees: 0.47625 (±0.00000 over 1 run(s) / ±0.16073 over 9 scenes)

Rank (per category): 50 (of 87)
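This page does not define mAA. The sketch below assumes the definition used in the Image Matching Challenge benchmark as we understand it: each image pair has a pose error in degrees (the larger of the rotation and translation-direction angular errors), accuracy is the fraction of pairs below each integer threshold from 1° up to the maximum, and mAA is the mean of those accuracies. The function name, the threshold grid, and the strict `<` comparison are assumptions, not details stated here.

```python
from typing import Sequence


def mean_average_accuracy(pose_errors_deg: Sequence[float], max_threshold_deg: int = 10) -> float:
    """Hypothetical helper: mAA up to `max_threshold_deg` degrees.

    Assumes each entry is one image pair's pose error in degrees, taken as
    max(rotation error, translation-direction error). Accuracy is the
    fraction of pairs with error strictly below each integer threshold
    1..max_threshold_deg; mAA is the mean of those accuracies.
    """
    if not pose_errors_deg:
        raise ValueError("need at least one pose error")
    n = len(pose_errors_deg)
    accuracies = [
        sum(1 for e in pose_errors_deg if e < t) / n
        for t in range(1, max_threshold_deg + 1)
    ]
    return sum(accuracies) / len(accuracies)


errors = [0.5, 3.5, 20.0]  # made-up pose errors for three image pairs
print(round(mean_average_accuracy(errors, 10), 5))  # mAA(10°) → 0.56667
print(round(mean_average_accuracy(errors, 5), 5))   # mAA(5°)  → 0.46667
```

Because accuracy is averaged over a range of thresholds, mAA(10°) is always at least mAA(5°) for the same pairs, which holds for every row in the tables.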

| Scene | Features | Matches (raw) | Matches (final) | Rep. @ 3 px | MS @ 3 px | mAA(5°) | mAA(10°) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| BM | 2048.0 | 2048.0 | 219.3 | 0.458 (rank 49/87) | 0.877 (rank 61/87) | 0.10000 ±0.00000 (rank 75/87) | 0.23333 ±0.00000 (rank 66/87) |
| FCS | 2048.0 | 2048.0 | 156.9 | 0.375 (rank 57/87) | 0.701 (rank 82/87) | 0.39469 ±0.00000 (rank 71/87) | 0.50531 ±0.00000 (rank 71/87) |
| LMS | 2048.0 | 2048.0 | 181.1 | 0.469 (rank 12/87) | 0.739 (rank 2/87) | 0.61128 ±0.00000 (rank 21/87) | 0.72308 ±0.00000 (rank 23/87) |
| LB | 2048.0 | 2048.0 | 94.9 | 0.443 (rank 16/87) | 0.823 (rank 1/87) | 0.39167 ±0.00000 (rank 75/87) | 0.52500 ±0.00000 (rank 49/87) |
| MC | 2048.0 | 2048.0 | 102.5 | 0.429 (rank 27/87) | 0.925 (rank 15/87) | 0.63077 ±0.00000 (rank 75/87) | 0.70000 ±0.00000 (rank 1/87) |
| MR | 2045.9 | 2046.4 | 130.6 | 0.459 (rank 9/87) | 0.852 (rank 67/87) | 0.24906 ±0.00000 (rank 42/87) | 0.40000 ±0.00000 (rank 14/87) |
| PSM | 2048.0 | 2048.0 | 163.1 | 0.381 (rank 3/87) | 0.470 (rank 70/87) | 0.19412 ±0.00000 (rank 40/87) | 0.27353 ±0.00000 (rank 48/87) |
| SF | 2048.0 | 2048.0 | 142.3 | 0.395 (rank 11/87) | 0.770 (rank 61/87) | 0.40707 ±0.00000 (rank 57/87) | 0.54058 ±0.00000 (rank 58/87) |
| SPC | 2048.0 | 2048.0 | 111.4 | 0.433 (rank 19/87) | 0.685 (rank 76/87) | 0.25297 ±0.00000 (rank 75/87) | 0.38541 ±0.00000 (rank 76/87) |
| Avg | 2047.8 | 2047.8 | 144.7 | 0.427 (rank 22/87) | 0.760 (rank 67/87) | 0.35907 ±0.00000 (rank 48/87) | 0.47625 ±0.00000 (rank 50/87) |
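The Avg row and the "± over scenes" figure in the headline appear to be the plain mean and the population standard deviation (divisor n, not n - 1) of the per-scene values: recomputing them from the rounded mAA(10°) entries in the table reproduces 0.47625 and approximately 0.1607. A minimal check using only the standard library; the names `PHOTOTOURISM_STEREO_MAA10` and `summarize` are hypothetical.

```python
from statistics import fmean, pstdev
from typing import Dict, Tuple

# Per-scene mAA(10°) values copied from the Phototourism stereo table.
PHOTOTOURISM_STEREO_MAA10: Dict[str, float] = {
    "BM": 0.23333, "FCS": 0.50531, "LMS": 0.72308,
    "LB": 0.52500, "MC": 0.70000, "MR": 0.40000,
    "PSM": 0.27353, "SF": 0.54058, "SPC": 0.38541,
}


def summarize(per_scene: Dict[str, float]) -> Tuple[float, float]:
    """Return (mean, population standard deviation) over scenes."""
    values = list(per_scene.values())
    return fmean(values), pstdev(values)


mean, spread = summarize(PHOTOTOURISM_STEREO_MAA10)
print(f"{mean:.5f} ±{spread:.4f}")  # → 0.47625 ±0.1607
```

Using the sample standard deviation (`statistics.stdev`) instead would give about 0.170, which does not match the reported ±0.16073.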

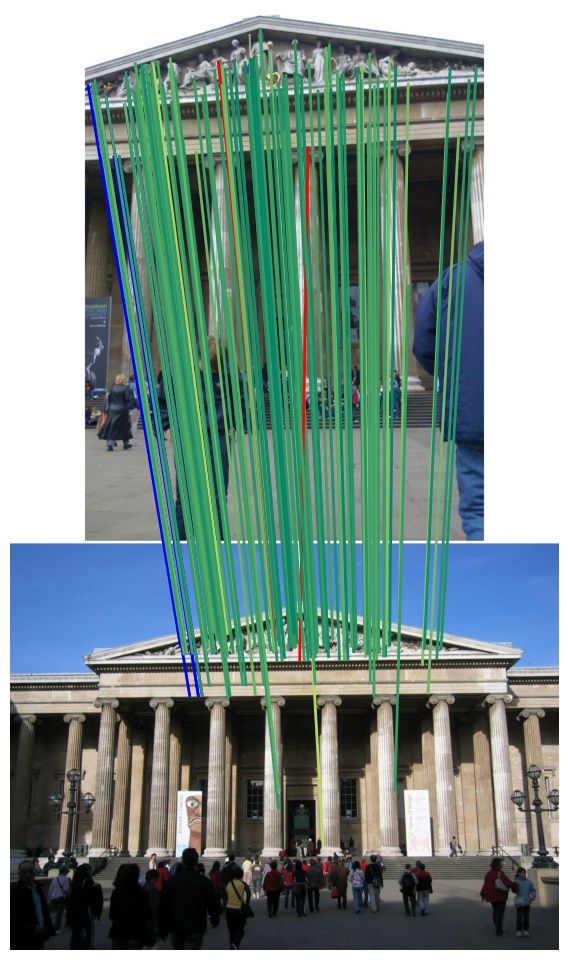

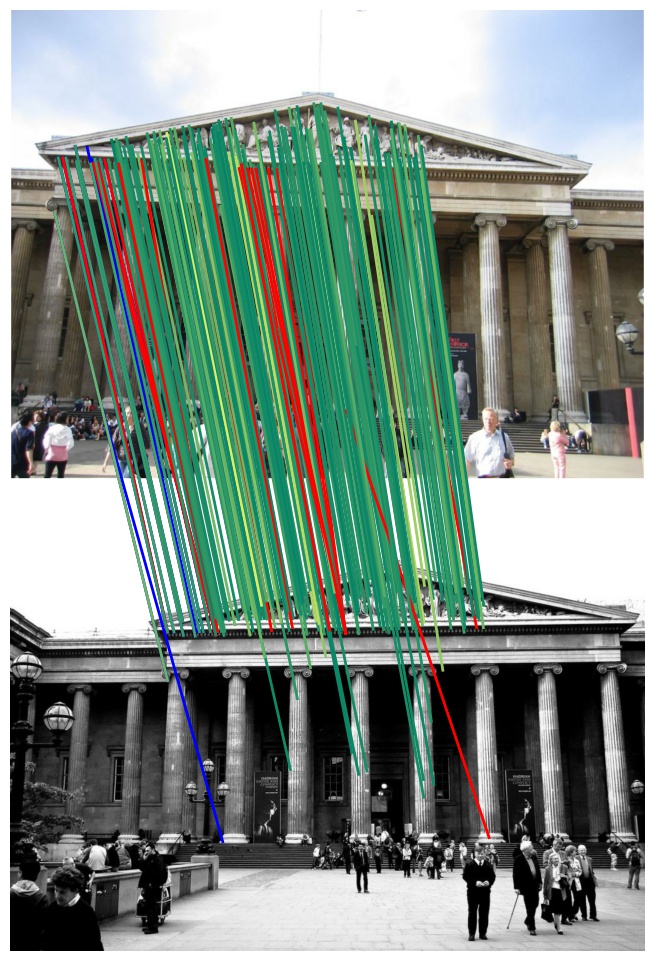

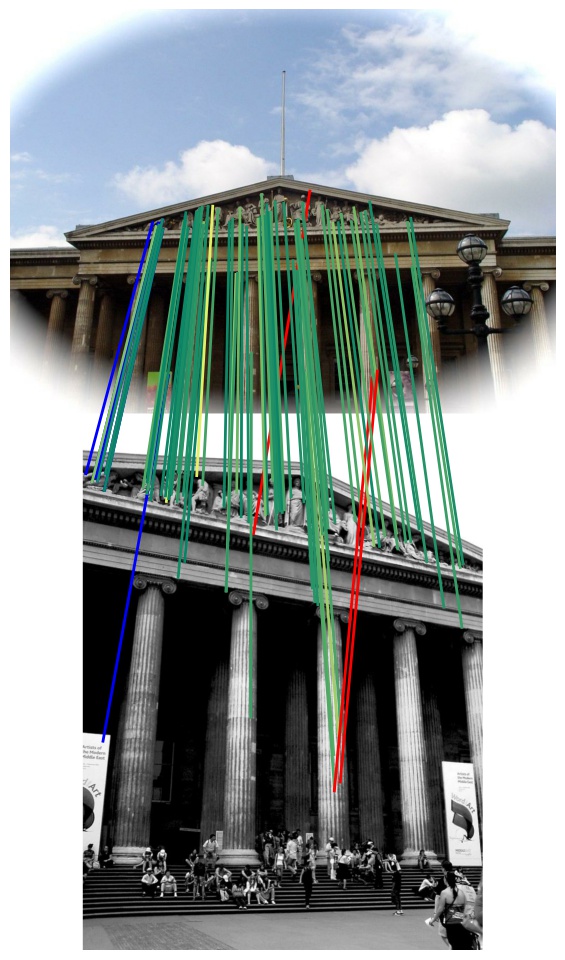

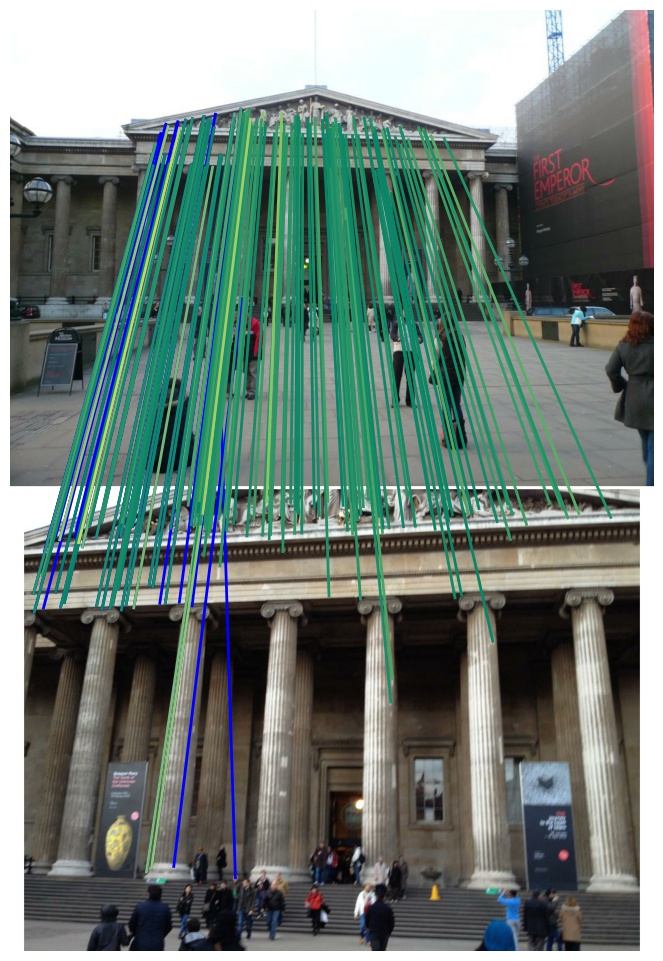

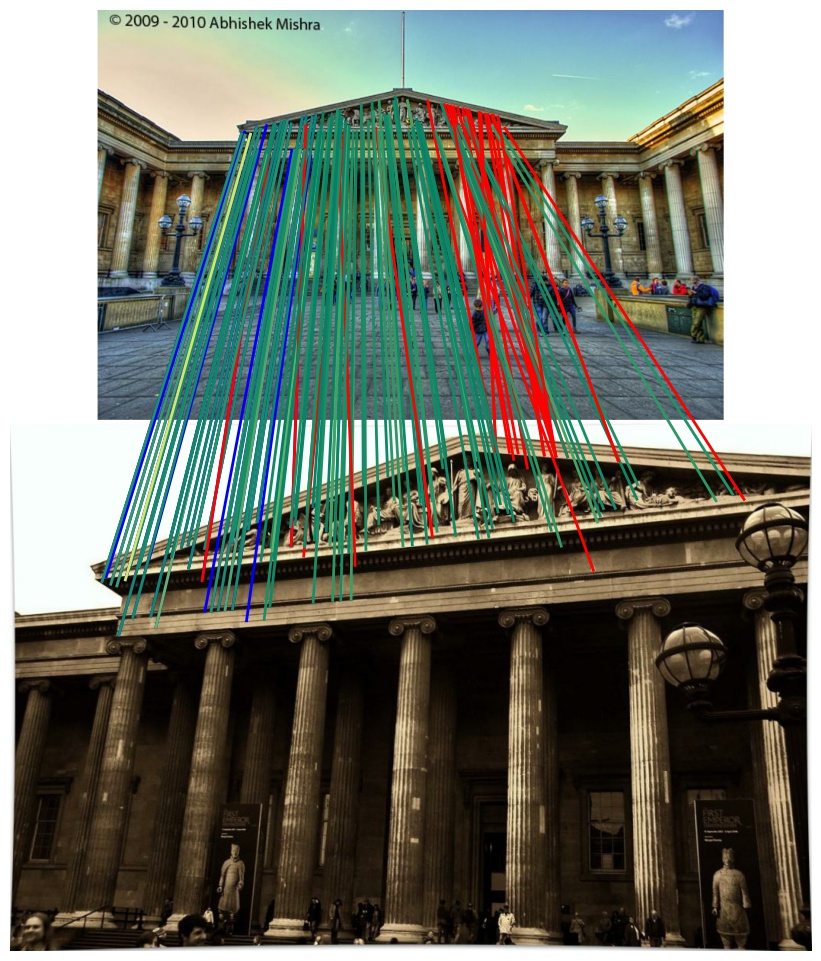

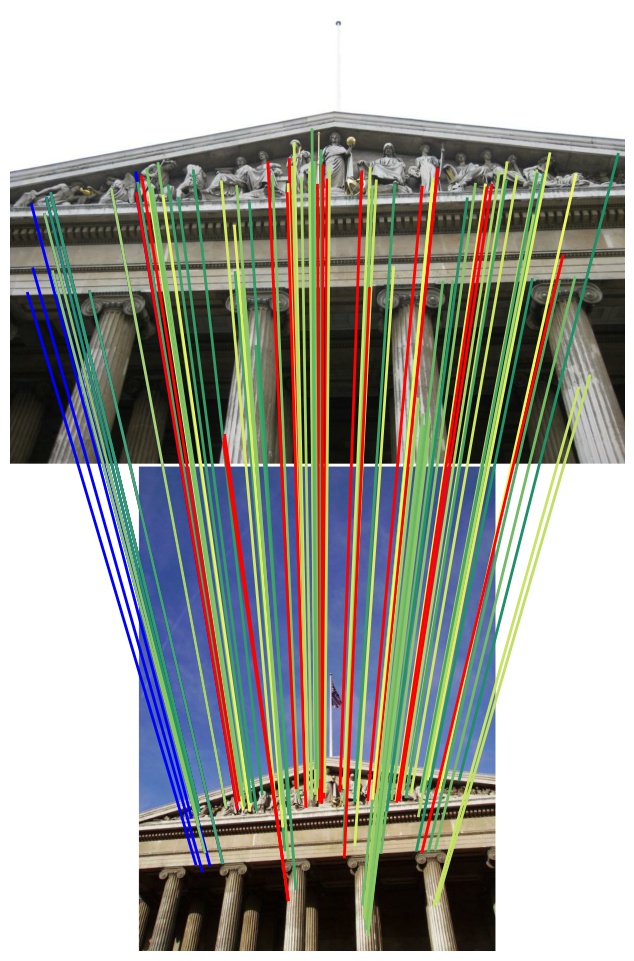

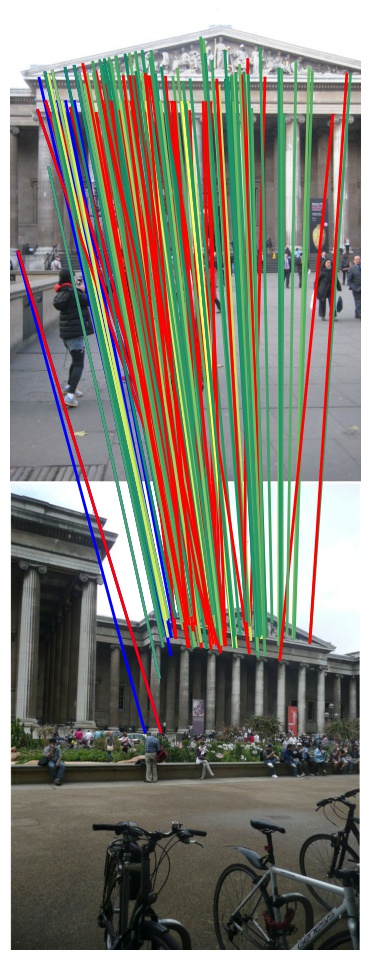

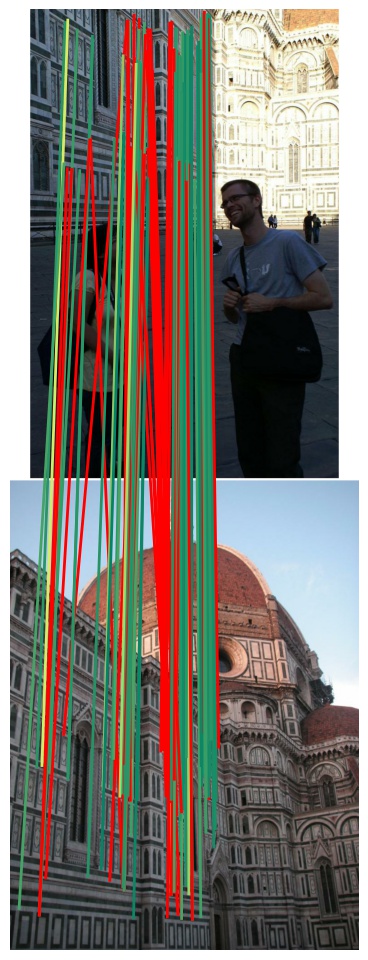

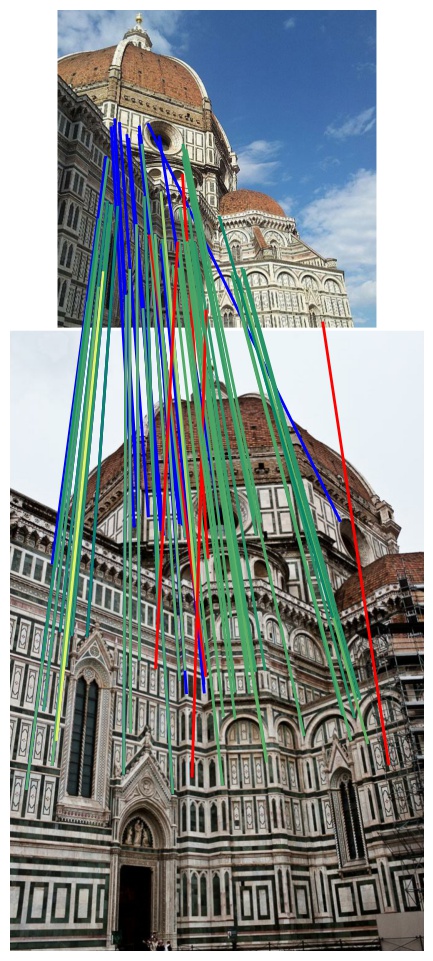

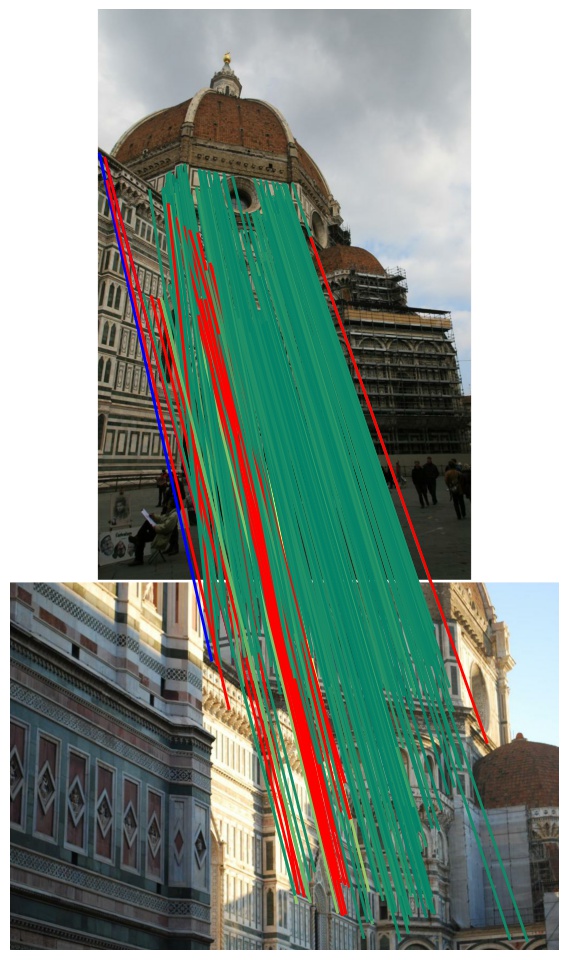

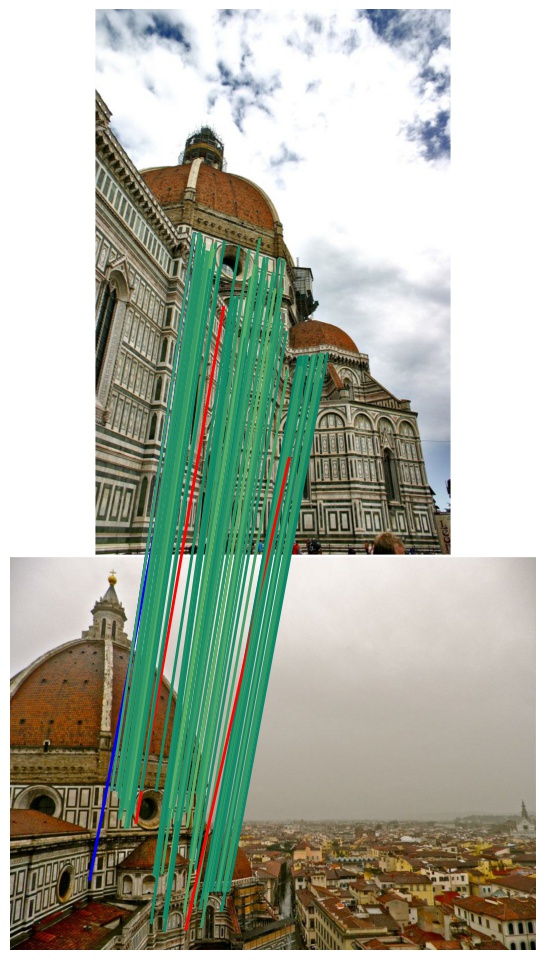

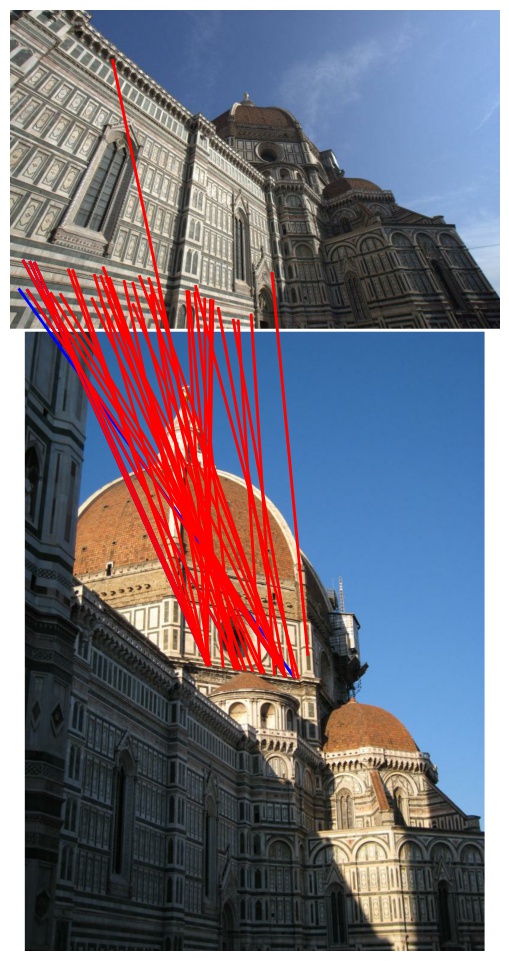

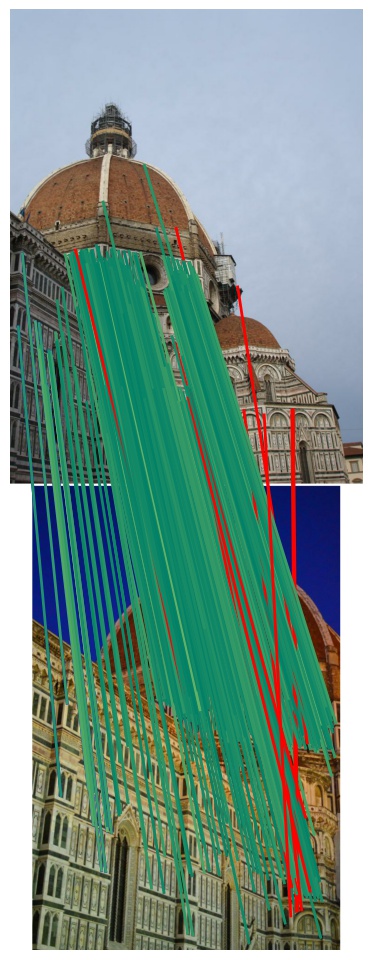

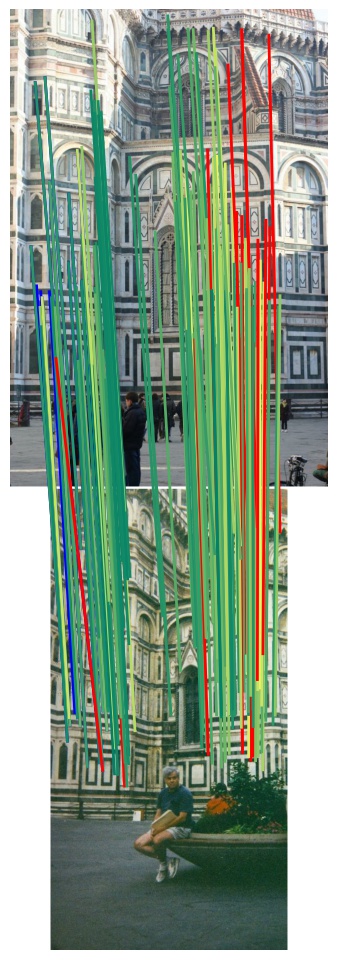

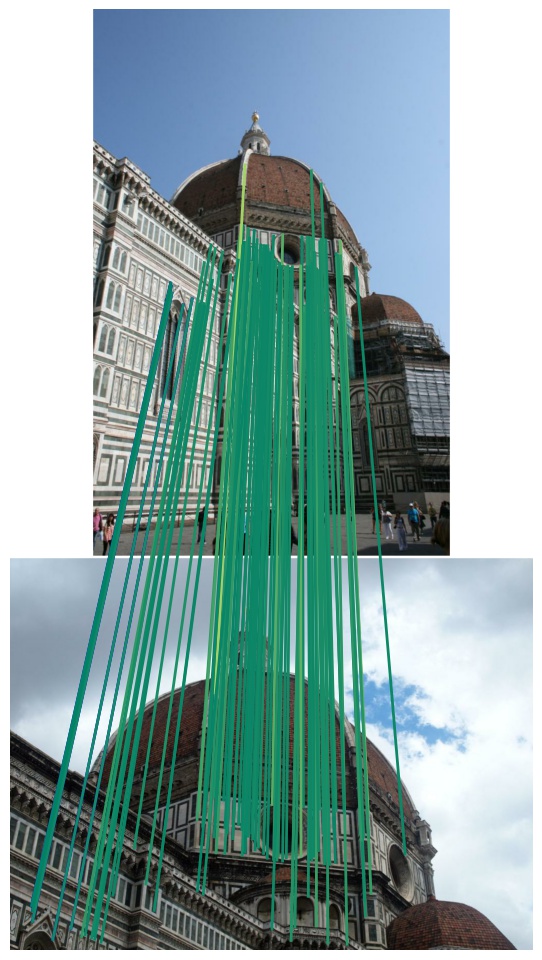

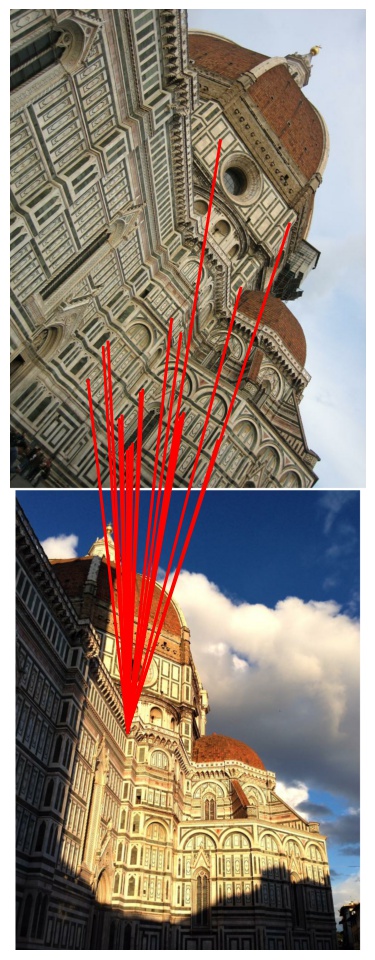

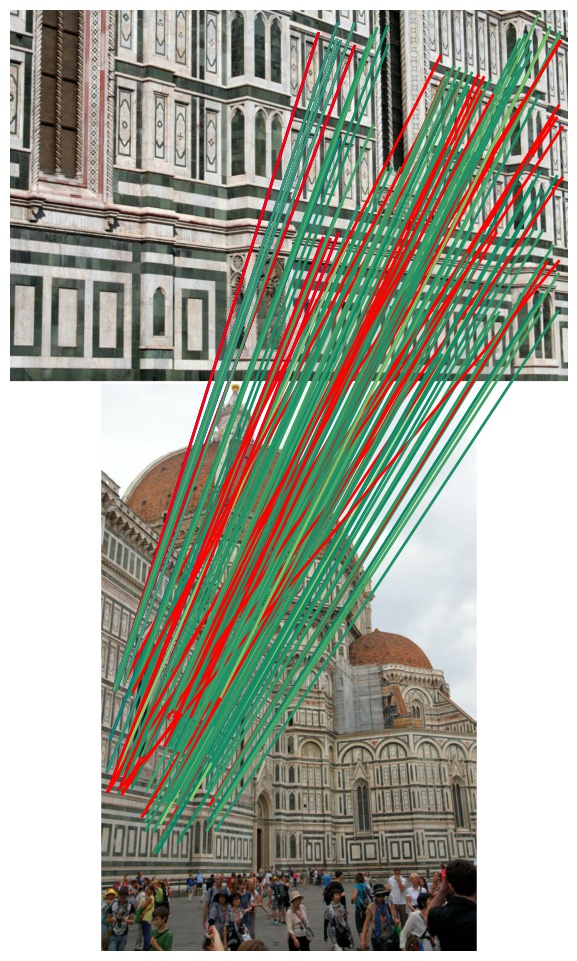

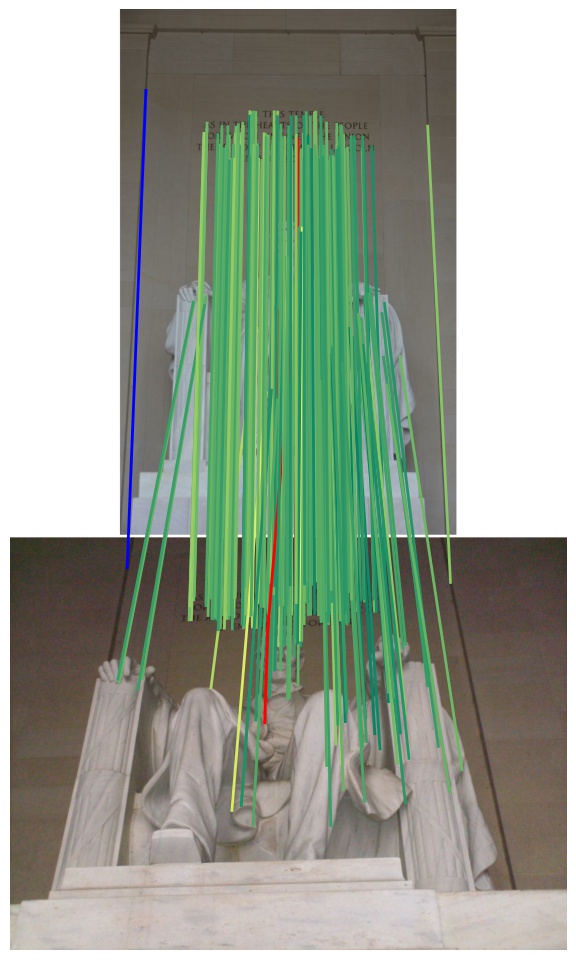

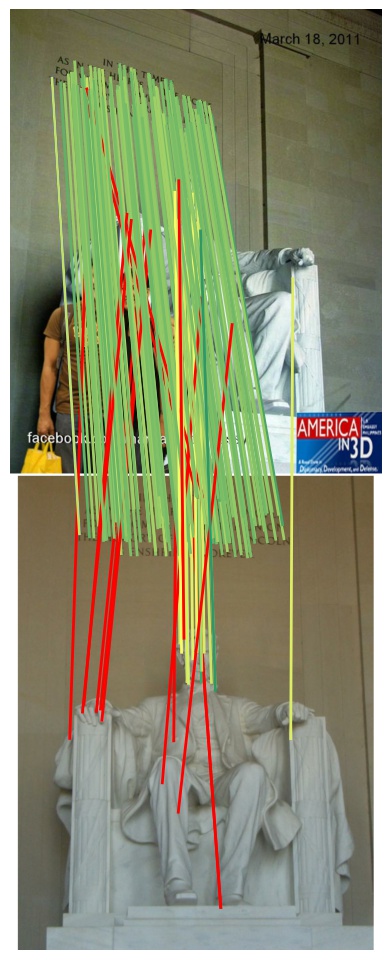

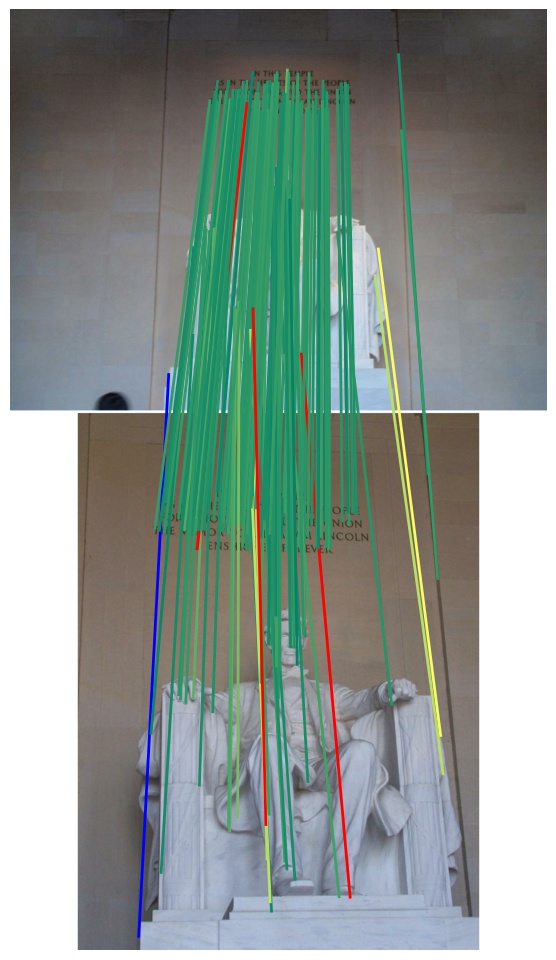

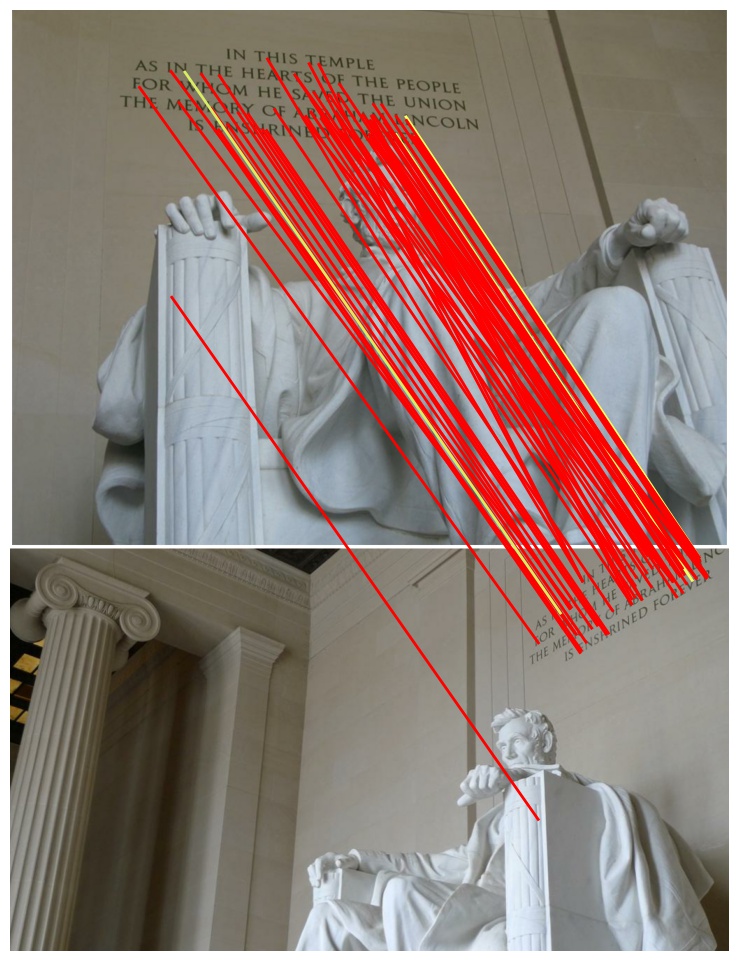

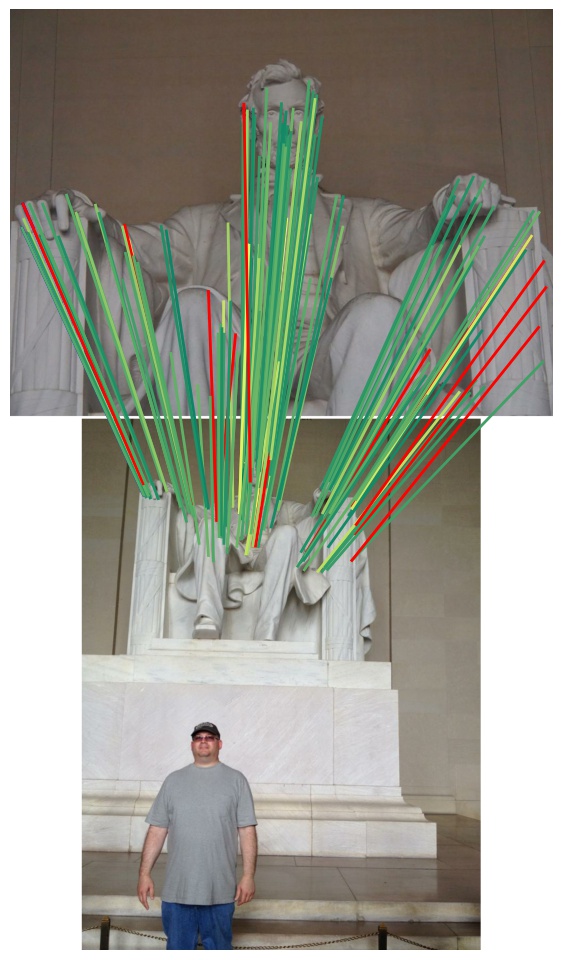

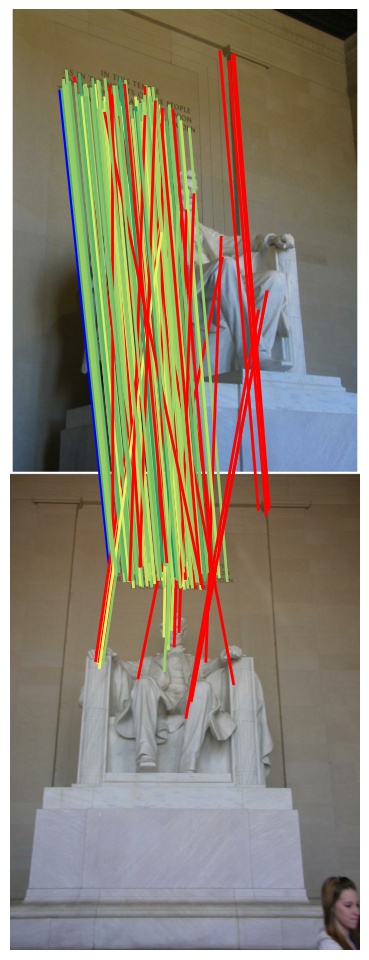

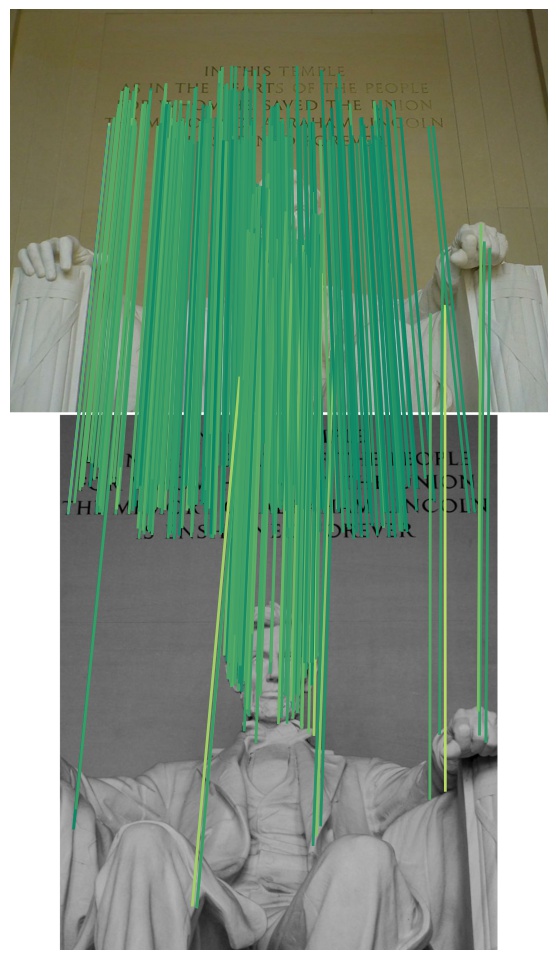

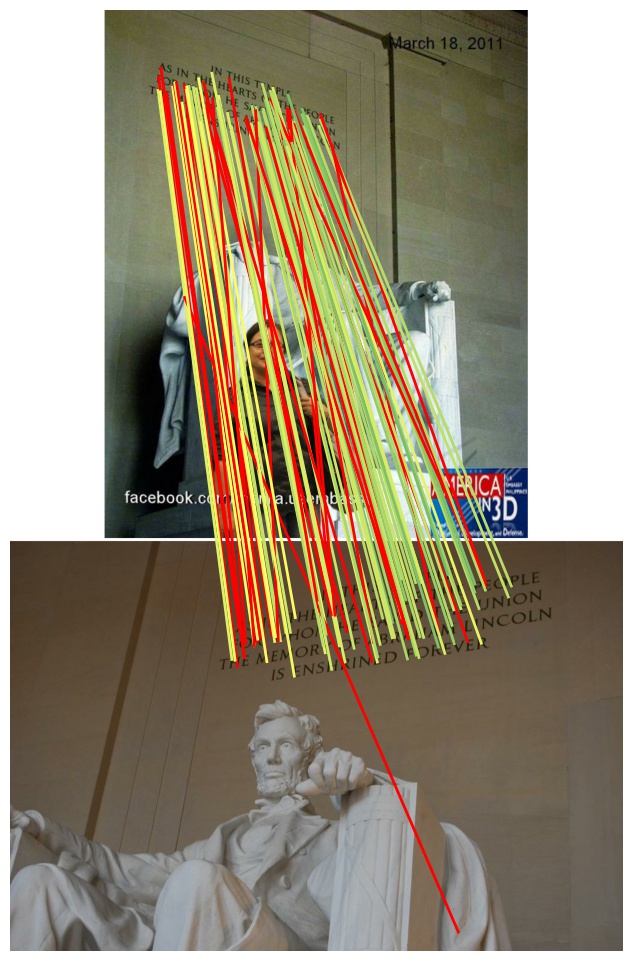

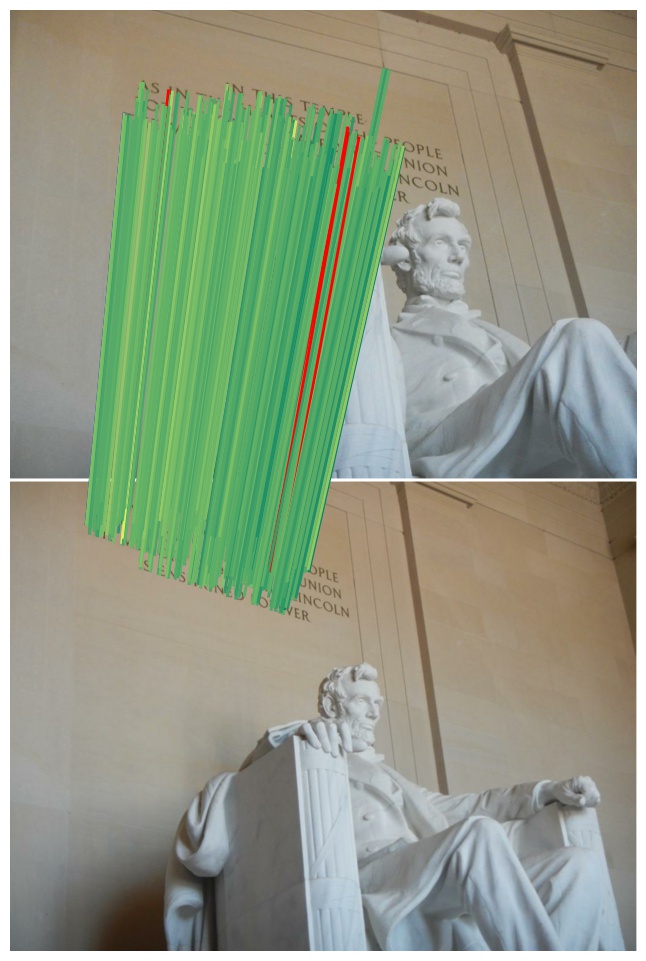

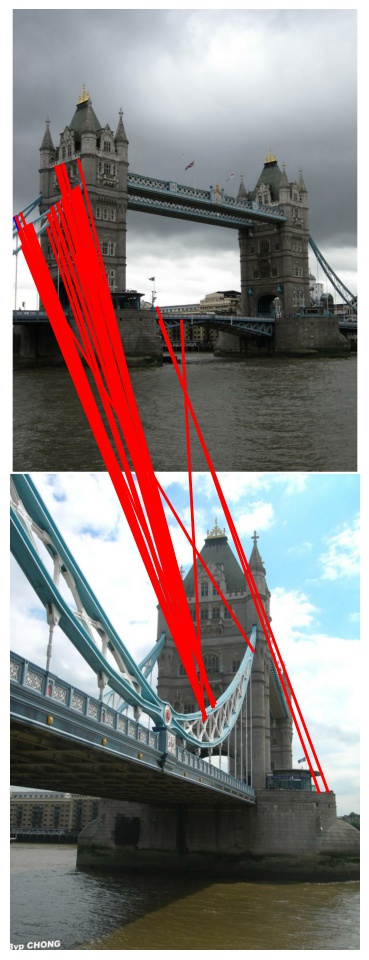

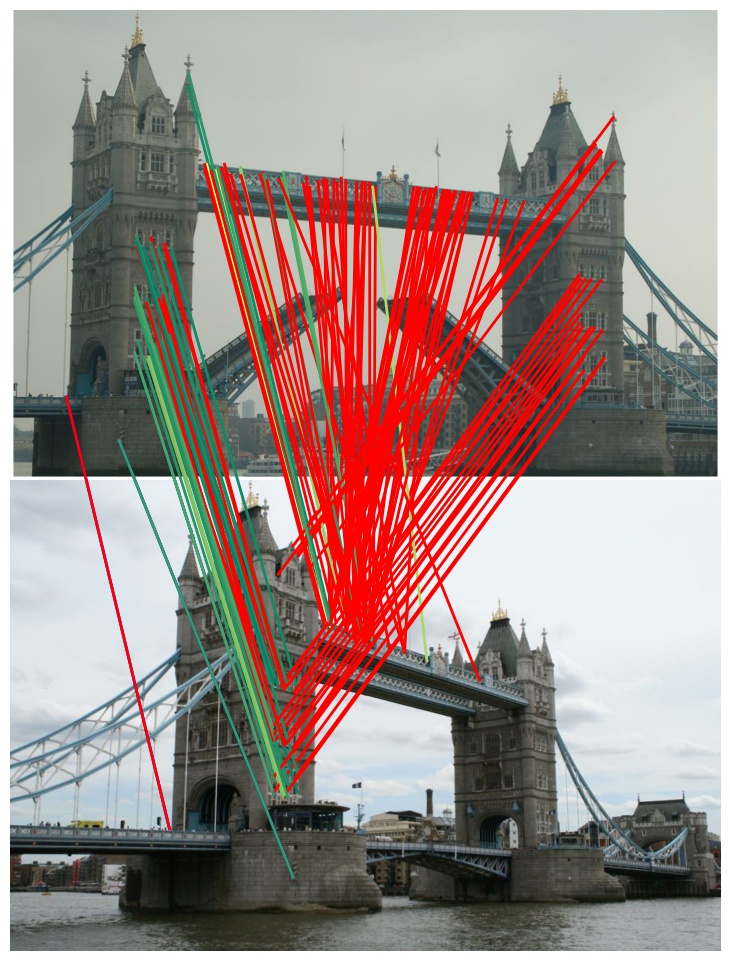

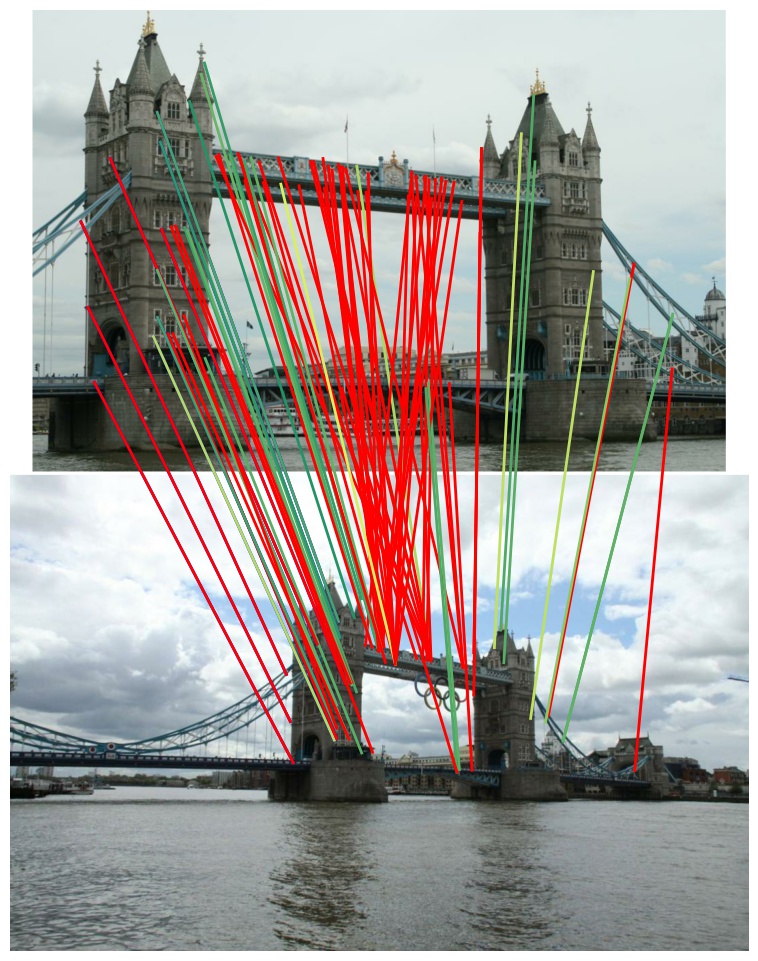

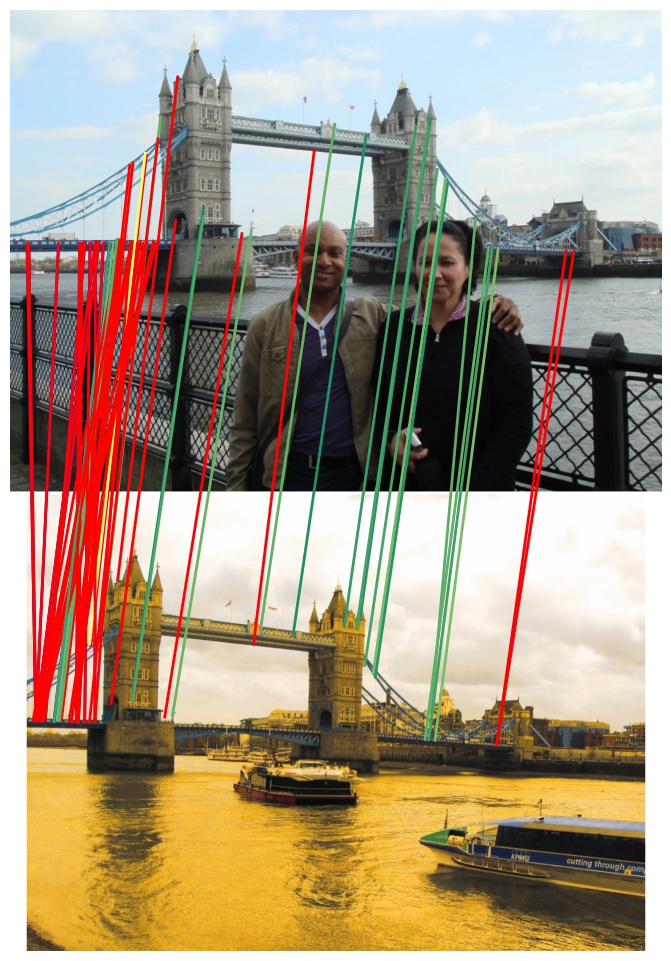

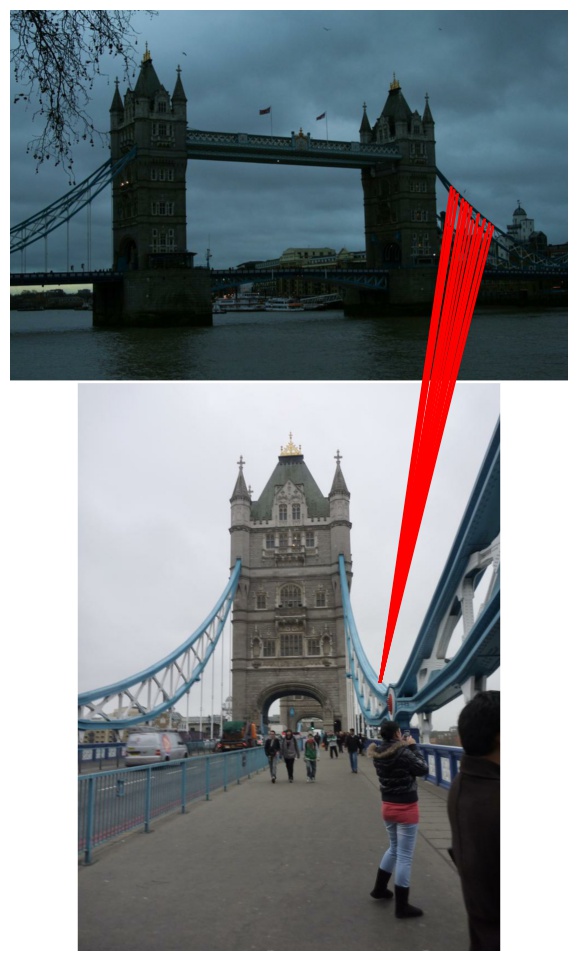

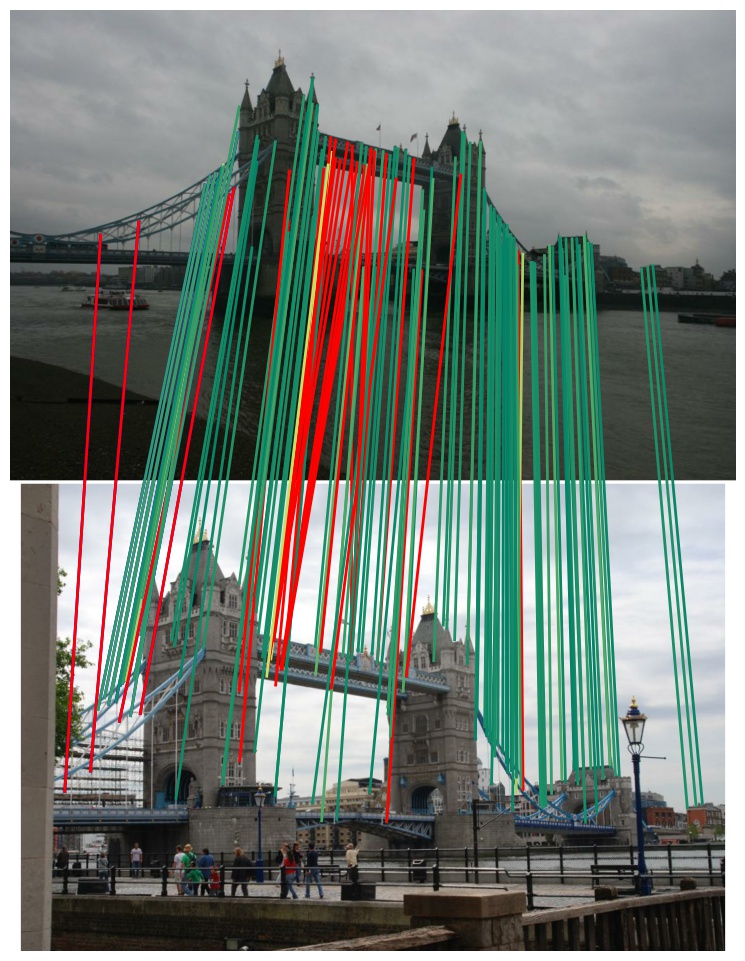

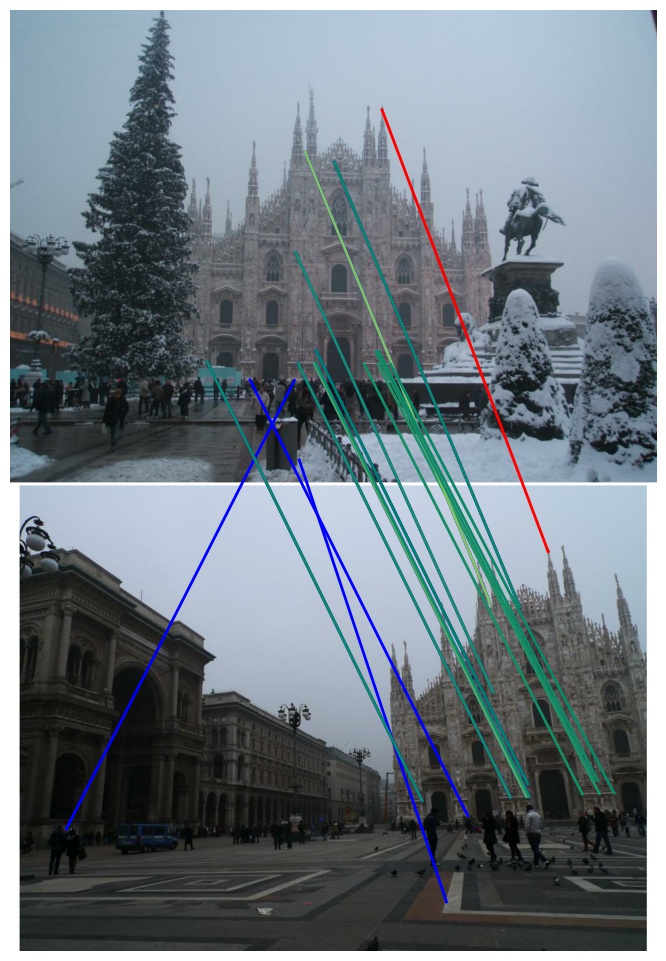

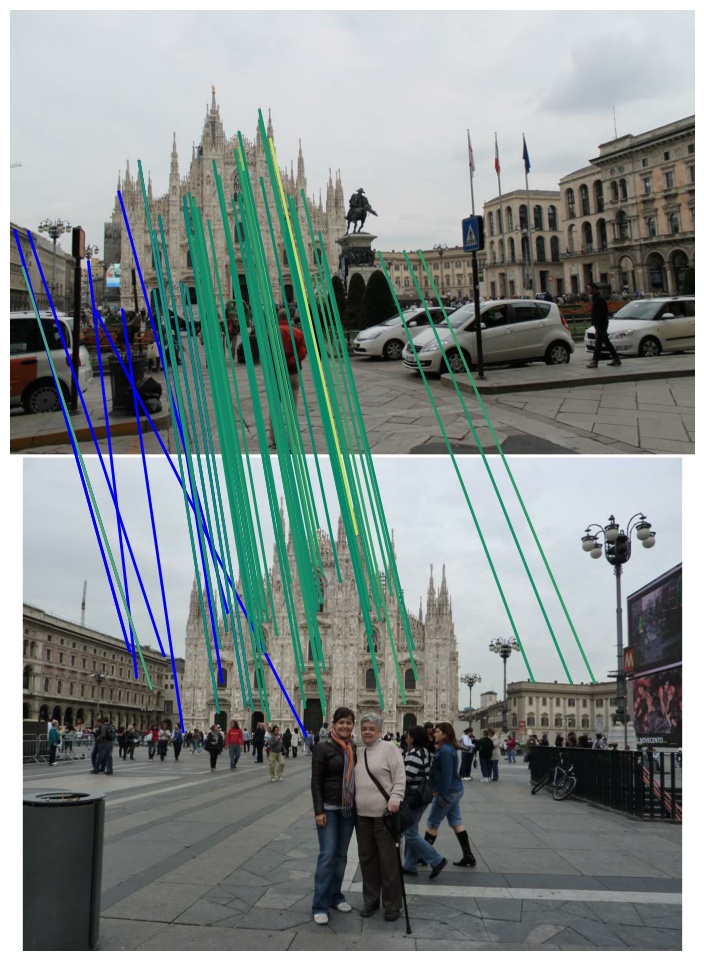

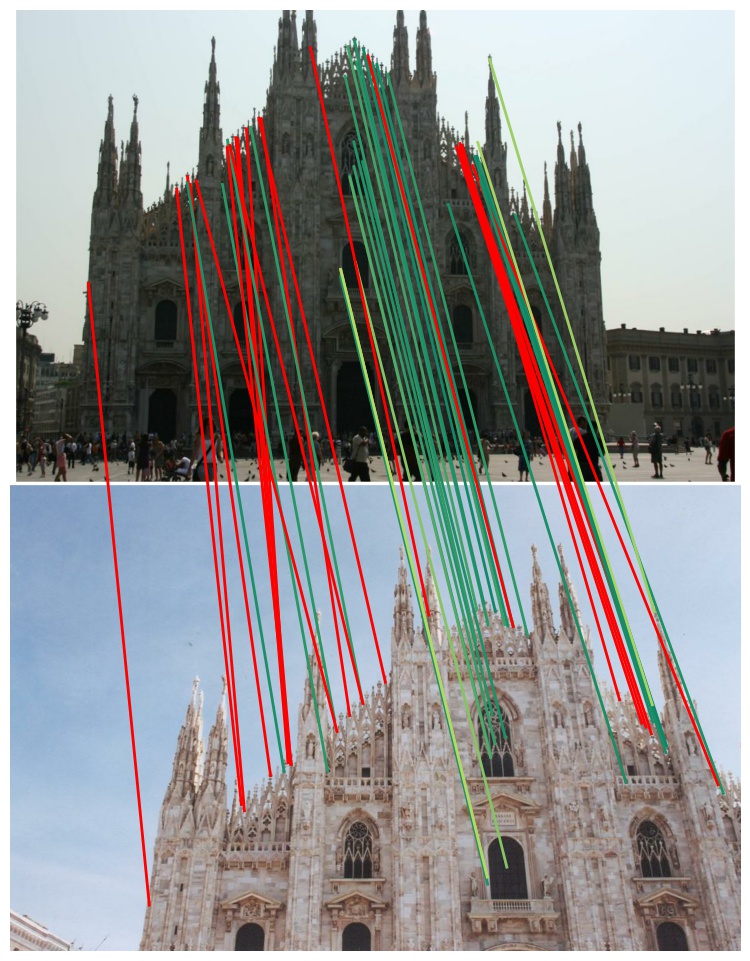

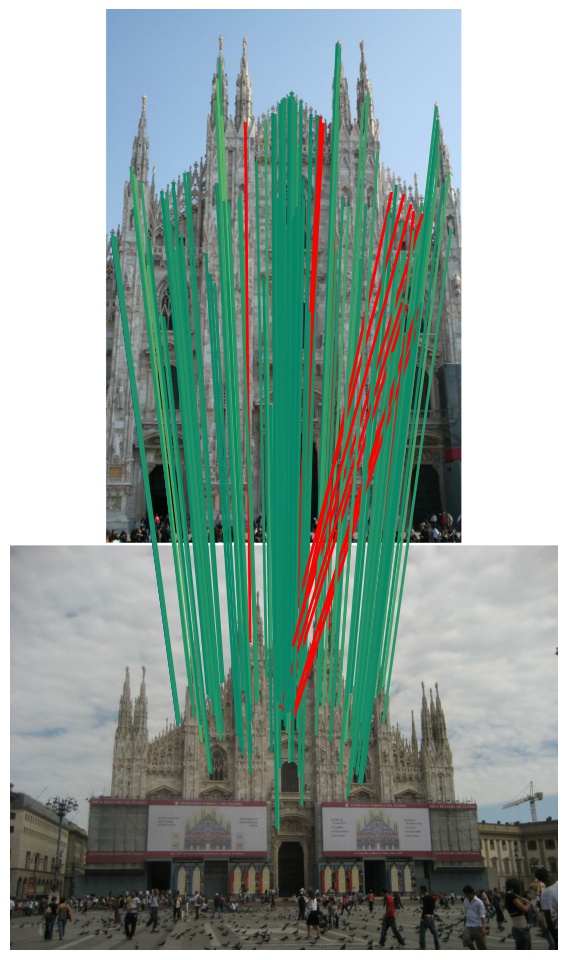

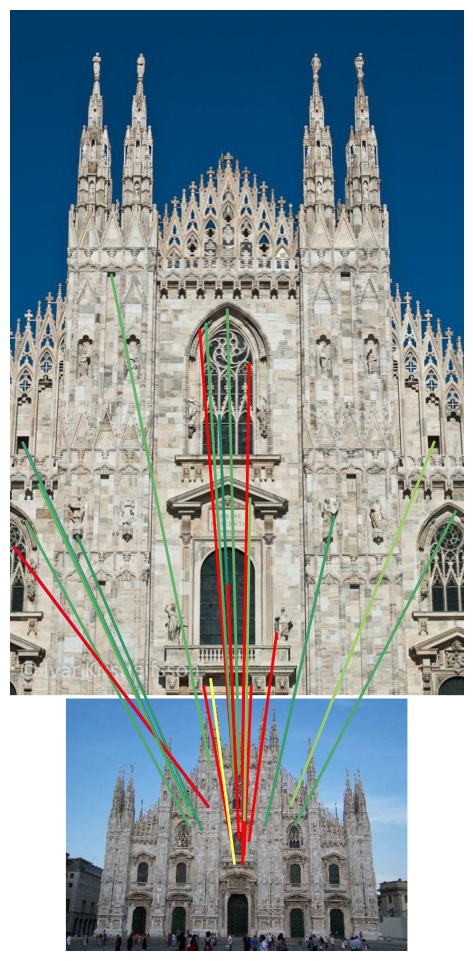

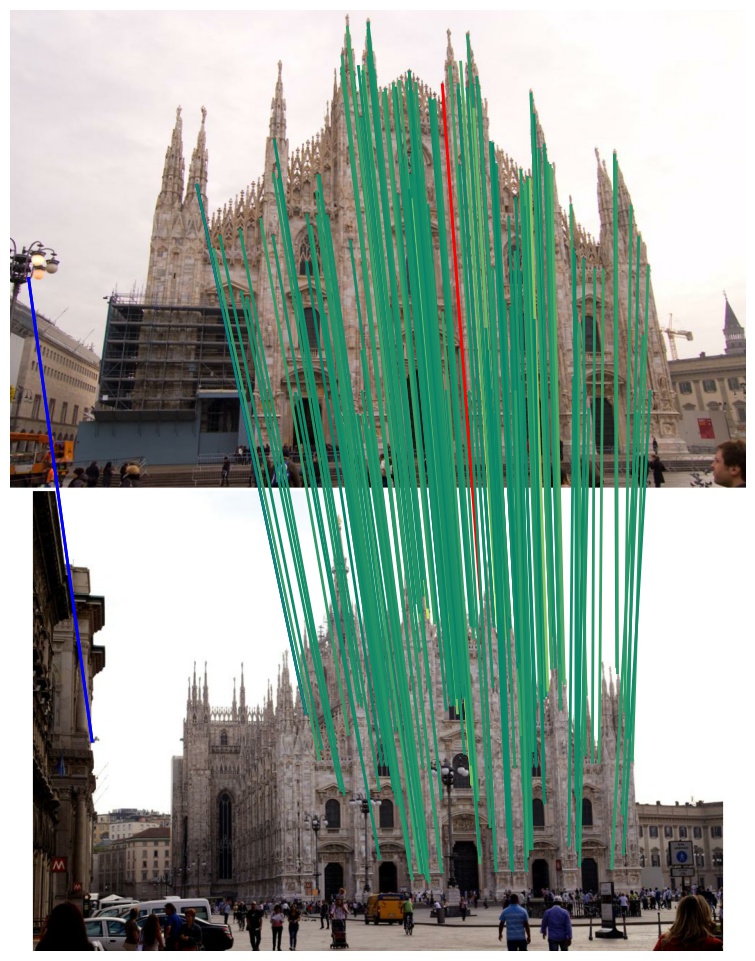

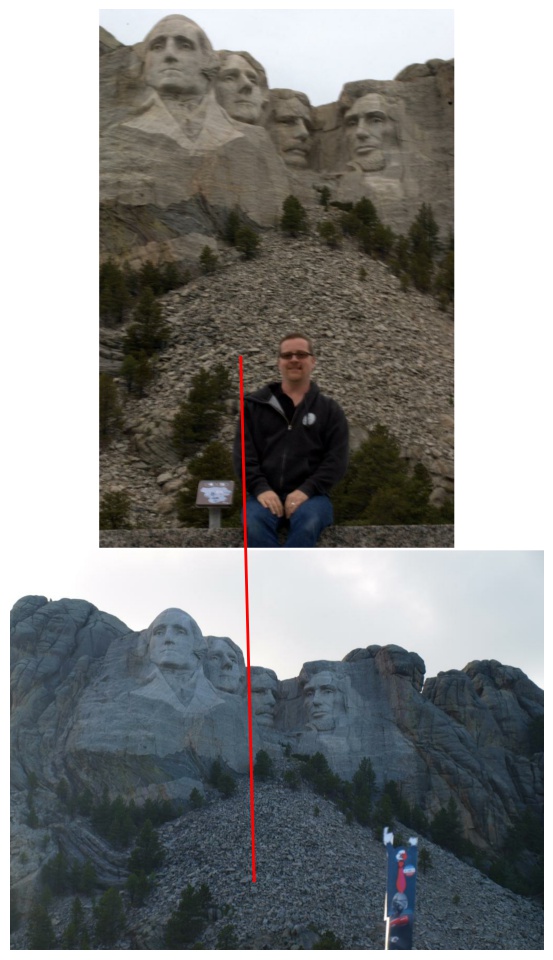

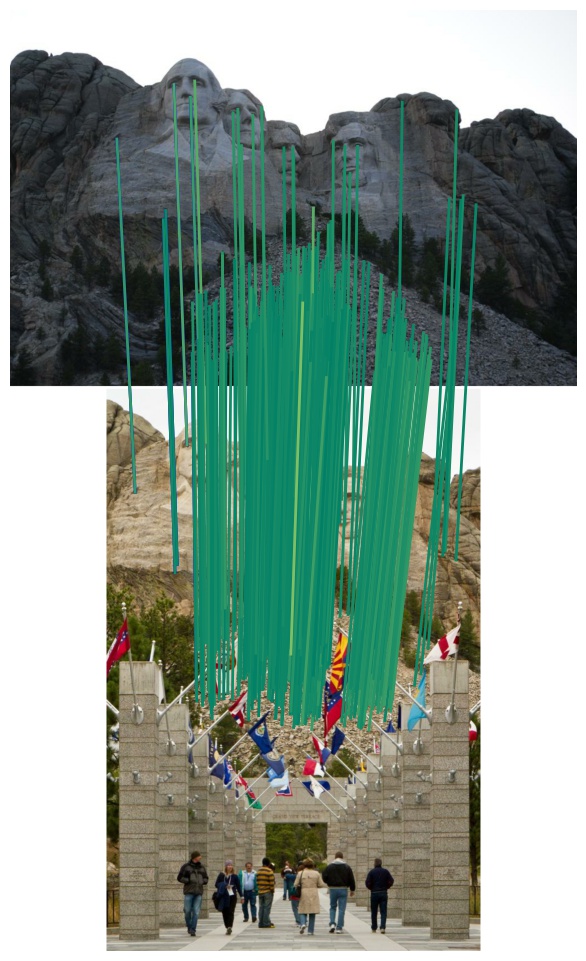

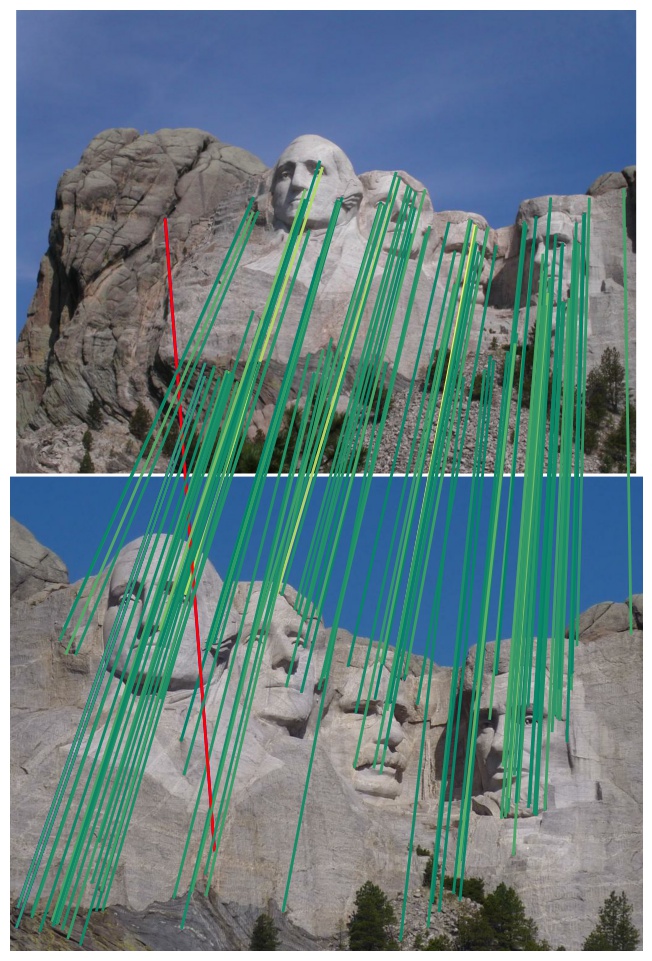

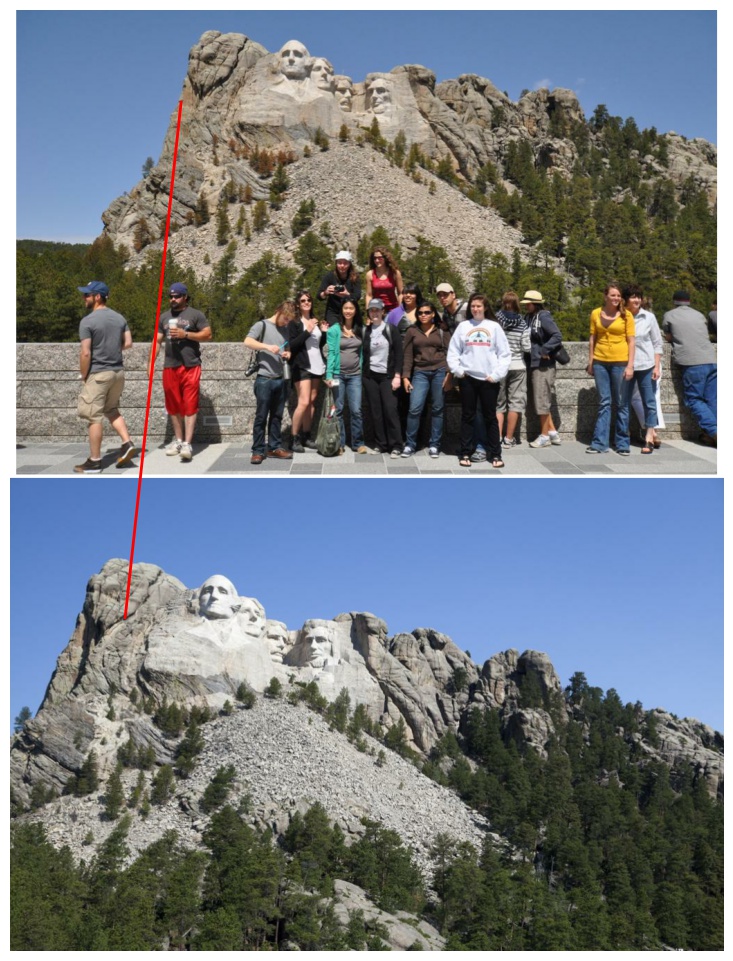

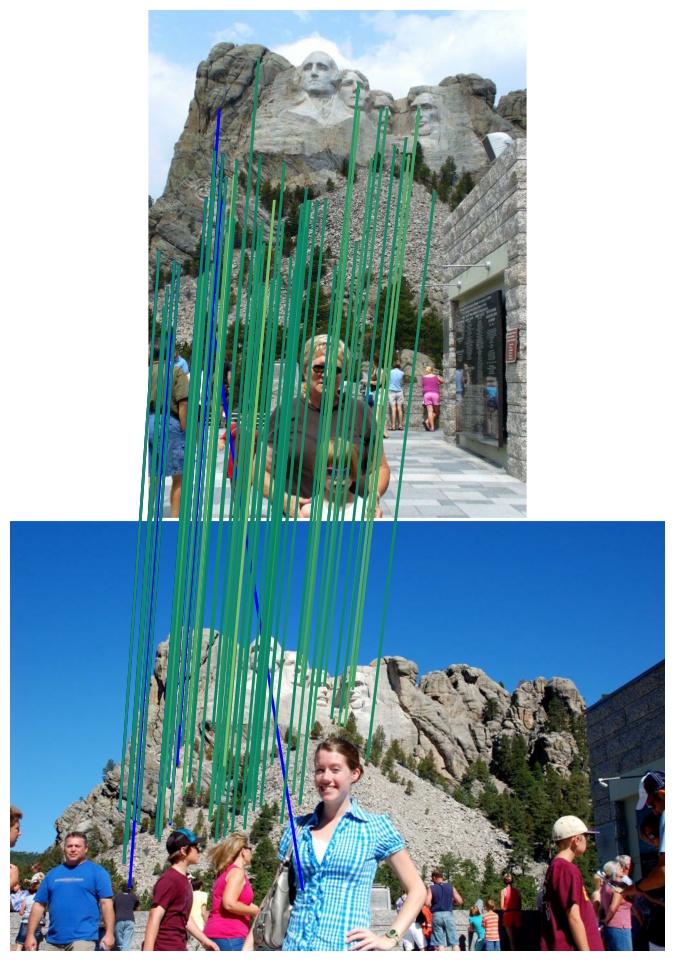

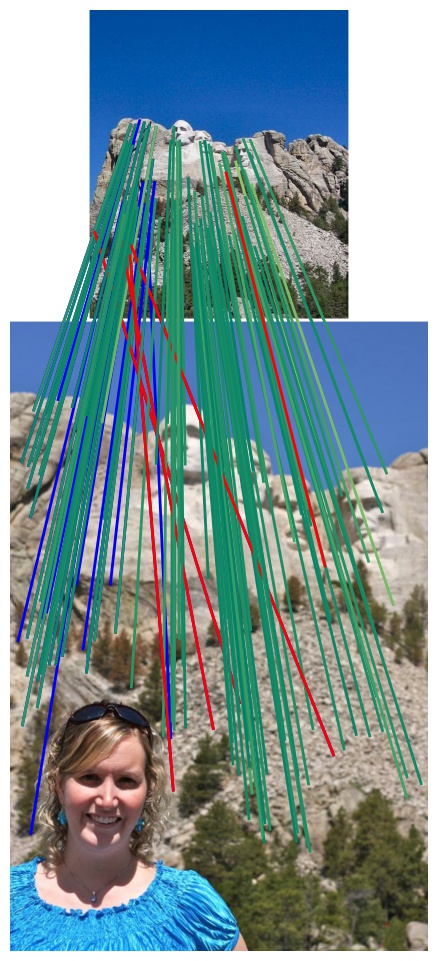

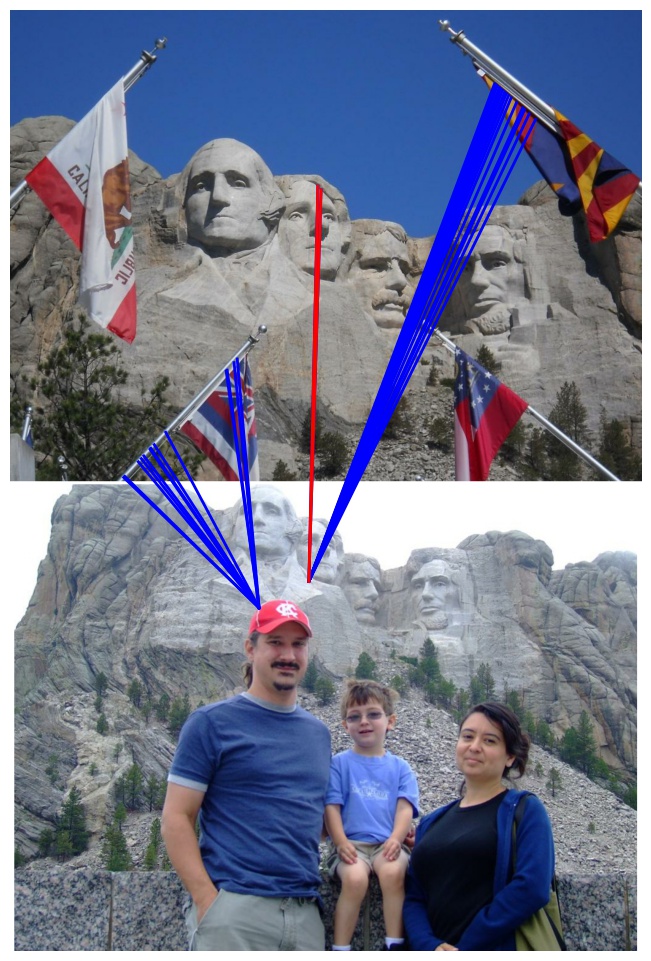

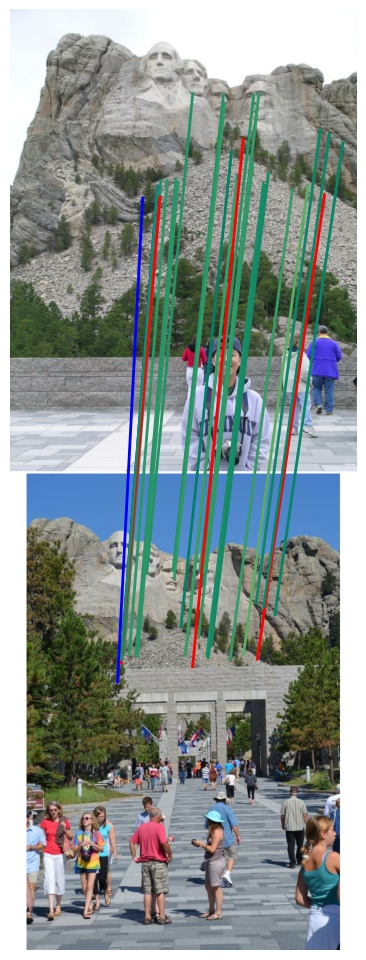

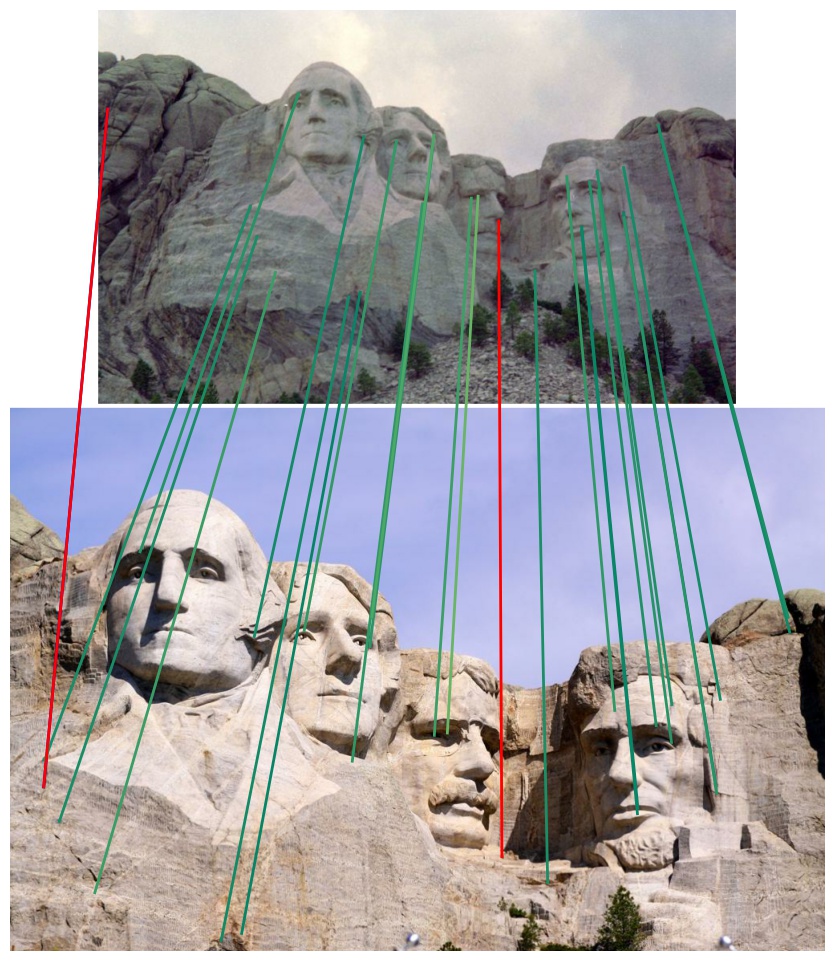

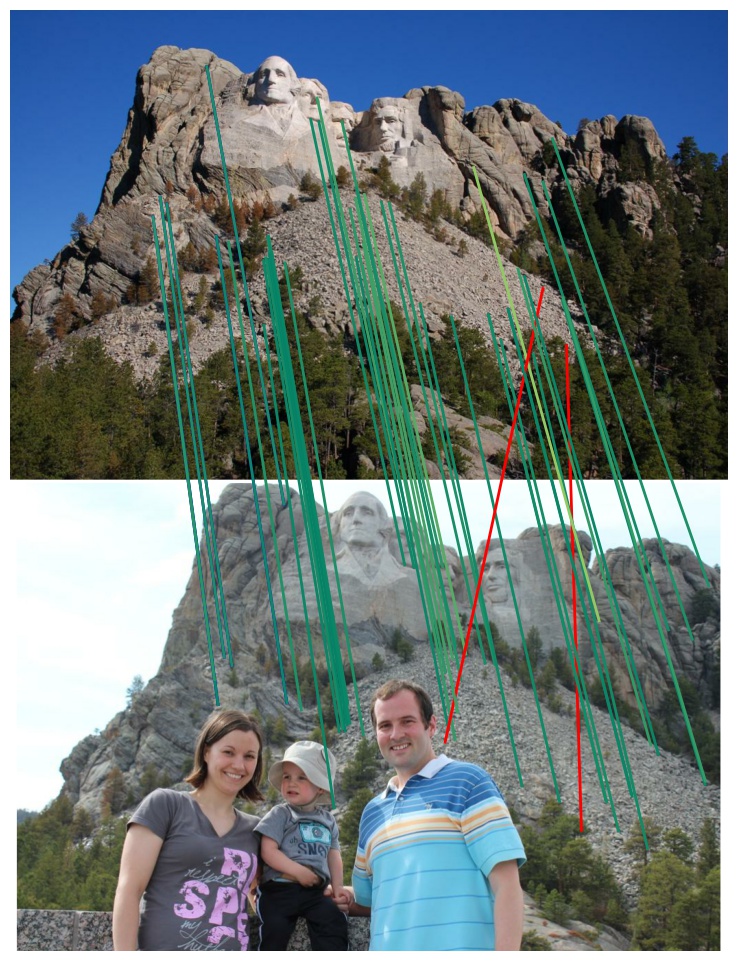

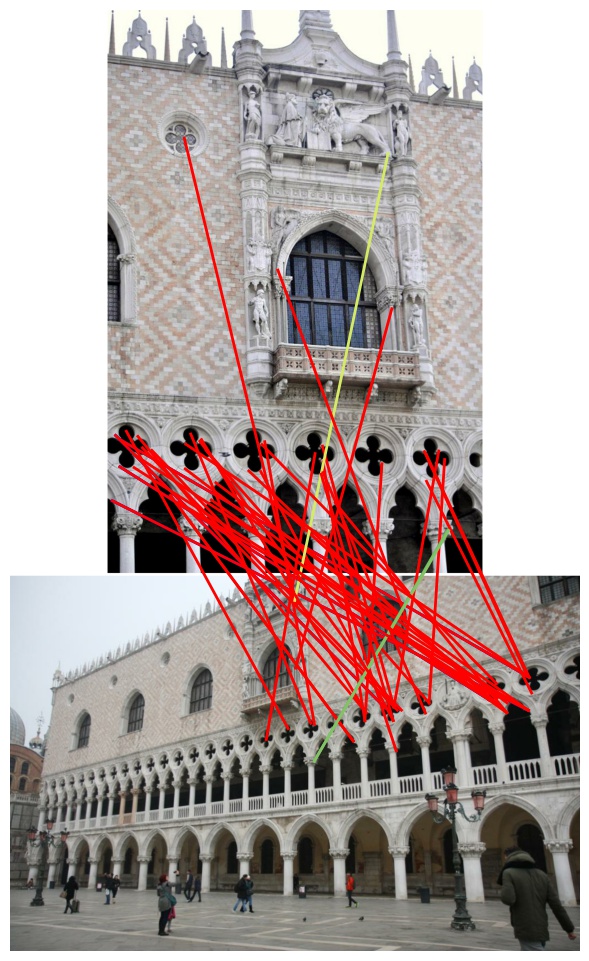

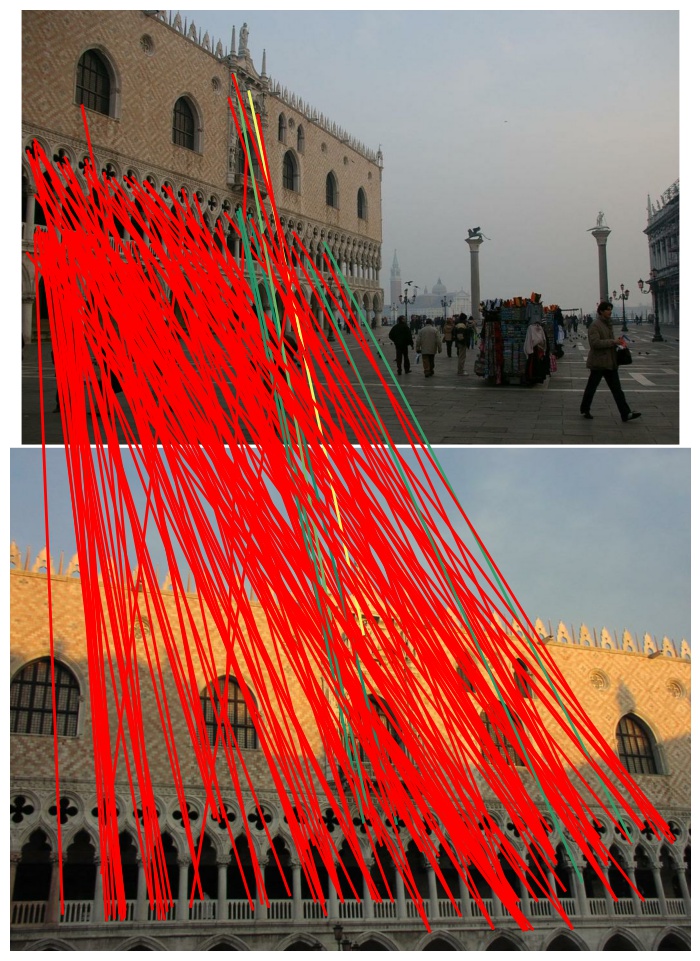

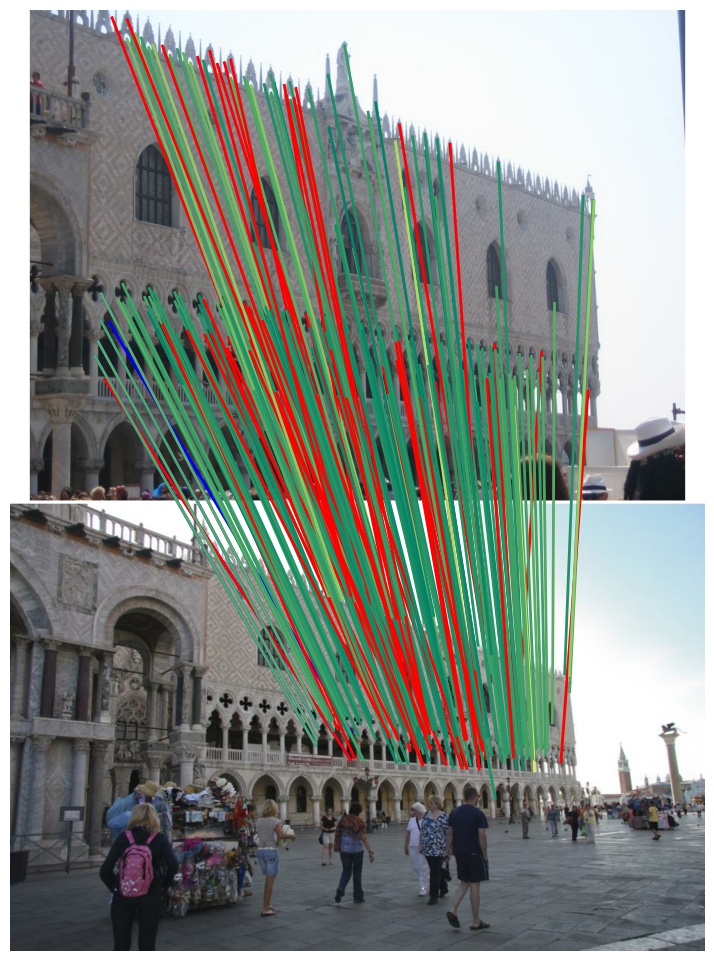

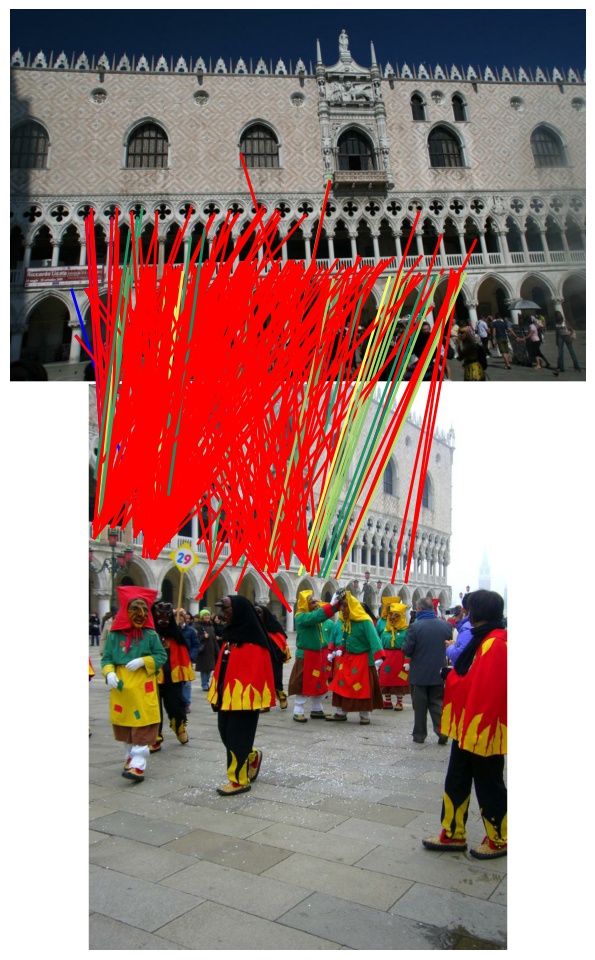

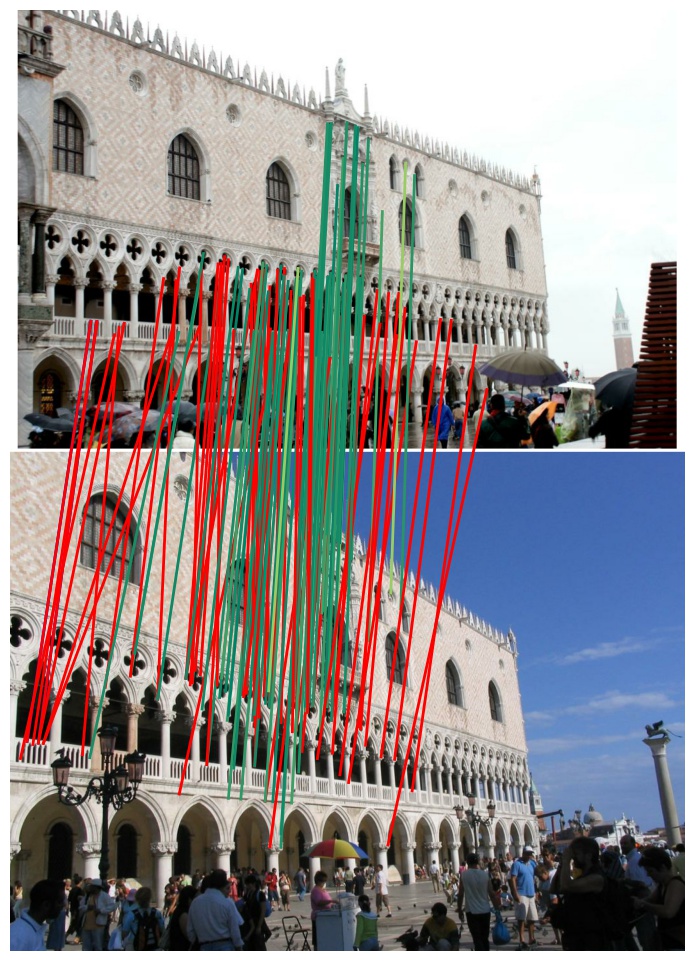

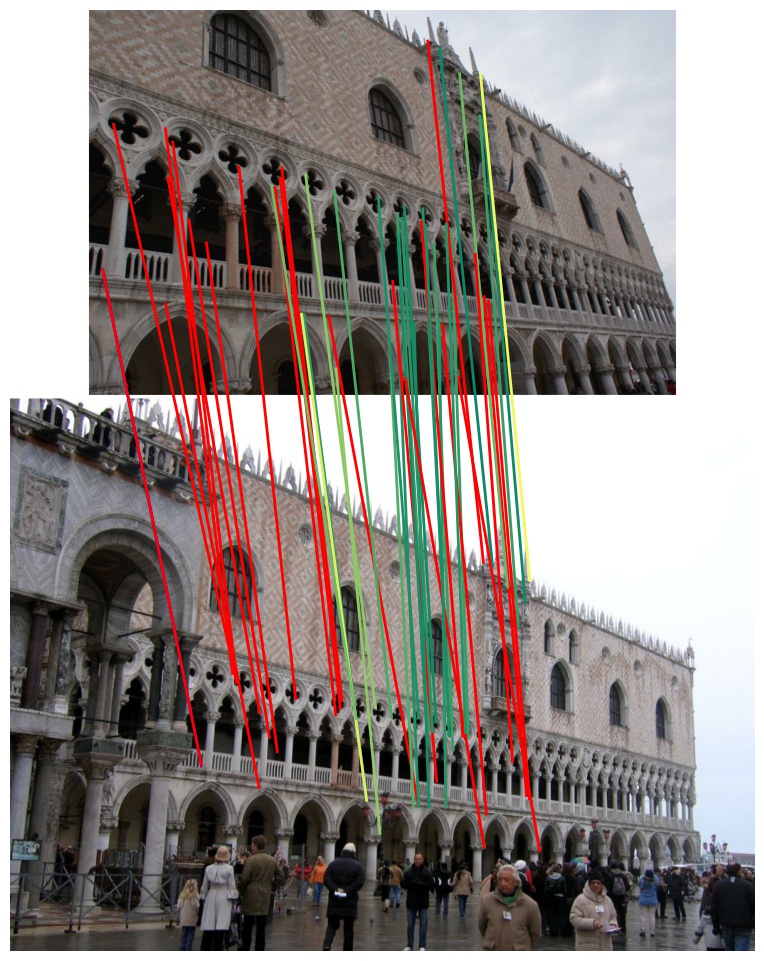

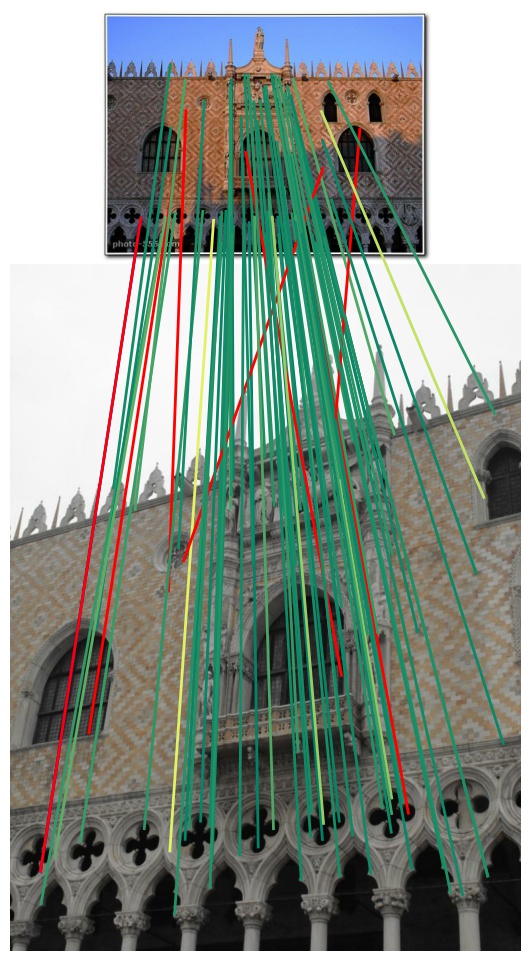

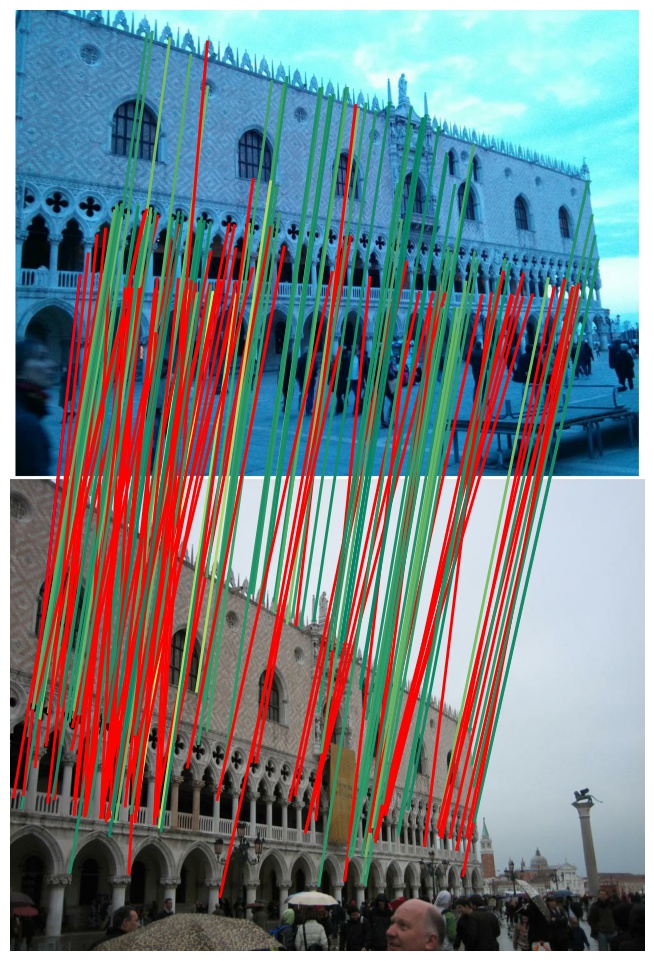

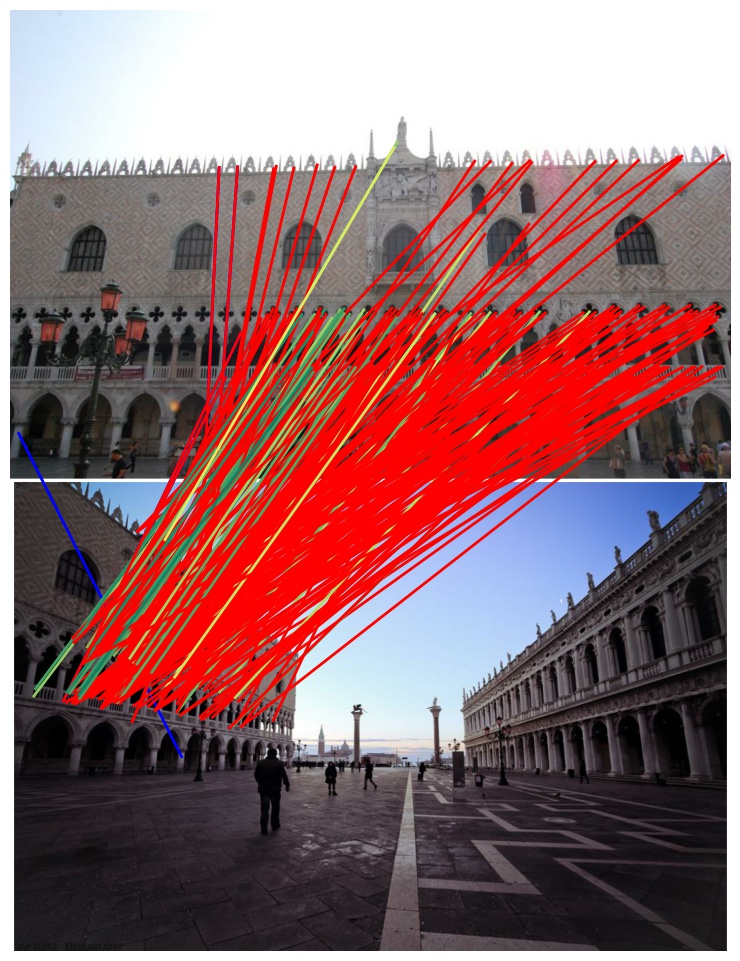

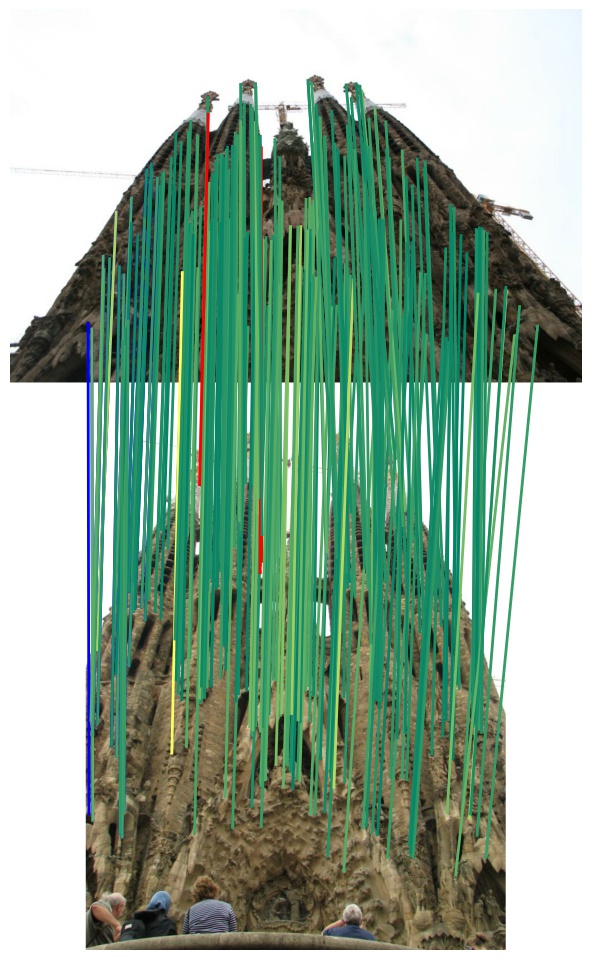

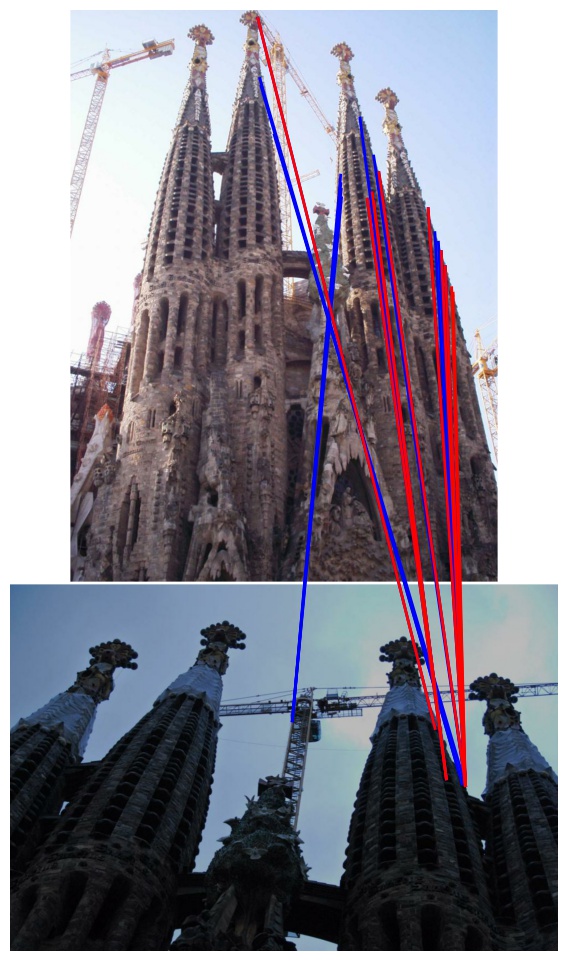

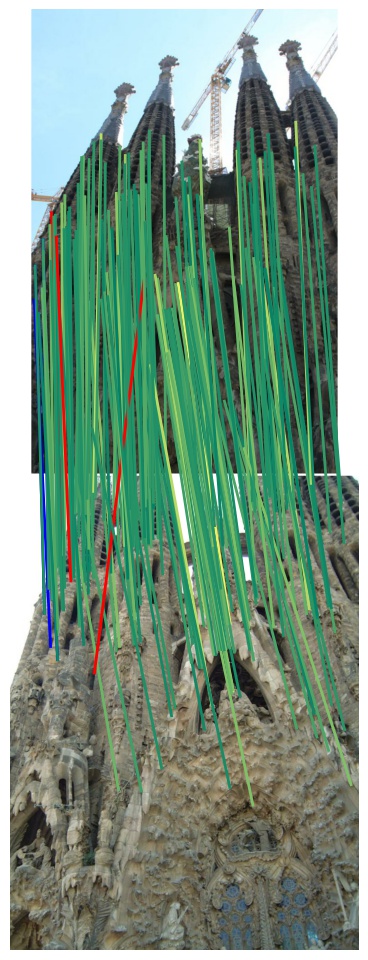

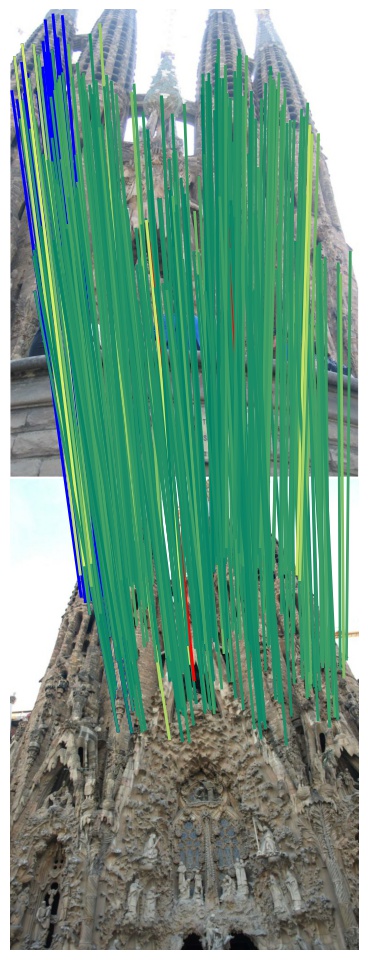

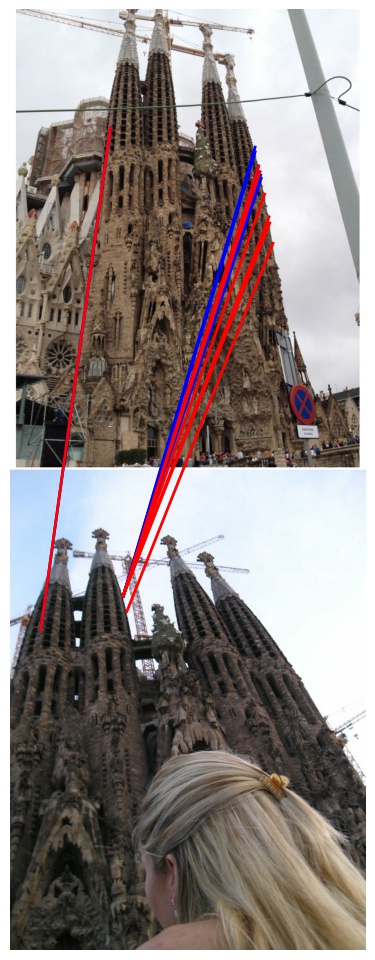

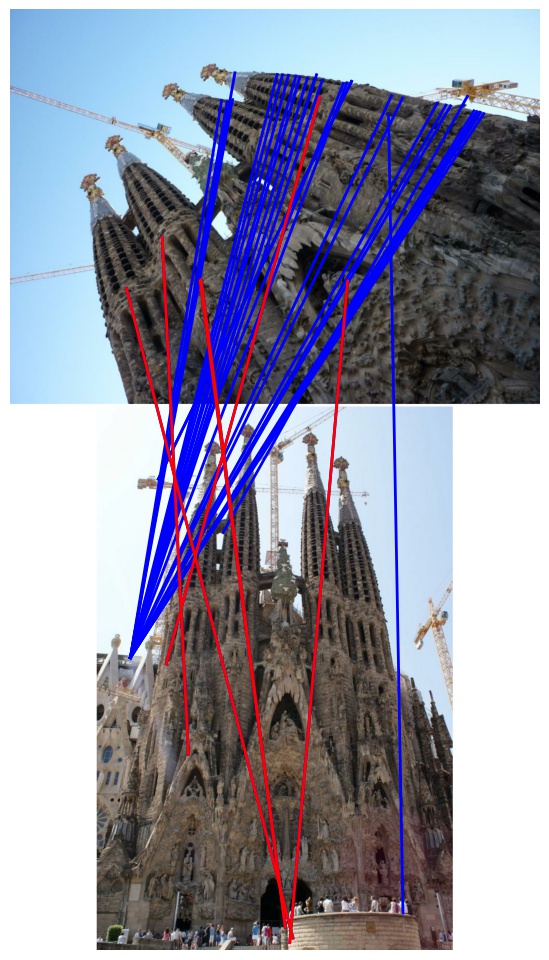

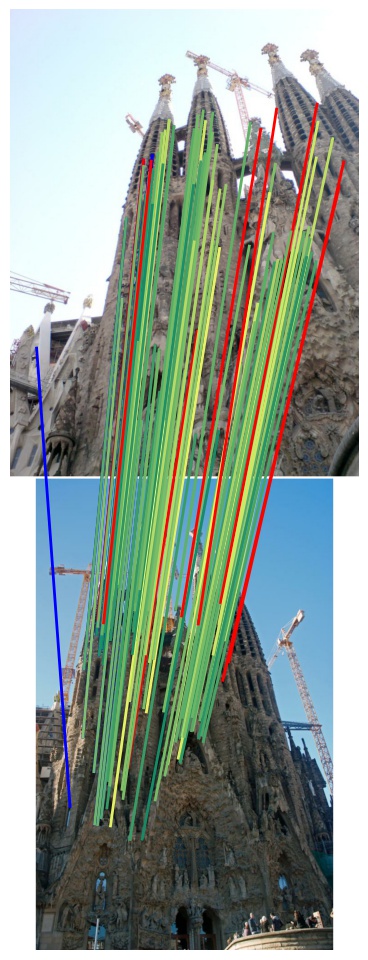

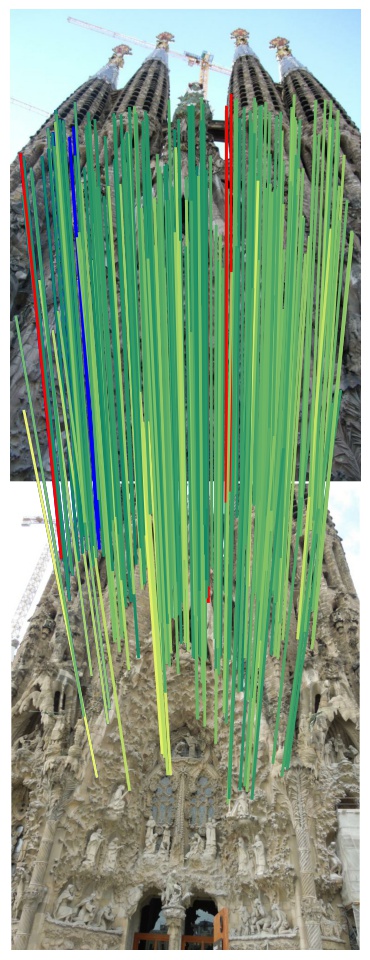

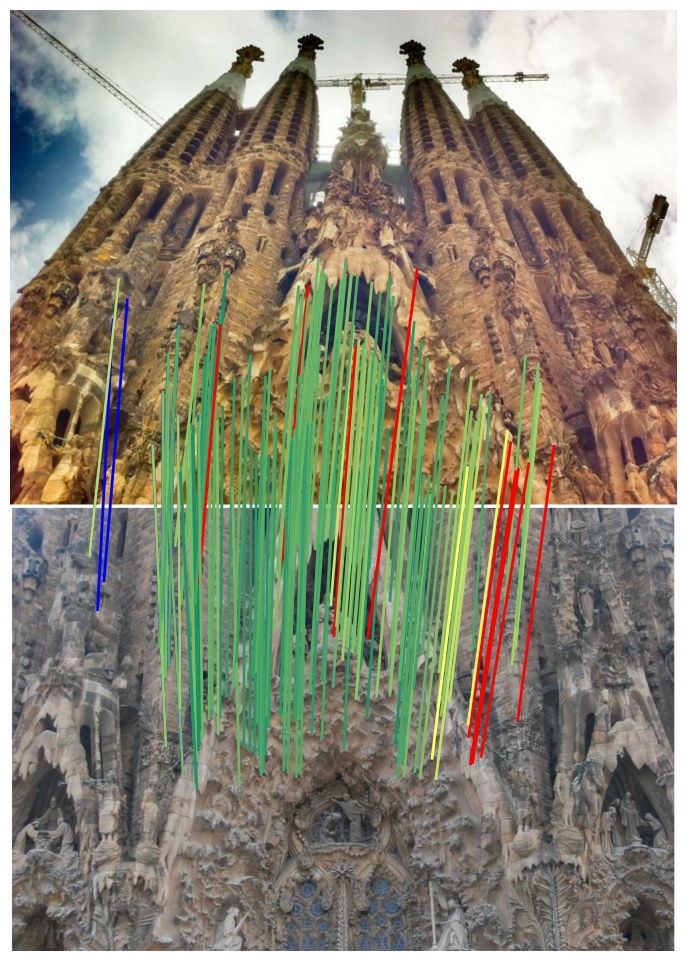

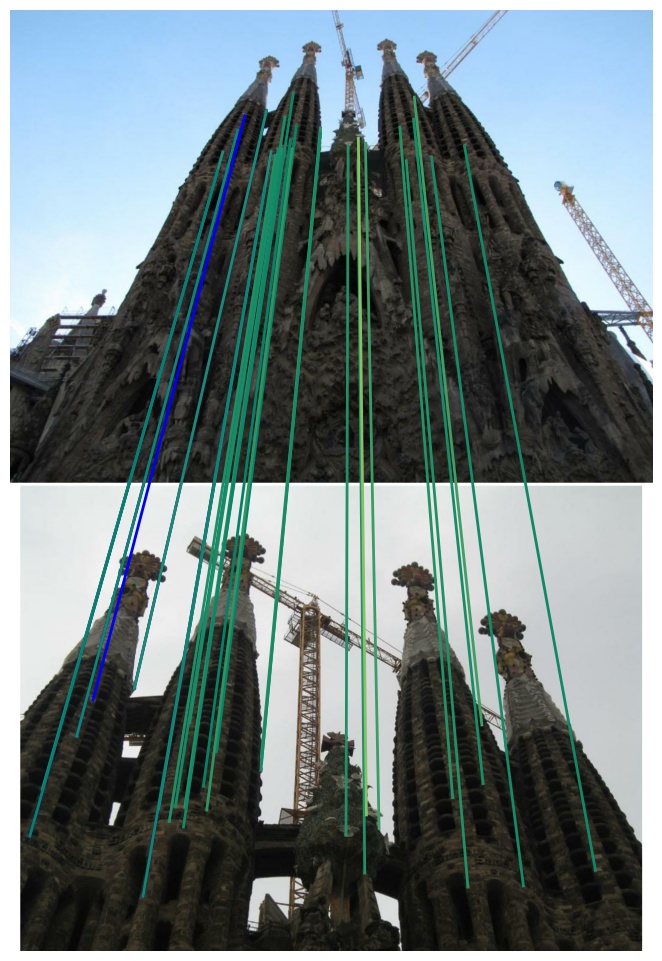

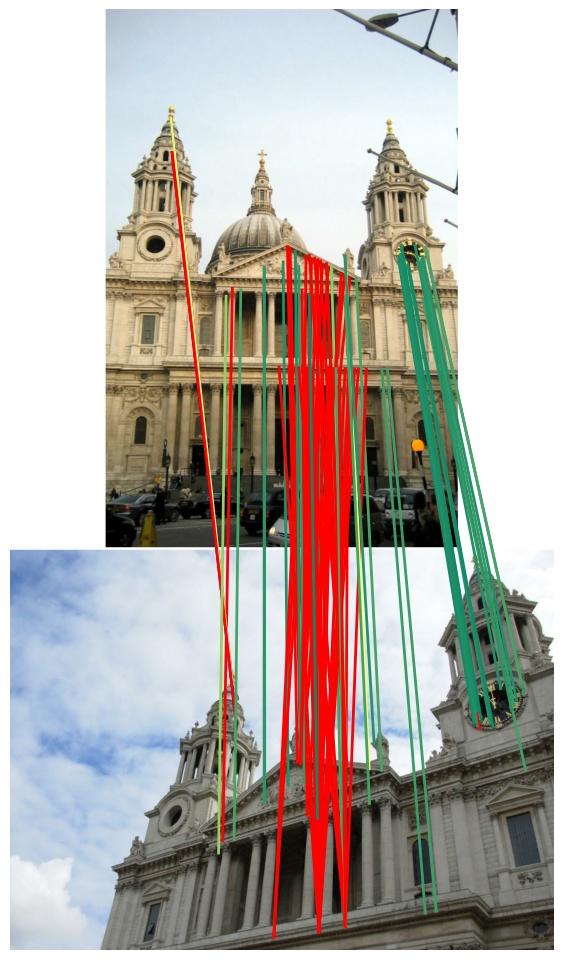

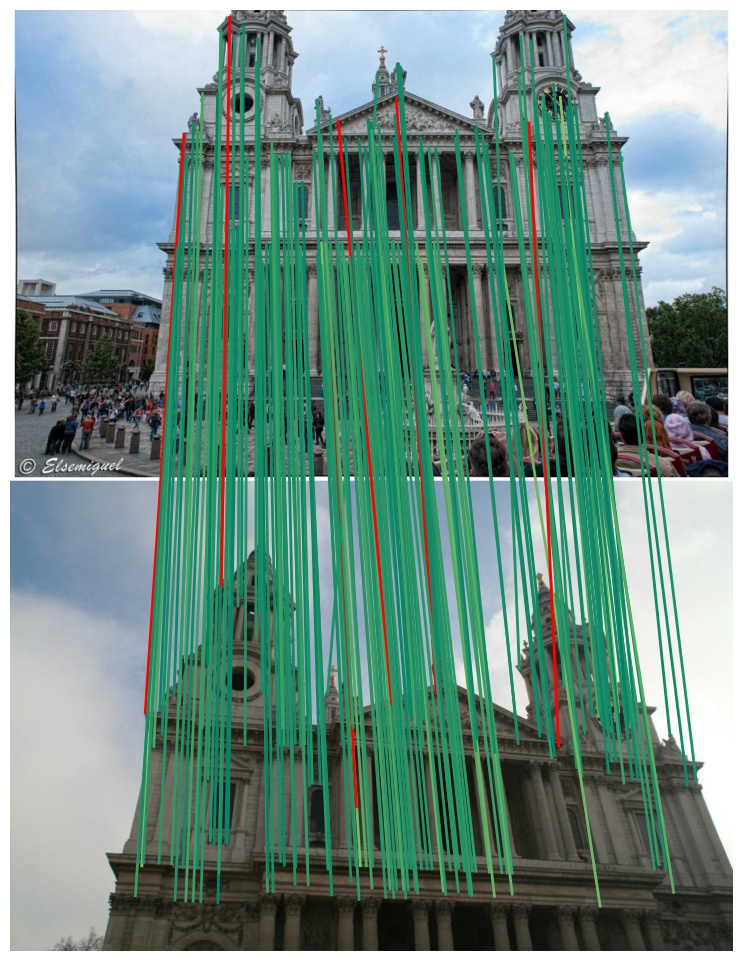

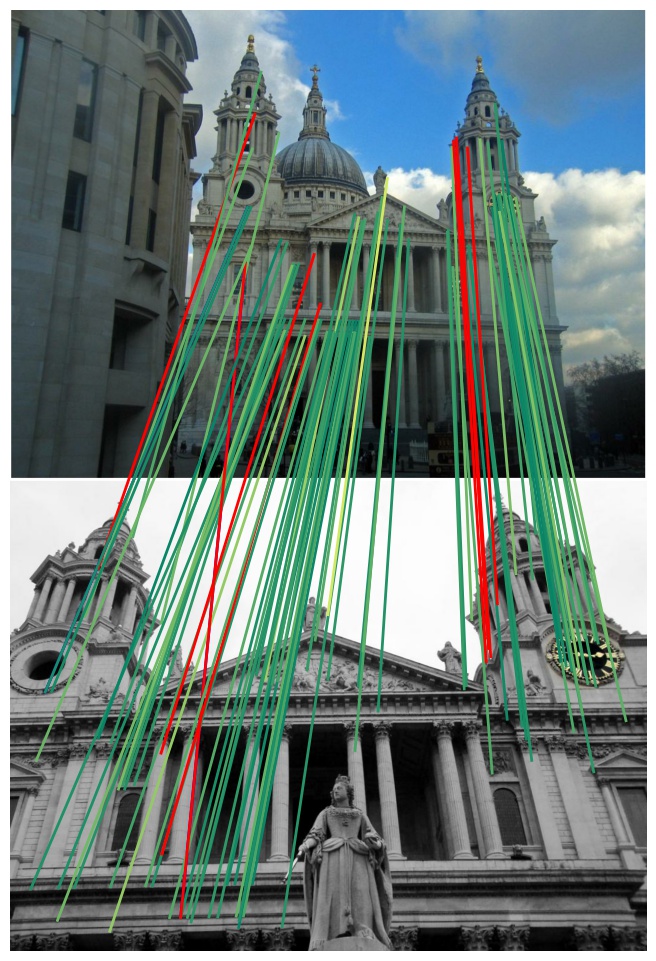

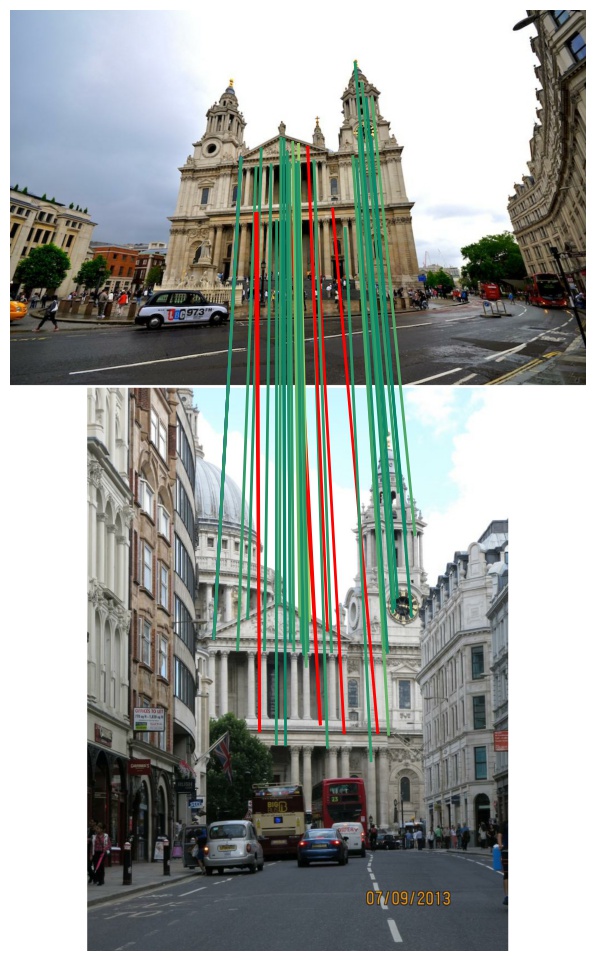

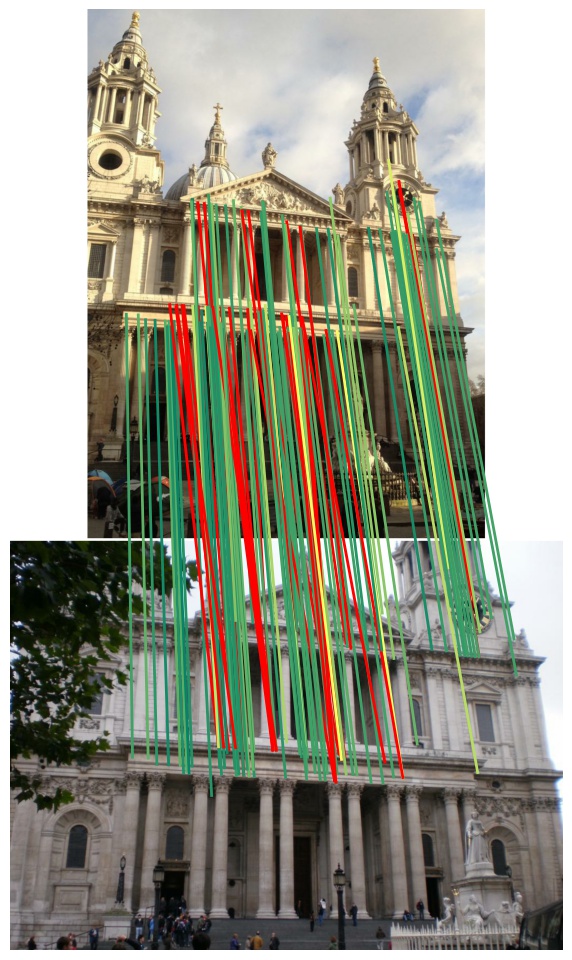

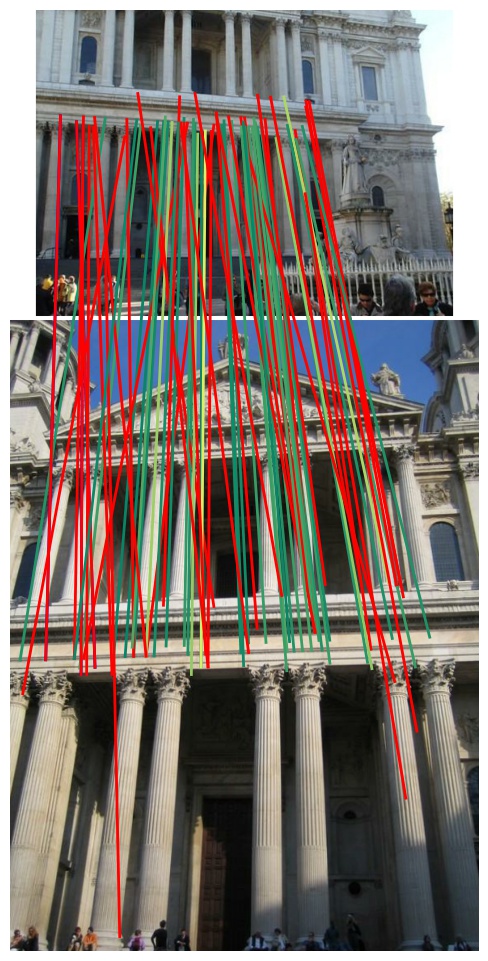

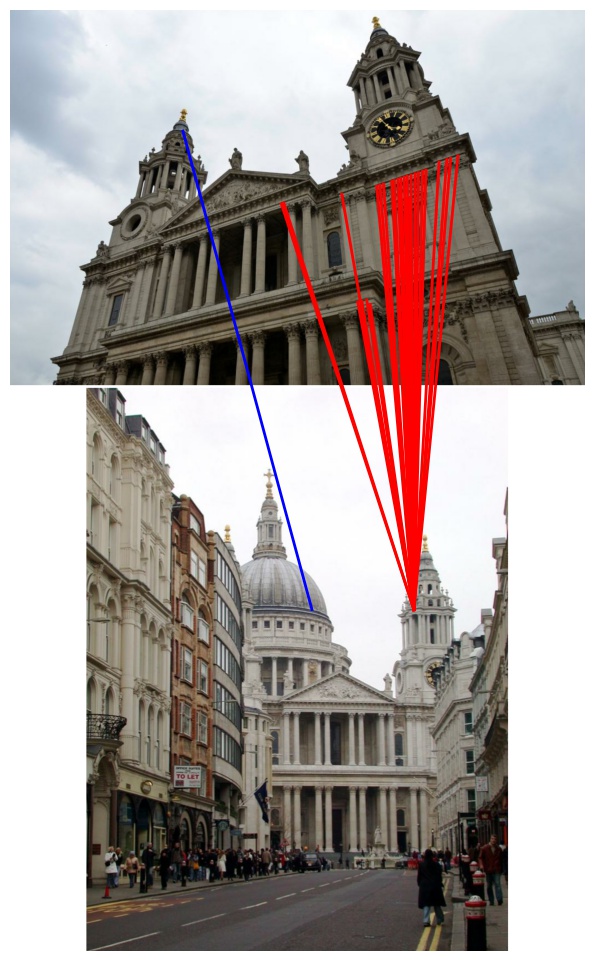

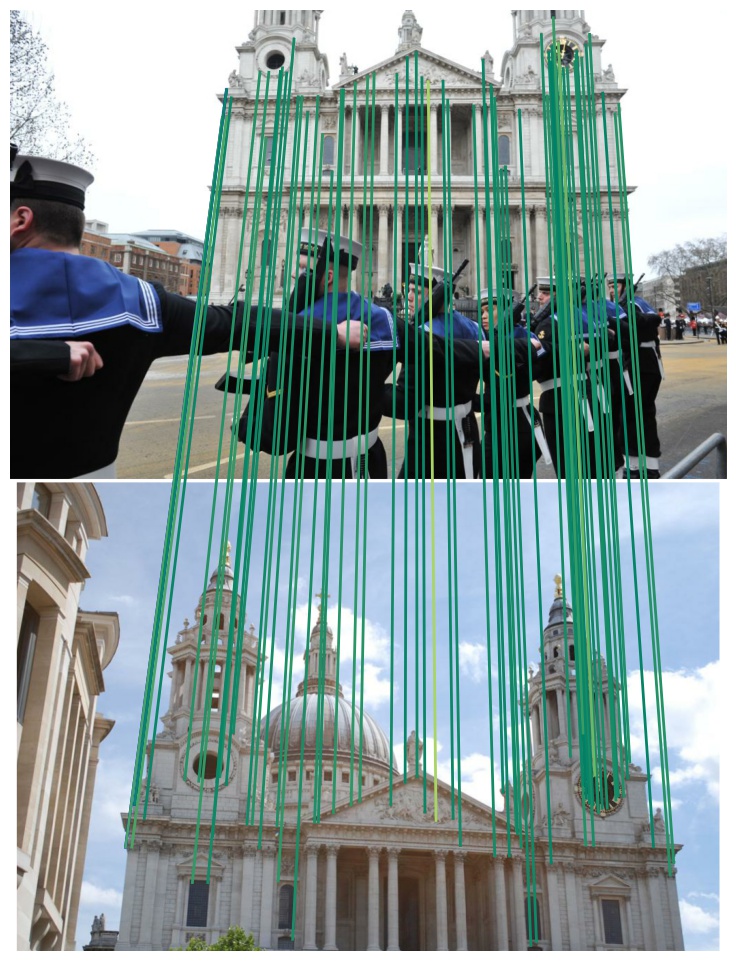

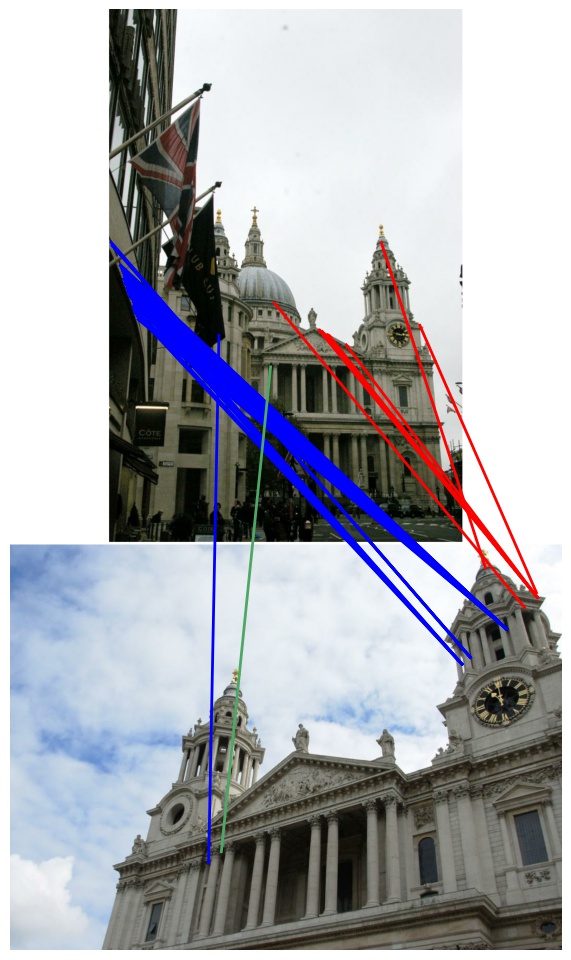

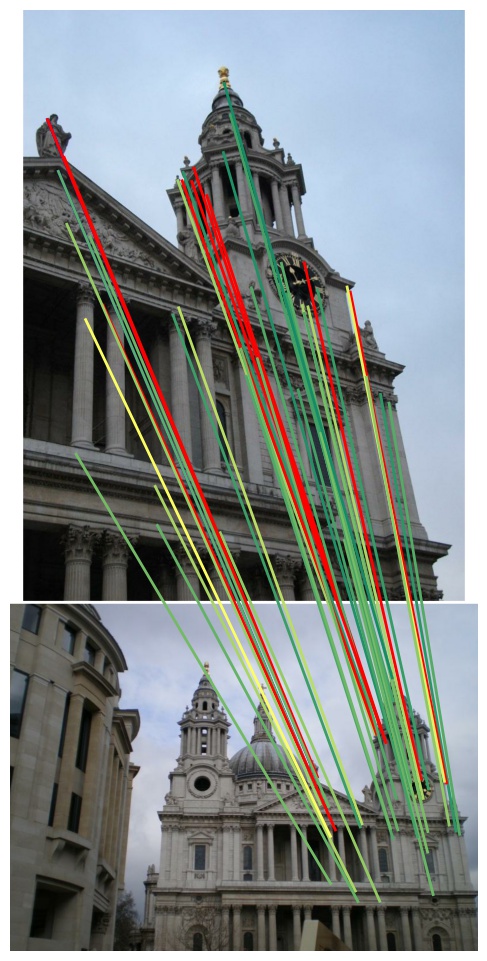

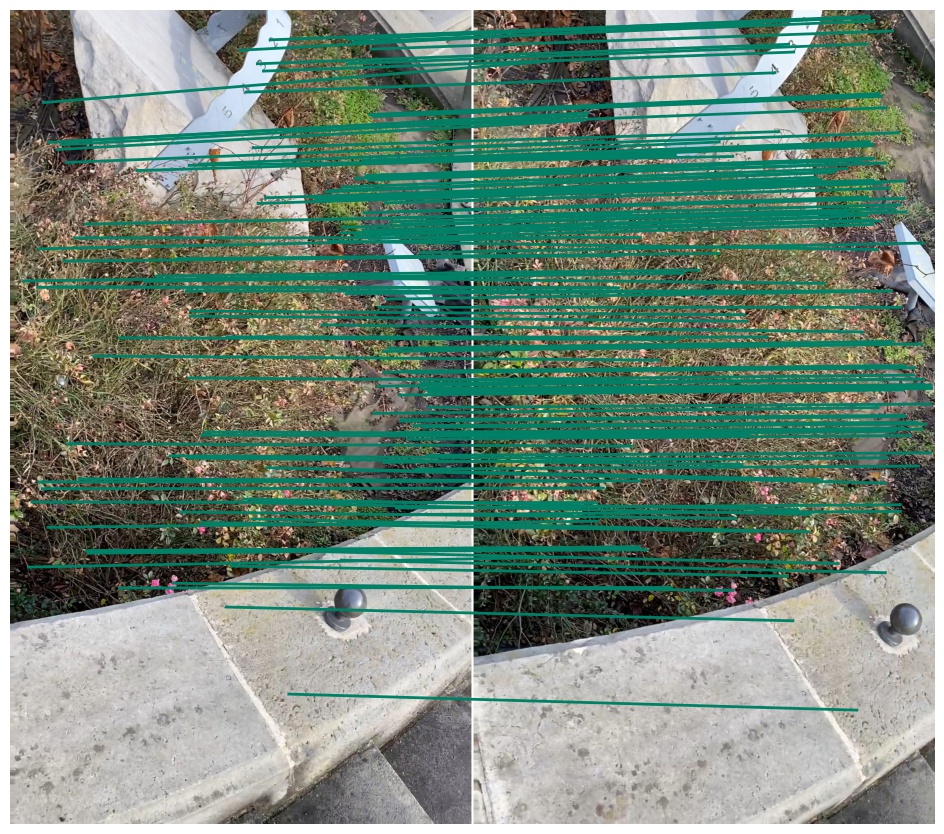

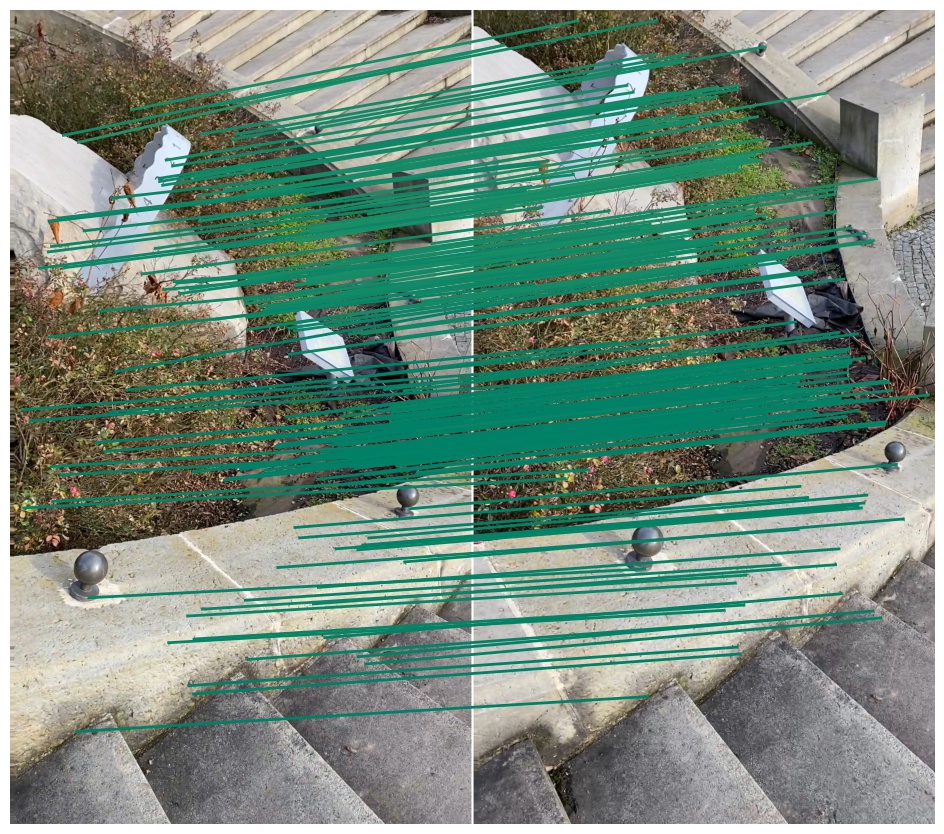

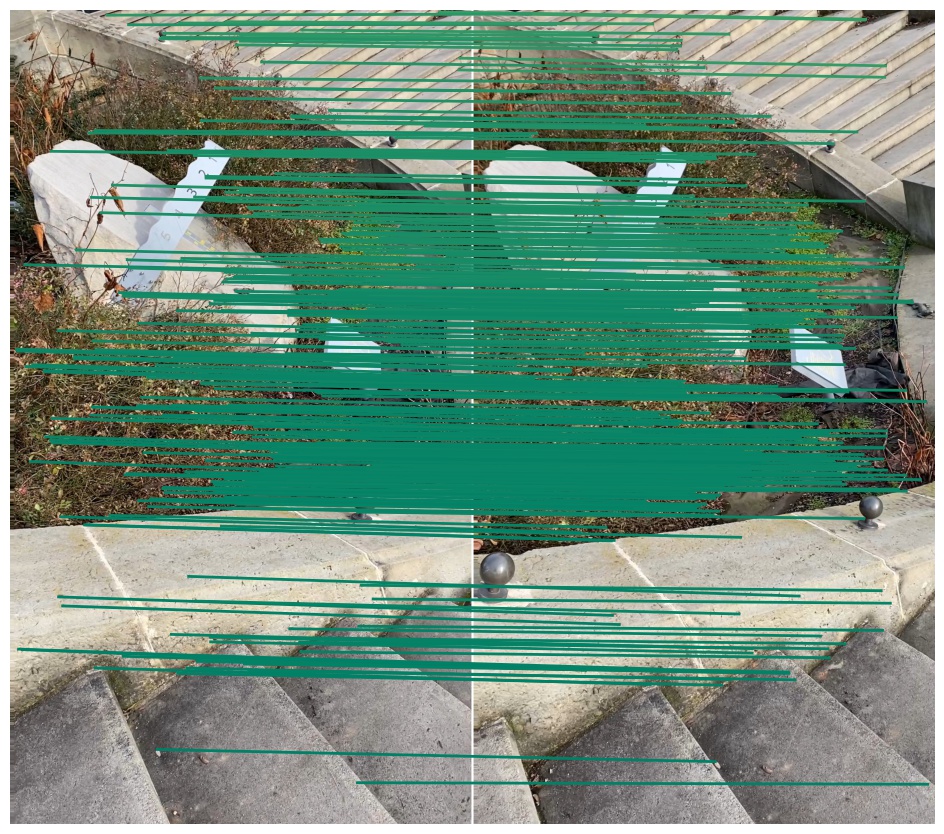

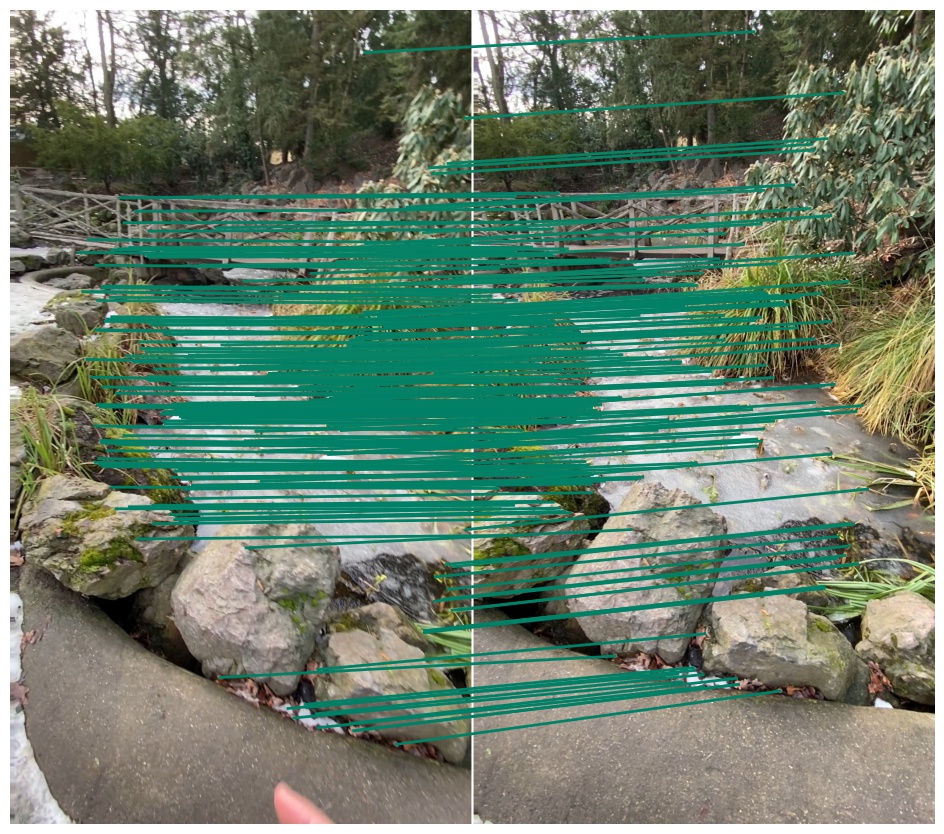

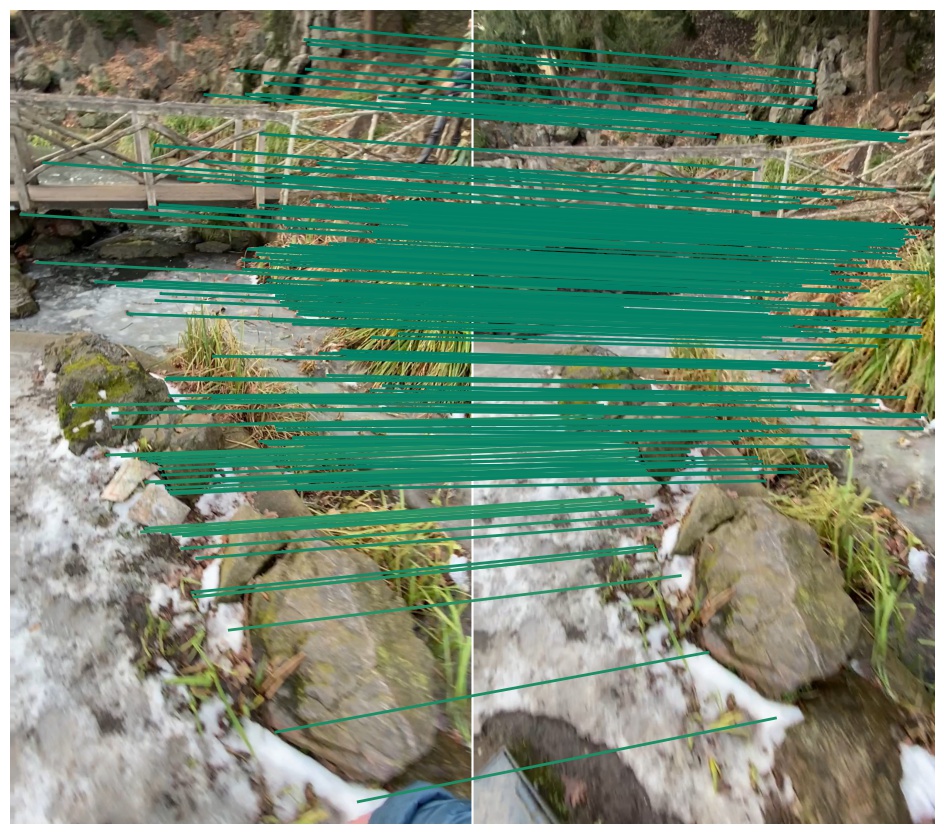

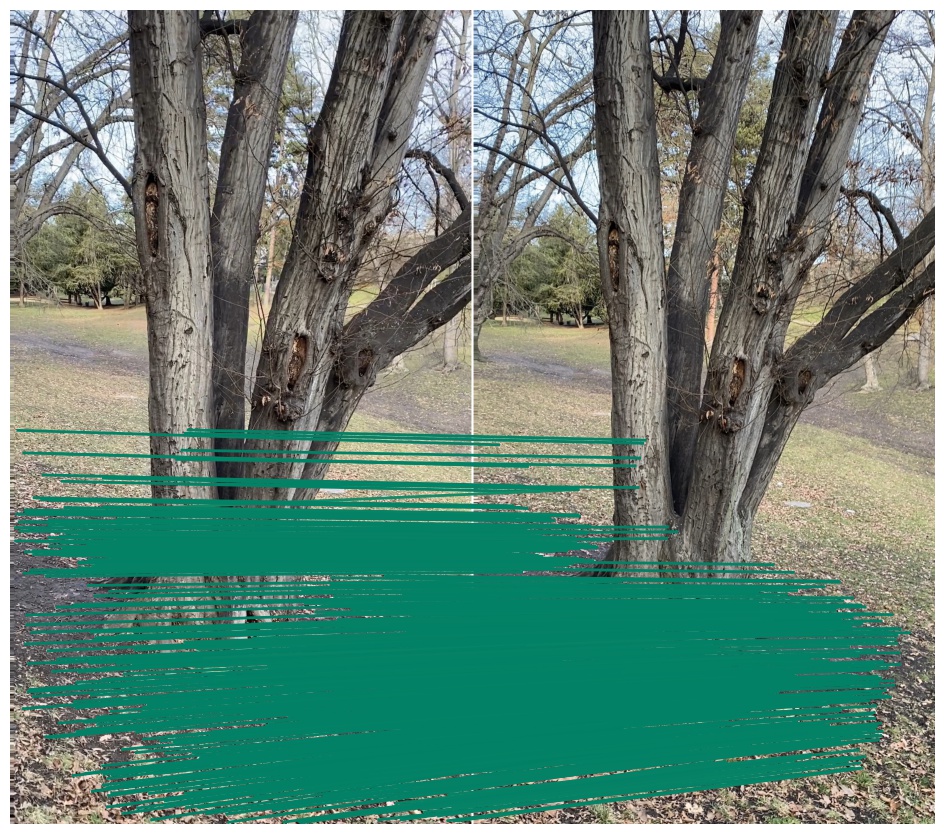

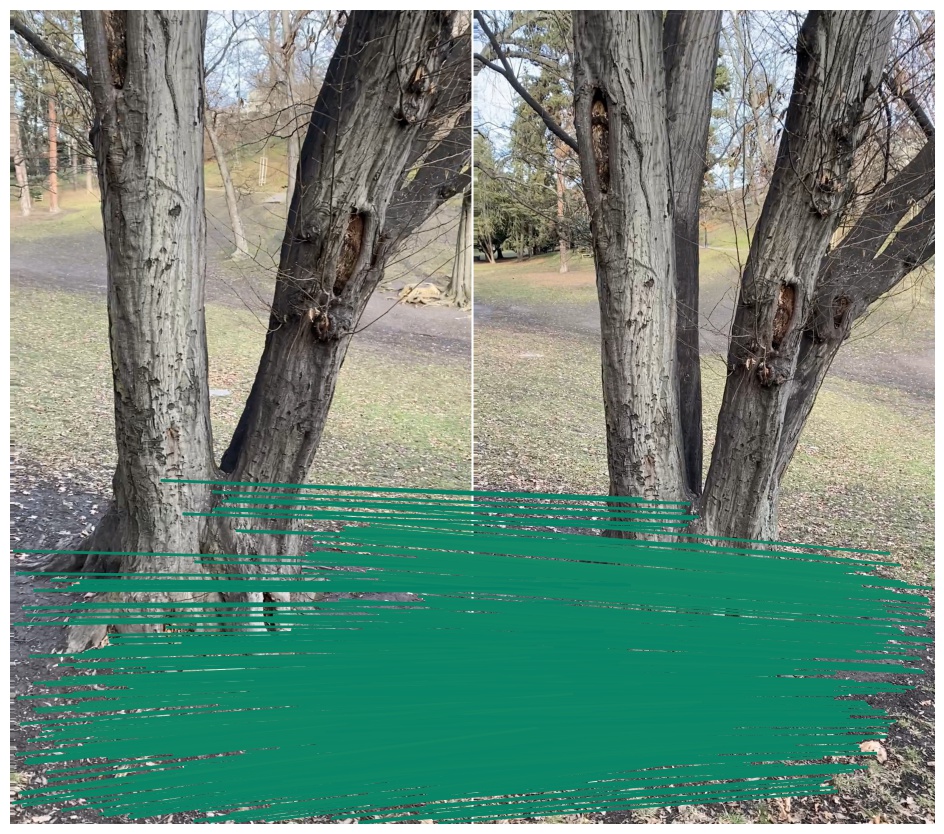

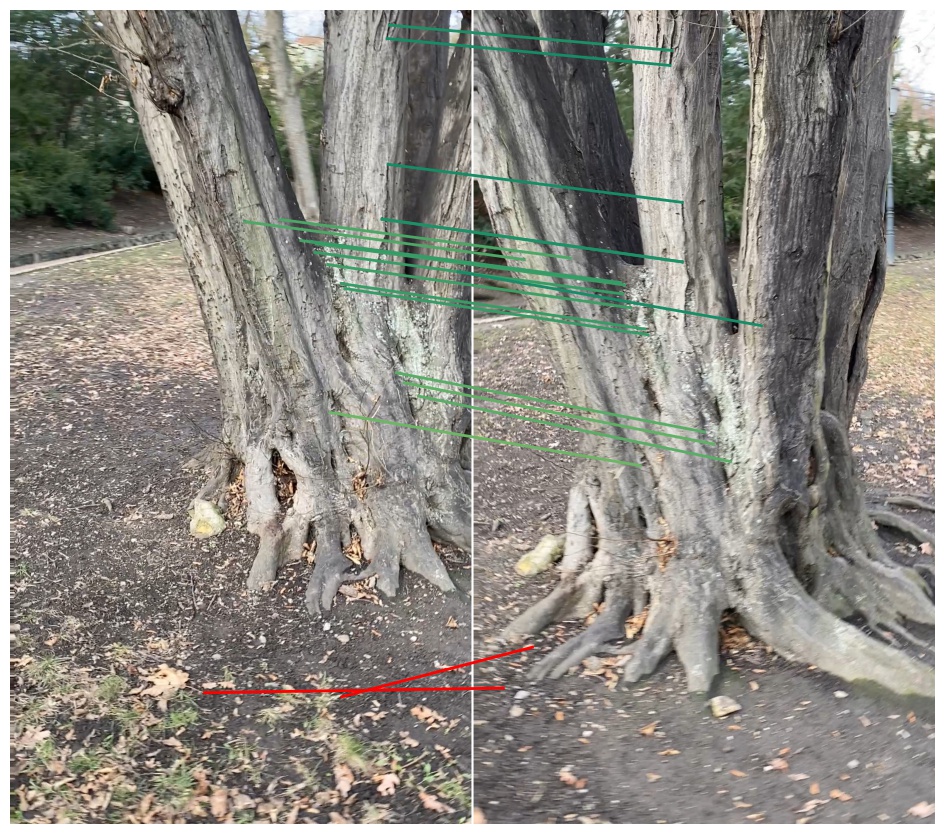

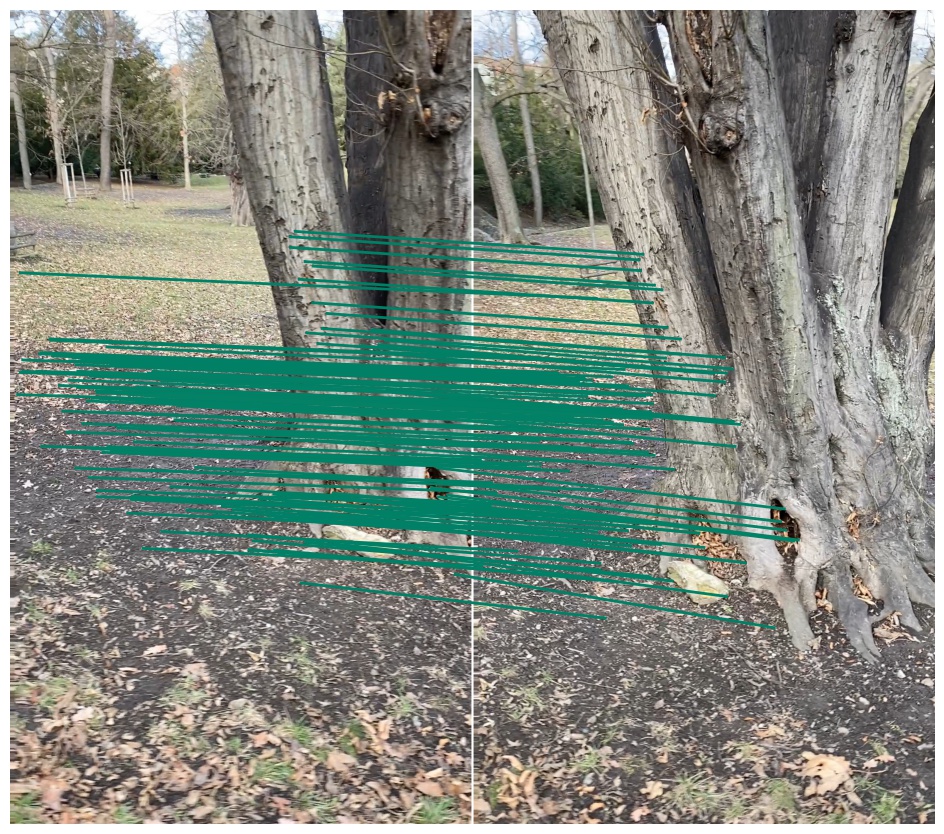

We show the inliers that survive the robust estimation loop (RANSAC or a variant), or the matches supplied with the submission if it uses custom matches, and use the depth estimates to determine whether they are correct. Matches with an error above the 5-pixel threshold are drawn in red; those below it are color-coded by error, from green (0 px) to yellow (5 px). Matches without depth estimates are drawn in blue. Note that the depth maps are themselves estimates and may contain errors.

Scenes (table abbreviations in parentheses):

- British Museum (BM)

- Florence Cathedral Side (FCS)

- Lincoln Memorial Statue (LMS)

- London Bridge (LB)

- Milan Cathedral (MC)

- Mount Rushmore (MR)

- Piazza San Marco (PSM)

- Sagrada Familia (SF)

- Saint Paul's Cathedral (SPC)
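The color coding in the match visualizations can be written as a small function. This is a sketch of the rule as stated in the caption, assuming pure RGB values for the named colors and a linear blend between green and yellow; the actual rendering code, color values, and interpolation are not given on this page, and `match_color` is a hypothetical name.

```python
from typing import Optional, Tuple

RGB = Tuple[int, int, int]

# Colors named in the caption; the exact RGB values are assumptions.
GREEN: RGB = (0, 255, 0)
YELLOW: RGB = (255, 255, 0)
RED: RGB = (255, 0, 0)
BLUE: RGB = (0, 0, 255)


def _lerp(a: RGB, b: RGB, t: float) -> RGB:
    """Linear interpolation between two RGB colors, with t in [0, 1]."""
    r, g, bl = (round(x + t * (y - x)) for x, y in zip(a, b))
    return (r, g, bl)


def match_color(error_px: Optional[float], threshold_px: float = 5.0) -> RGB:
    """Hypothetical helper: color for one match given its error in pixels.

    error_px is None when no depth estimate is available for the match.
    """
    if error_px is None:
        return BLUE  # no depth: correctness cannot be checked
    if error_px > threshold_px:
        return RED  # above the threshold: treated as incorrect
    t = max(error_px, 0.0) / threshold_px  # 0 at 0 px, 1 at the threshold
    return _lerp(GREEN, YELLOW, t)


for err in (None, 0.0, 2.5, 5.0, 7.3):
    print(err, match_color(err))
```

Only the red channel changes between green and yellow, so the blend reduces to scaling red from 0 to 255 as the error approaches the threshold.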

Phototourism dataset / Multiview track

mAA at 10 degrees: N/A (±N/A over N/A run(s) / ±N/A over 9 scenes)

Rank (per category): N/A (of 86)

No results for this dataset.

Prague Parks dataset / Stereo track

mAA at 10 degrees: 0.72837 (±0.00000 over 1 run(s) / ±0.05122 over 3 scenes)

Rank (per category): 33 (of 87)

| Scene | Features | Matches (raw) | Matches (final) | Rep. @ 3 px | MS @ 3 px | mAA(5°) | mAA(10°) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Lizard | 2048.0 | 2048.0 | 124.1 | 0.074 (rank 3/87) | 0.016 (rank 1/87) | 0.51795 ±0.00000 (rank 40/87) | 0.65769 ±0.00000 (rank 40/87) |
| Pond | 2048.0 | 2048.0 | 86.4 | 0.117 (rank 1/87) | 0.118 (rank 1/87) | 0.69677 ±0.00000 (rank 1/87) | 0.77742 ±0.00000 (rank 2/87) |
| Tree | 2048.0 | 2048.0 | 86.5 | 0.118 (rank 1/87) | 0.093 (rank 1/87) | 0.68000 ±0.00000 (rank 27/87) | 0.75000 ±0.00000 (rank 41/87) |
| Avg | 2048.0 | 2048.0 | 99.0 | 0.103 (rank 1/87) | 0.076 (rank 1/87) | 0.63157 ±0.00000 (rank 18/87) | 0.72837 ±0.00000 (rank 33/87) |

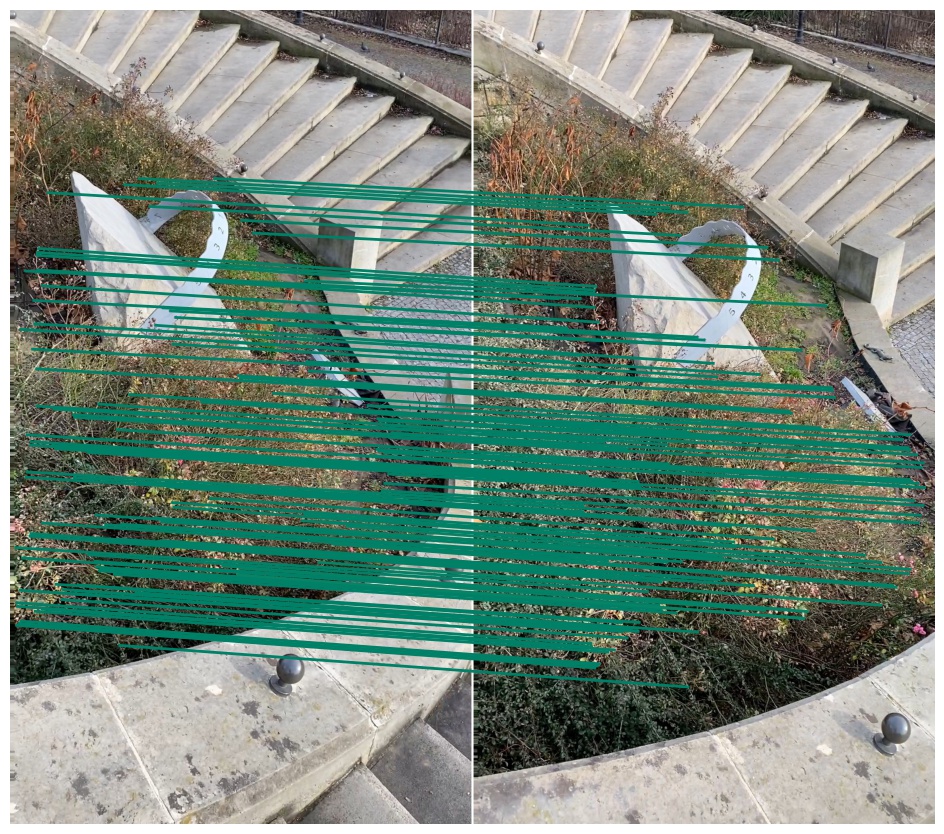

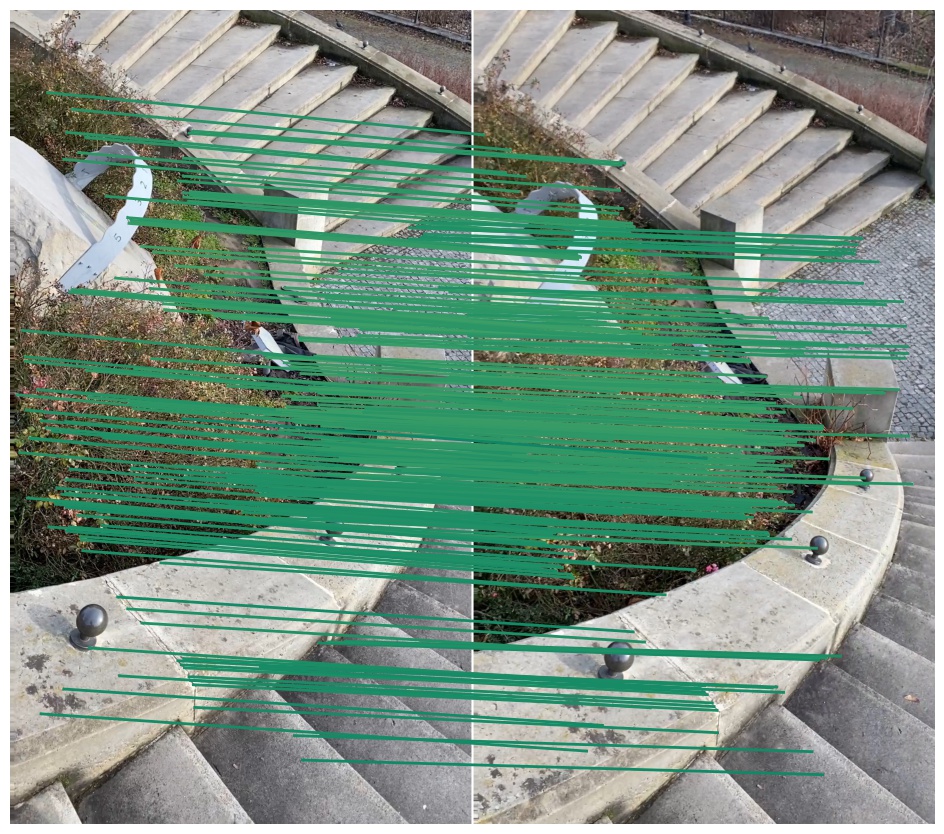

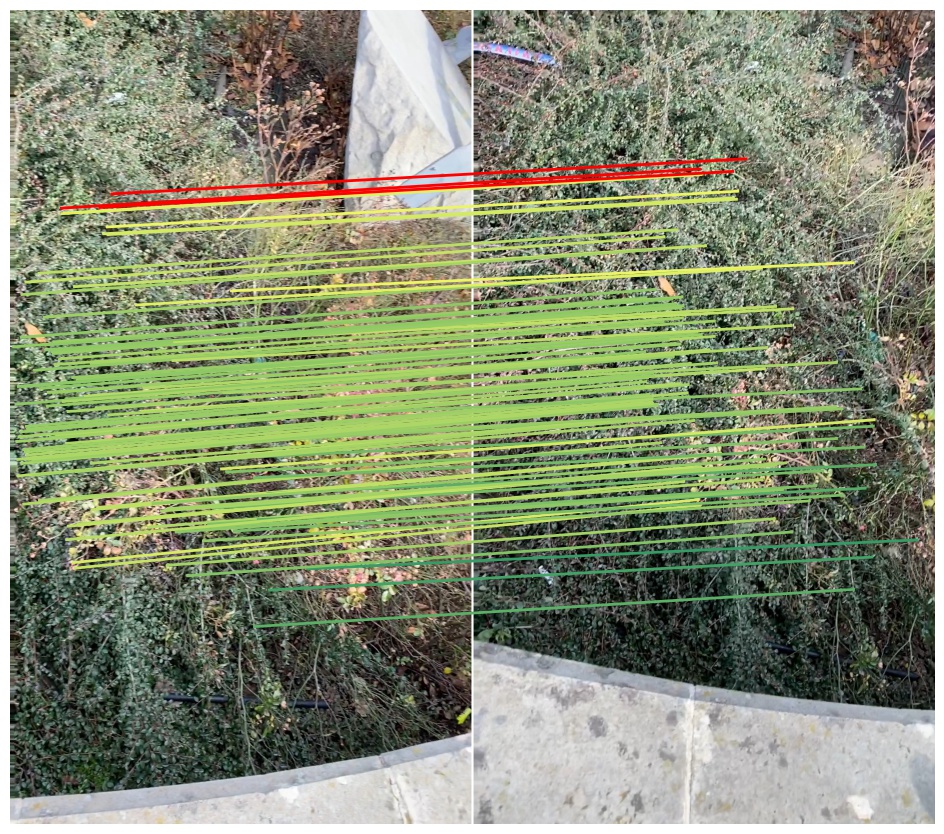

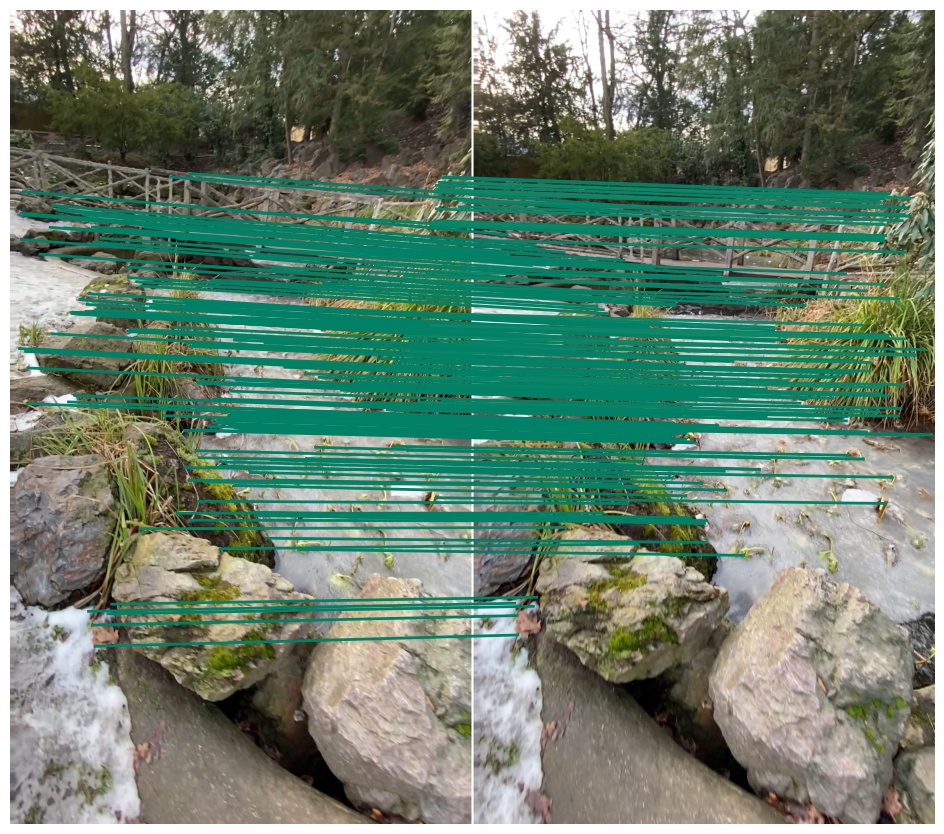

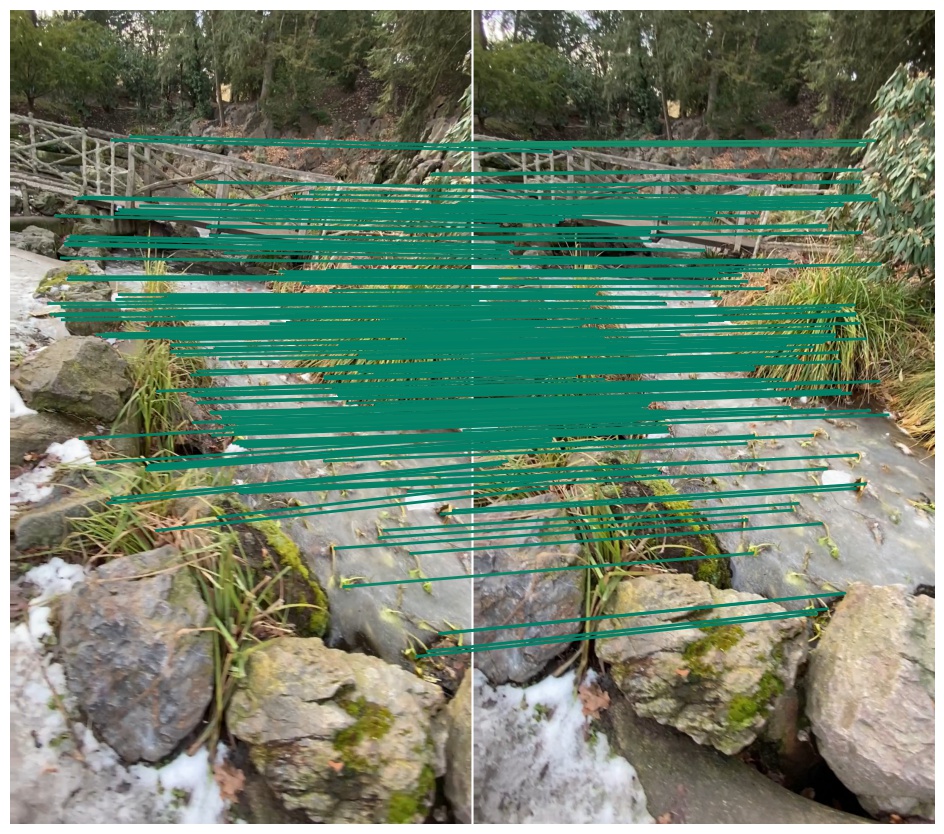

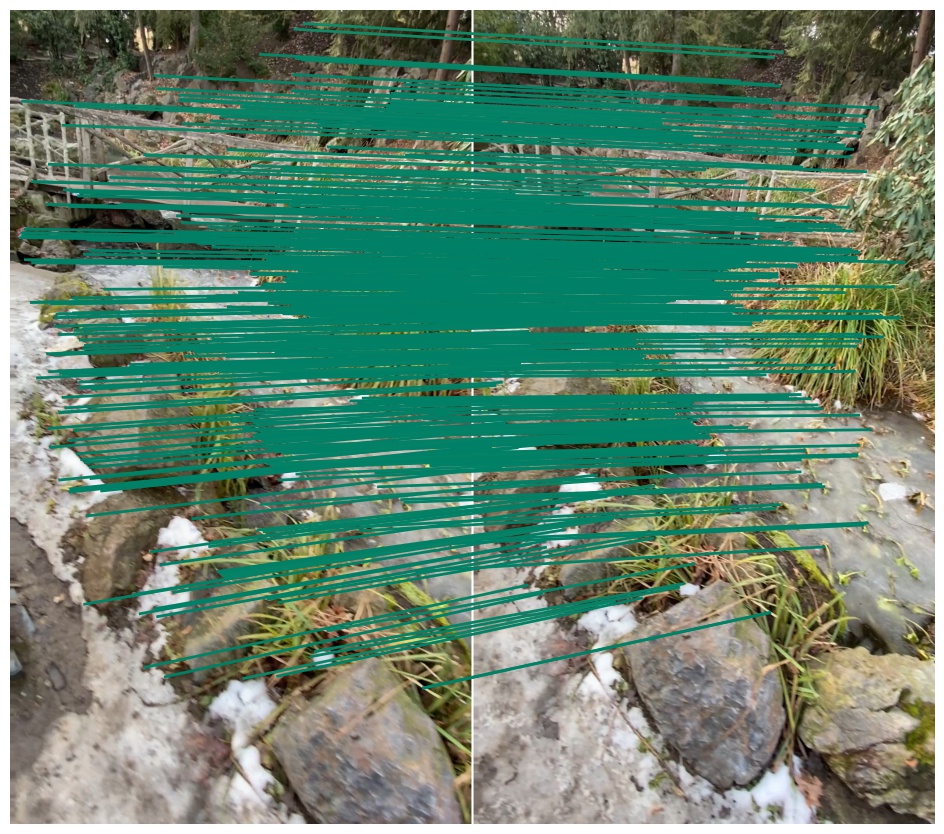

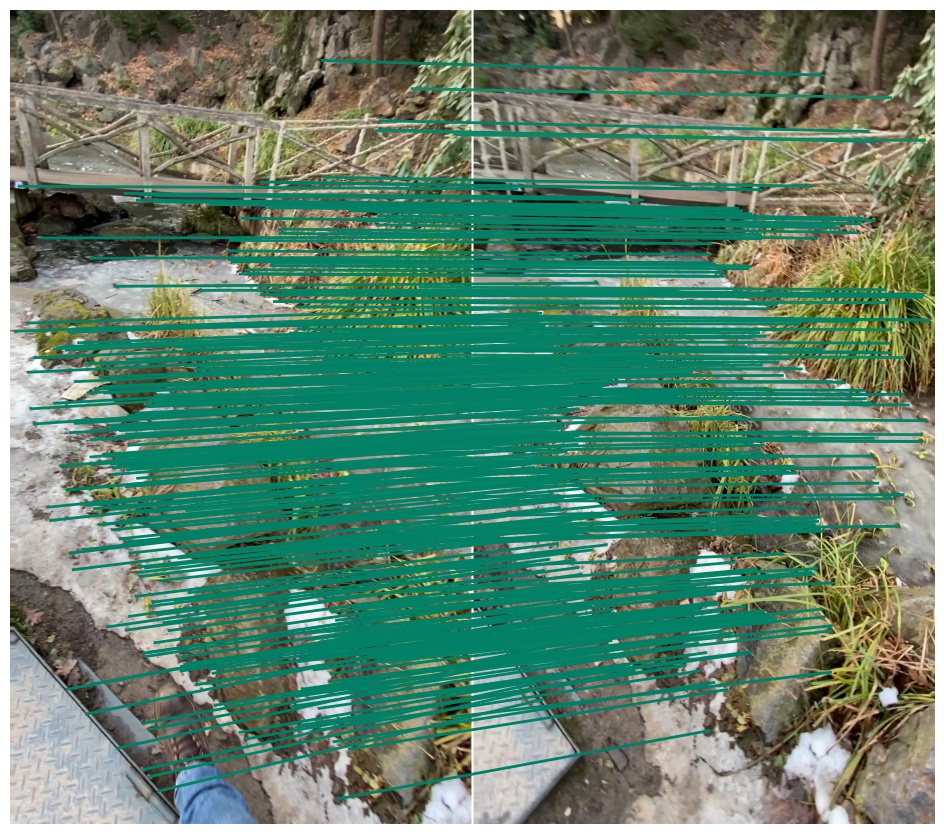

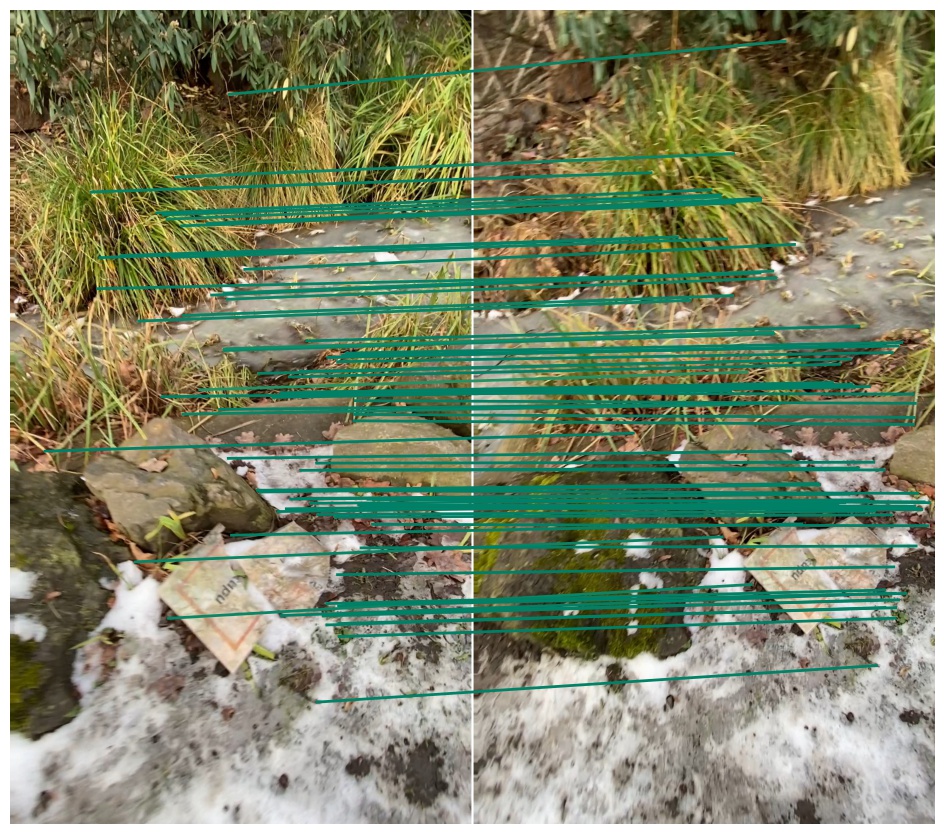

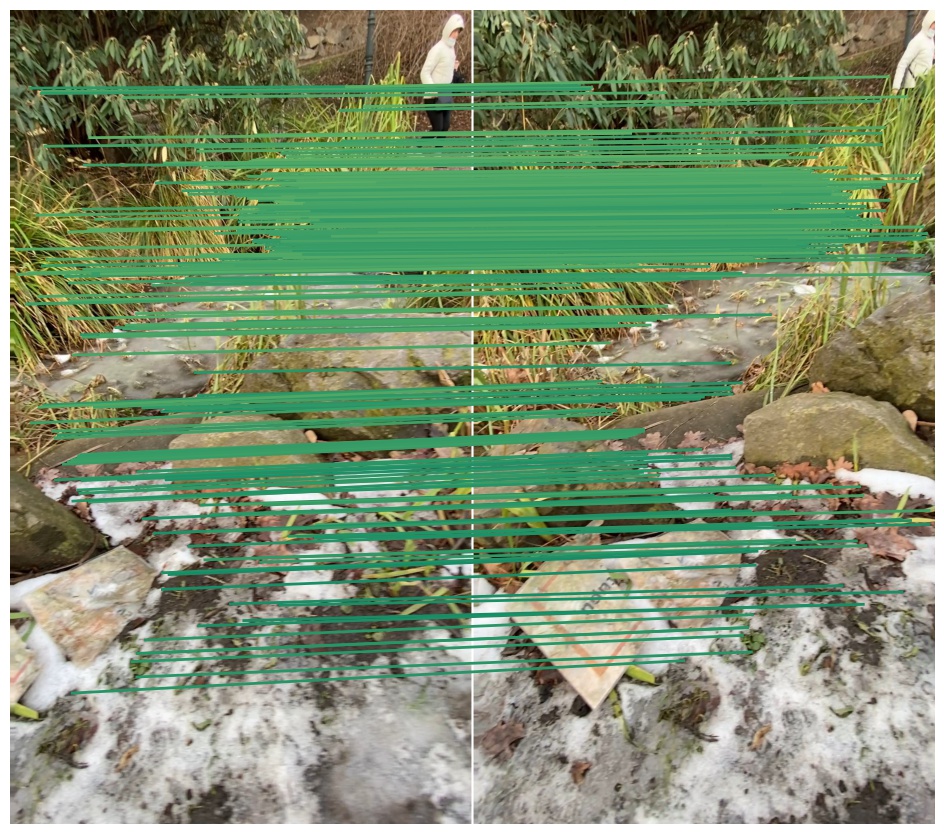

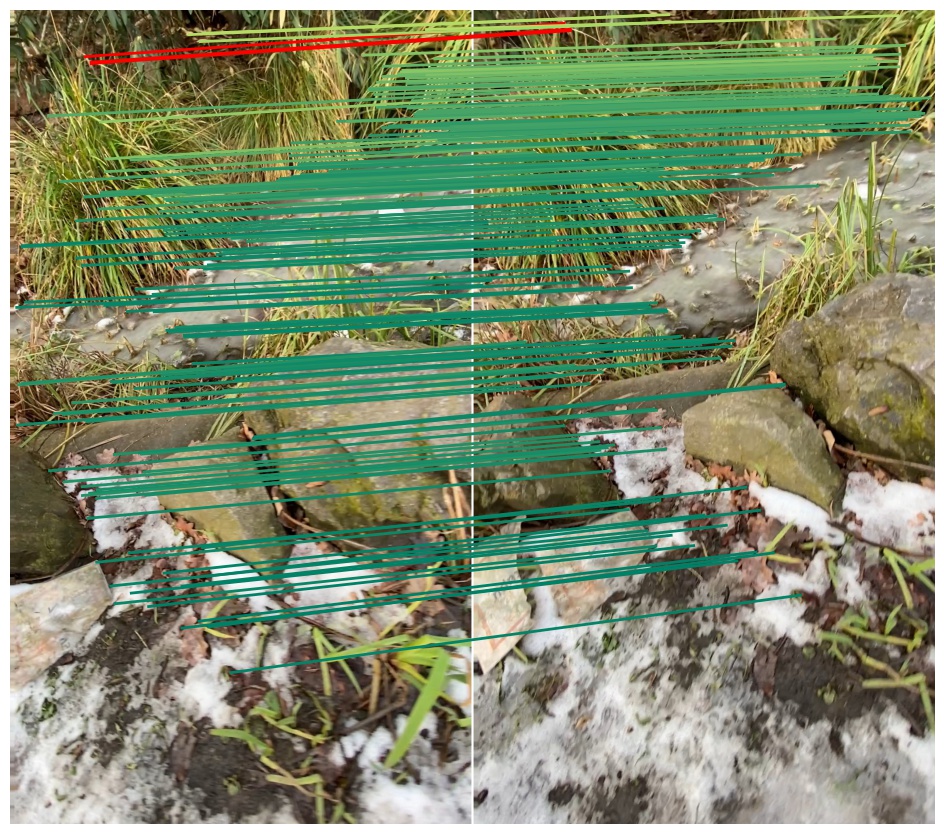

We show the inliers that survive the robust estimation loop (RANSAC or a variant), or the matches supplied with the submission if it uses custom matches, and use the depth estimates to determine whether they are correct. Matches with an error above the 5-pixel threshold are drawn in red; those below it are color-coded by error, from green (0 px) to yellow (5 px). Matches without depth estimates are drawn in blue. Note that the depth maps are themselves estimates and may contain errors.

Scenes:

- Lizard

- Pond

- Tree

Prague Parks dataset / Multiview track

mAA at 10 degrees: N/A (±N/A over N/A run(s) / ±N/A over 3 scenes)

Rank (per category): N/A (of 86)

No results for this dataset.

Google Urban dataset / Stereo track

mAA at 10 degrees: N/A (±N/A over N/A run(s) / ±N/A over 17 scenes)

Rank (per category): N/A (of 86)

No results for this dataset.

Google Urban dataset / Multiview track

mAA at 10 degrees: N/A (±N/A over N/A run(s) / ±N/A over 17 scenes)

Rank (per category): N/A (of 86)

No results for this dataset.