Difference: BikramAdhikariProjectIter3 (1 vs. 7)

Revision 7 2014-04-15 - TWikiGuest

Revision 6 2014-04-14 - BikramAdhikari

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

CPSC 543 Project - Third Iteration | ||||||||

| Line: 9 to 9 | ||||||||

Shared Control

Shared control involves a control signal generated by combining real-time signals from multiple agents in a system. In the context of our PWC, the PWC driver and an embedded controller form these two agents. For our study, we replace the embedded controller with a teleoperator. | ||||||||

| Changed: | ||||||||

| < < | Shared control have been used in applications involving remote operation such as surgery and pilot training systems which assume users to be trained professionals. Literature in shared control for lane assistance for passenger cars appear more closely related to our application as these systems also assume users to be novice drivers and the degrees of freedom of motion is also restricted to a plane. [2] describes three approaches to shared road departure prevention (RDP) for simulated emergency manoeuvre. They experimented haptic feedback (HF), drive by wire (DBW) and combination of HF and DBW with normal driving. In HF, given a likelihood of a road departure, the RDP applied an advisory steering torque such that the two agents would carry out the emergency manoeuvre cooperatively. In DBW, given a likelihood of road departure, the RDP adjusted the front-wheels angle to keep the vehicle on the road. In this mode, the users steering signal is completely overridden by the RDP. Their experiments on 30 participants in a vehicle simulator suggested that HF had no significant effect on the vehicle's path or the likelihood of road departure.The authors describe that, users perceived strong haptic feedback was authoritarian where as they generated more torque on the steering wheel against HF and overrode the RDP system if the HF was not strong. DBW and DBW+HF reduced the likelihood of departure. However the authors report degraded stimulus-response compatibility with DBW systems because when the DBW system took over control, the users would not steering wheel turning, which lead to confuse their internal perception of the vehicle. | |||||||

| > > | Shared control has been used in applications involving remote operation, such as surgery and pilot training systems. These applications assume users to be trained professionals. The literature on shared control for lane assistance in passenger cars appears more closely related to our application, as these systems also assume users to be novice drivers and the motion is likewise restricted to a plane. Katzourakis et al. [2] describe three approaches to shared road departure prevention (RDP) for a simulated emergency manoeuvre. They compared haptic feedback (HF), drive by wire (DBW) and a combination of HF and DBW against normal driving. In HF, given a likelihood of road departure, the RDP applied an advisory steering torque such that the two agents would carry out the emergency manoeuvre cooperatively. In DBW, given a likelihood of road departure, the RDP adjusted the front-wheel angle to keep the vehicle on the road. In this mode, the user's steering signal was completely overridden by the RDP. Their experiments with 30 participants in a vehicle simulator suggested that HF had no significant effect on the vehicle's path or the likelihood of road departure. The authors report that users perceived strong haptic feedback as authoritarian, whereas when the HF was not strong they generated more torque on the steering wheel against it and overrode the RDP system. DBW and DBW+HF reduced the likelihood of departure. However, the authors report degraded stimulus-response compatibility with DBW systems: when the DBW system took over control, the users would not perceive the steering wheel turning, which confused their internal perception of the vehicle. | |||||||

| Changed: | ||||||||

| < < | Taking inspiration from their work, our system uses an approach similar to DBW. Force-feedback haptic joysticks have been used in smart PWCs [3,4] but they are bulky, expensive and/or lack sufficient torque. Vibration feedback on seat [5] and steering wheel [6] have shown to have positive effect on performance,learning of a lane keeping task and reduction in reaction time and frontal collision. Without totally discarding haptic channel, our previous study used simple vibration actuator mounted below the joystick to inform user if the joystick signal is being modified or not. However, it was not suggestive for users regarding the direction they would have to move in case of an obstacle on their way. We propose to render shear force on palm of users driving hand as advisory direction guidance under these circumstances. | |||||||

| > > | Taking inspiration from their work, our system uses an approach similar to DBW. Force-feedback haptic joysticks have been used in smart PWCs [3,4], but they are bulky, expensive and/or lack sufficient torque. Vibration feedback on the seat [5] and the steering wheel [6] has been shown to have a positive effect on performance and learning of a lane-keeping task, and to reduce reaction time and frontal collisions. So as not to discard the haptic channel entirely, our previous study used a simple vibration actuator mounted below the joystick to inform the user whether the joystick signal was being modified. However, it did not suggest to users the direction in which they would have to move when an obstacle was in their way. We propose to render shear force on the palm of the user's driving hand as advisory direction guidance under these circumstances. | |||||||

Shared Control in PWC

The literature on smart PWC systems has been extensively covered in [7],[8]. We explore the systems that have been tested with cognitively and/or mobility impaired users. The systems in [1],[8] did not use the concept of shared control. They would either provide higher-level supervisory guidance with visual and audio cues or use switched control policies such that either the system or the user had complete control over the PWC. | ||||||||

Revision 5 2014-04-14 - TWikiGuest

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

CPSC 543 Project - Third Iteration | ||||||||

| Line: 54 to 54 | ||||||||

| Wizard of Oz interfaces have drawn interest in the research community as a tool to train novice elderly PWC users. Problems associated with unintuitive guidance (in the speed control policy) and switching discontinuity (in the direction control policy) make it difficult for novice elderly PWC users to comprehend PWC behavior. In this project, we intend to address both the discontinuity in the direction control policy and the lack of direction guidance in the speed control policy. We integrate speed control with steering guidance rendered as shear force on a custom-designed, low-cost joystick handle. This shear force rendering does not require a bulky, high-torque joystick; instead, we position the shear force actuator at a location that requires minimal torque. This form of shear force guidance has not been tested before on PWCs. We hypothesize that our proposed shared control strategy will keep the user in control at all times and provide assistance when the user is unclear about a suitable driving direction. | ||||||||

| Changed: | ||||||||

| < < | Our first and second design iterations focused on exploring the problem space and possible technical solution space respectively. We leave the discussion on findings from these iterations on our final report. In the next section, we describe an experiment we propose to test our joystick interface and the proposed control strategy. | |||||||

| > > | Our first and second design iterations focused on exploring the problem space and the possible technical solution space, respectively. We leave the discussion of findings from these iterations to our final report. In the next section, we describe the experiment we propose to test our shear force joystick interface and the proposed control strategy. | |||||||

Experimental Setup | ||||||||

| Deleted: | ||||||||

| < < | Divided attention | |||||||

| It is difficult to recruit a large number of wheelchair users from the target demographic, which makes it difficult to produce statistically significant results [11],[12]. As in [10] and [11], we will use able-bodied users to evaluate the performance of our system. Future work will include a case study with a user from the target population. | ||||||||

| Changed: | ||||||||

| < < | Egocentric ViewPowered wheelchairs are usually driven by joystick interface modified as per users needs. Our user study from previous iteration suggests that users prefer joystick that has larger surface area as it provides more sense of control and comfort. We use this opportunity to design an embedded navigation assistive physical user interface that would fit into the joysticks on these wheelchairs. We use egocentric view representation on a dome shaped physical interface as a visual sense of collision free direction. This visual guidance is supported by haptic rendering as a sub-additive sensory modal to stimulate user reaction. Similar work has been done in [1], where they use haptic display to block motion of joystick in certain direction, LED display around the joystick knob to indicate direction free from obstacles and an audio prompt to prompt user towards certain direction. Auditory percept requires user to process and execute the percept. It would be useful to provide a natural guidance that would direct the user towards a suitable trajectory. Here we extend to using shear force and vibratory haptic guidance instead of audio prompts with the hypothesis that it can provide a sense of natural guidance towards a safely projected trajectory.Visual DisplayWe use a RGB LED ring comprising of 24 serially controllable red, green and blue channels. We found this display suitable (see Fig [1]) for the size of the user interface we are considering to build. Figure [1] Egocentric LED display around wheelchair joystick

Our first attempt was to use egocentric LED display to point in the direction towards the which the intelligent system wants to guide. Figure [2] shows the brightest green as the direction of possible heading.

Figure [2] LED display showing green head in the direction away from obstacle

Another form of display would be suggestive of direction towards which the joystick could turn. We simulated this using trailing LEDs. This video | |||||||

| > > | For our experiment, we choose one scenario representative of the activities of daily living of our target population. The Power-Mobility Indoor Driving Assessment (PIDA) is an assessment tool designed to describe an individual's indoor mobility status. This assessment is conducted in the individual's own environment rather than on an isolated obstacle course. Of the thirty tasks specified in the PIDA, we are particularly interested in the back-in parking task, as it involves driving in all directions under limited visibility, with apparently complicated and confusing joystick motion. | |||||||

| Changed: | ||||||||

| < < | Haptic RenderingWe use a similar approach to haptic rendering as to that of visual display. We use a servo motor with an arrow like shaft on top to point in the direction of guidance. We mounted this motor into a hemispherical surface in such a way that the palm surface would be in contact with the pointer. This video | |||||||

| > > | We will test three shared control policies (speed control, direction control, and speed control with direction guidance) to determine which policy is most effective from the user's point of view and how each control policy affects quantitative measures such as completion time and trajectory smoothness. | |||||||

| Changed: | ||||||||

| < < | This method has its challenges mainly because the motor we are using is not strong enough to produce any torque when the palm solidly rests on it. Using a bigger motor would be another option which we will explore in the next iteration. | |||||||

| > > | Each control policy will be tested three times with three different randomized initial conditions. The order of the control policies will also be randomized. | |||||||
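The randomization described above could be generated along the following lines. This is only a sketch of one reading of the design: the policy order is shuffled, and within each policy the order of three initial conditions is shuffled. The names `POLICIES`, `INITIAL_CONDITIONS` and `make_schedule` are hypothetical and are not part of our experimental software.

```python
import random

# Hypothetical labels; the three policies are those named in the text.
POLICIES = [
    "speed control",
    "direction control",
    "speed control with direction guidance",
]
# Placeholder identifiers for three initial conditions (assumed, not specified in the text).
INITIAL_CONDITIONS = ["A", "B", "C"]


def make_schedule(seed=None):
    """Return a list of (policy, initial_condition) trials for one participant.

    Policies are presented in random order; each policy is run once per
    initial condition, with the condition order shuffled independently.
    """
    rng = random.Random(seed)
    policies = POLICIES[:]
    rng.shuffle(policies)
    schedule = []
    for policy in policies:
        conditions = INITIAL_CONDITIONS[:]
        rng.shuffle(conditions)
        schedule.extend((policy, condition) for condition in conditions)
    return schedule


if __name__ == "__main__":
    for trial_number, (policy, condition) in enumerate(make_schedule(seed=1), start=1):
        print(trial_number, policy, condition)
```

Passing a fixed `seed` per participant makes the schedule reproducible, which may help when analysing order effects later.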

| Deleted: | ||||||||

| < < | For now, we could possibly not bring the motor in touch with user's hand surface but generate a vibration either to represent position or direction.This video | |||||||

| Changed: | ||||||||

| < < | To make the vibration localized within a region, the vibration motor was made to touch the inner surface of the surrounding inner wall around the joystick. This transferred the vibration on to that surface. On removing some more material from the styrofoam on the sides, we were able to perceive change in vibration as the serve shaft turned around the joystick.This video | |||||||

| > > | Iteration #3 : A technical perspective | |||||||

| Changed: | ||||||||

| < < | As vibration was hard to localize, we removed vibration at the end of the servo motor shaft and replaced it with an eccentric wheel. This eccentric wheel protruded right enough outside the surface of the joystick to bring a sensation of guiding movement. This example video | |||||||

| > > | In this iteration, we planned to refine the haptic display using shear force. We also planned to integrate the visual and haptic displays into a single system. Our first version of the assembled visual and haptic display was presented during our meeting last week. We rendered a trajectory displayed on a Processing GUI onto the joystick handle through the Arduino's serial interface. The rendered steering guidance was perceivable and hence offered some proof of concept. Next, we would like to implement the … Since the Robot Operating System (ROS) also uses the serial interface to communicate with the Arduino, communication among Processing, the Arduino and ROS was not possible. As suggested during last week's meeting, we spent some time exploring shared memory as an interface between these three nodes, but did not succeed within the time frame. We therefore turned to identifying an experimental setup that would be achievable, and arrived at the setup described above. We are currently working on integrating the joystick handle with the PWC via ROS. We expect to have an experimental setup ready before the presentation. | |||||||

| Deleted: | ||||||||

| < < | Egocentric InputAs input, we also calibrated the circular potentiometer against LED display. This feature can be used to control the pan-tilt unit on which the camera of the wheelchair is mounted. In this video Figure [3]: Touch sensors (circular and point pressure type touch sensors)used in this project

ReflectionsIn this iteration, we focused on generating basic visual and tactile patterns showing target position and target heading. We used egocentric approach to representing the environment around the wheelchair. We demonstrated some of the patterns using a servo motor, a vibration motor and circular LED display. We achieved position and direction guidance like behaviour using LED display. Using a vibration motor was challenging to control and localize vibration. Our simple experiment showed some promise on displaying direction guidance using vibration feedback. We experimented with some simple shear force mechanisms to observe, if we can realize position and direction guidance. Our eccentric wheel based shear force display appears to be able to render both position and direction. We will explore this domain in our next iteration. The input section experimented in this iteration is merely an illustration of a working sensory system. We intend to use this sensory input to control the pan-tilt of the wheelchair camera so that the user can obtain vision based system's assistance. Right now, we have only used the circular touch sensor. This sensor could be used to turn the camera around by mapping sensor to the camera position (similar to mapping LED display with sensor position). We intend to incorporate controllability of tilt as well using combination of circular and point touch sensor with gestures such as swiping up and down to tilt the camera up and down.Next IterationIn next iteration, we plan to refine the haptic displays using shear force. We will integrate visual and haptic displays together into a single system. If time schedule aligns with my user interview, I will conduct a final interview with the user to get feedback on the designed interface. We also plan to integrate pan-tilt control using the touch interface. | |||||||

| Reference: | ||||||||

| Line: 141 to 107 | ||||||||

| [16] I. M. Mitchell, P. Viswanathan, B. Adhikari, E. Rothfels and A. K. Mackworth, “Shared control policies for safe wheelchair navigation of elderly adults with cognitive and mobility impairments: designing a Wizard of Oz study,” in American Control Conference, 2014. | ||||||||

| Added: | ||||||||

| > > | [17] D. R. Dawson, R. Chan, and E. Kaiserman, “Development of the power-mobility indoor driving assessment for residents of long term care facilities,” Canadian Journal of Occupational Therapy, vol. 61, no. 5, pp. 269–276, 1994. | |||||||

| -- BikramAdhikari - 24 Mar 2014 | ||||||||

Revision 4 2014-04-14 - TWikiGuest

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

CPSC 543 Project - Third Iteration | ||||||||

| Line: 52 to 52 | ||||||||

| Fig[3] Shared Control using direction control (left: occupancy map showing PWC trajectory; right: time series plot showing control signals) | ||||||||

| Changed: | ||||||||

| < < | Wizard of Oz interfaces have drawn interest in research community as a tool to train novice elderly PWC users. Problems associated with unintuitive guidance (in speed control policy) and switching discontinuity (in direction control) make it difficult for novice elderly PWC user to comprehend PWC behavior. In this project, we intend to bridge this discontinuity in direction control policy and lack of direction guidance in speed control policy. We integrate, speed control with steering guidance using shear force on a custom designed low cost joystick handle. This form of guidance has not been tested before. We hypothesize that, our proposed shared control strategy will keep the user in control at all times and provide assistance when the user is unclear about suitable driving direction. | |||||||

| > > | Wizard of Oz interfaces have drawn interest in the research community as a tool to train novice elderly PWC users. Problems associated with unintuitive guidance (in the speed control policy) and switching discontinuity (in the direction control policy) make it difficult for novice elderly PWC users to comprehend PWC behavior. In this project, we intend to address both the discontinuity in the direction control policy and the lack of direction guidance in the speed control policy. We integrate speed control with steering guidance rendered as shear force on a custom-designed, low-cost joystick handle. This shear force rendering does not require a bulky, high-torque joystick; instead, we position the shear force actuator at a location that requires minimal torque. This form of shear force guidance has not been tested before on PWCs. We hypothesize that our proposed shared control strategy will keep the user in control at all times and provide assistance when the user is unclear about a suitable driving direction. | |||||||

| Changed: | ||||||||

| < < | Our first and second design iterations focused on exploring the problem space and possible technical solution space. We leave the discussion on findings from the iteration for final report. In the next section, we describe an experiment we propose to conduct to test the | |||||||

| > > | Our first and second design iterations focused on exploring the problem space and the possible technical solution space, respectively. We leave the discussion of findings from these iterations to our final report. In the next section, we describe the experiment we propose to test our joystick interface and the proposed control strategy. | |||||||

Experimental Setup | ||||||||

Revision 3 2014-04-14 - TWikiGuest

| Line: 1 to 1 | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

CPSC 543 Project - Third Iteration | |||||||||||

| Line: 16 to 16 | |||||||||||

Shared Control in PWC

The literature on smart PWC systems has been extensively covered in [7],[8]. We explore the systems that have been tested with cognitively and/or mobility impaired users. The systems in [1],[8] did not use the concept of shared control. They would either provide higher-level supervisory guidance with visual and audio cues or use switched control policies such that either the system or the user had complete control over the PWC. | |||||||||||

| Changed: | |||||||||||

| < < | Collaborative wheelchair assistant (CWA) [9],[10] modified users input by computing motion perpendicular to the desired path. Perpendicular motion away from the desired path increased the elastic path controllers output and force the PWC to return to the path. The amount of guidance was determined by varying a parameter that controlled the elastic path controllers gain. Collaborative wheelchair control (CWC) [3] used smoothness, directness and safety measures to compute local efficiencies of human and robot control signals. The system blended the control signals based on current and past average relative efficiencies. | ||||||||||

| > > | The collaborative wheelchair assistant (CWA) [9],[10] modified the user's input by computing motion perpendicular to the desired path. Perpendicular motion away from the desired path increased the elastic path controller's output and forced the PWC to return to the path. The amount of guidance was determined by varying a parameter that controlled the elastic path controller's gain. Urdiales et al. [3] used smoothness, directness and safety measures to compute local efficiencies of the human and robot control signals. The system blended the control signals based on current and past average relative efficiencies. Li et al. [14] used safety, comfort and obedience measures (similar to [3]) to blend the user's and the autonomous controller's signals, along with an online optimization procedure to maximize the minimum of these measures. Their experiment with able-bodied users and cognitively intact, mobility-impaired older adults showed that the system improved the smoothness of the wheelchair trajectory and reduced the likelihood of collision. Carlson and Demiris [13] implemented a shared control scheme by first identifying the user's intended destination based on the joystick heading and the autonomous system's trajectory. Second, they implemented an obstacle avoidance algorithm to find a traversable direction close to the user's input. Their experiment with able-bodied users and an experienced end user with mobility impairment showed improved user safety at a small additional time cost. It also allowed users to perform a secondary task by decreasing cognitive workload, visual attention and manual dexterity demands. | ||||||||||
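To make the idea of blending by relative efficiency concrete, the following is a deliberately simplified sketch. It is not the formulation used in [3] or [14]: it assumes each agent's command is a 2D vector and that a single scalar efficiency in [0, 1] has already been computed for each agent, whereas the cited systems derive efficiencies from several measures and also use past averages. The function name `blend_commands` and its parameters are hypothetical.

```python
from typing import Tuple

Command = Tuple[float, float]  # (x, y) joystick-style command, assumed representation


def blend_commands(human: Command, robot: Command,
                   human_eff: float, robot_eff: float) -> Command:
    """Blend two commands with weights proportional to each agent's efficiency.

    Simplifying assumption: one scalar efficiency per agent. If both
    efficiencies are zero, fall back to an equal-weight average.
    """
    total = human_eff + robot_eff
    if total <= 0.0:
        w_human = 0.5
    else:
        w_human = human_eff / total
    w_robot = 1.0 - w_human
    return (
        w_human * human[0] + w_robot * robot[0],
        w_human * human[1] + w_robot * robot[1],
    )


if __name__ == "__main__":
    # The human pushes right, the robot suggests forward; the robot is rated more efficient.
    print(blend_commands((1.0, 0.0), (0.0, 1.0), human_eff=0.25, robot_eff=0.75))
```

With this kind of weighting neither agent is ever switched out abruptly; the influence of each command changes continuously as the efficiencies change.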

| Added: | |||||||||||

| > > | In [15] and [16] we describe our recent user study of shared control policies in PWCs with cognitively impaired older adults. We experimented with three shared control policies. Speed Control: In this policy, the wizard restricts the maximum magnitude of the control signal based on the proximity of nearby obstacles. Direction Control: In this policy, the wizard takes control of the steering if the PWC comes within a threshold distance of an obstacle, steers the PWC to the nearest free space and releases control back to the user. The user has control of the magnitude of the speed at all times. Autonomous Driving: In this policy, the wizard takes full control of the PWC to perform a task. In the following section, we describe an example scenario showing the first two control policies in action from our previous work in [16]. We highlight the limitations of these control policies and describe our proposed intermediate control policy. | ||||||||||
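The first two policies can be illustrated with a small sketch. In the study these decisions were made by a human wizard, so the code below is only an automated stand-in for illustration. The distance thresholds, the linear speed ramp, and the names `speed_control` and `direction_control` are assumptions, not values or functions from [15] or [16].

```python
def speed_control(user_mag, user_theta, obstacle_dist,
                  stop_dist=0.3, slow_dist=1.5):
    """Cap the command magnitude by obstacle proximity; steering stays with the user.

    stop_dist and slow_dist (metres) are hypothetical thresholds: the speed
    limit ramps linearly from 0 at stop_dist to 1 at slow_dist.
    """
    if obstacle_dist <= stop_dist:
        limit = 0.0
    elif obstacle_dist >= slow_dist:
        limit = 1.0
    else:
        limit = (obstacle_dist - stop_dist) / (slow_dist - stop_dist)
    return min(user_mag, limit), user_theta


def direction_control(user_mag, user_theta, obstacle_dist, free_theta,
                      threshold=0.5):
    """Override steering toward free space near an obstacle; user keeps the speed.

    free_theta is the heading of the nearest free space, assumed to be
    supplied by the wizard (or a planner); threshold is hypothetical.
    """
    if obstacle_dist < threshold:
        return user_mag, free_theta
    return user_mag, user_theta


if __name__ == "__main__":
    # Close to an obstacle: speed control stops the chair but gives no steering hint,
    # while direction control replaces the user's heading outright.
    print(speed_control(0.8, 10.0, obstacle_dist=0.2))
    print(direction_control(0.8, 10.0, obstacle_dist=0.2, free_theta=-45.0))
```

The two printed cases correspond to the limitations discussed in this section: a zero speed limit with no direction guidance, and an abrupt change of heading when steering is overridden.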

Our contribution | |||||||||||

| Added: | |||||||||||

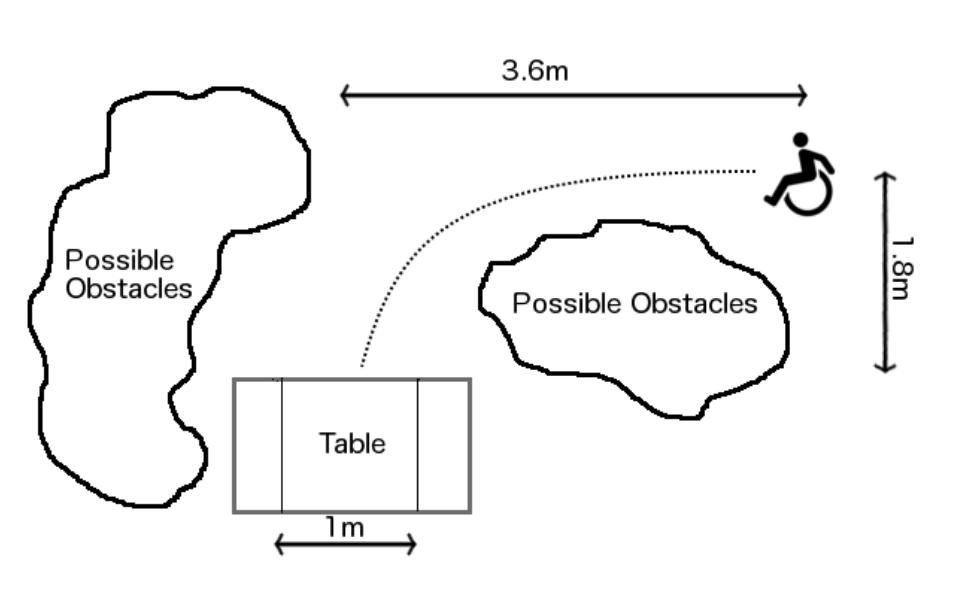

| > > |  Fig[1] An example scenario of parking at a table task

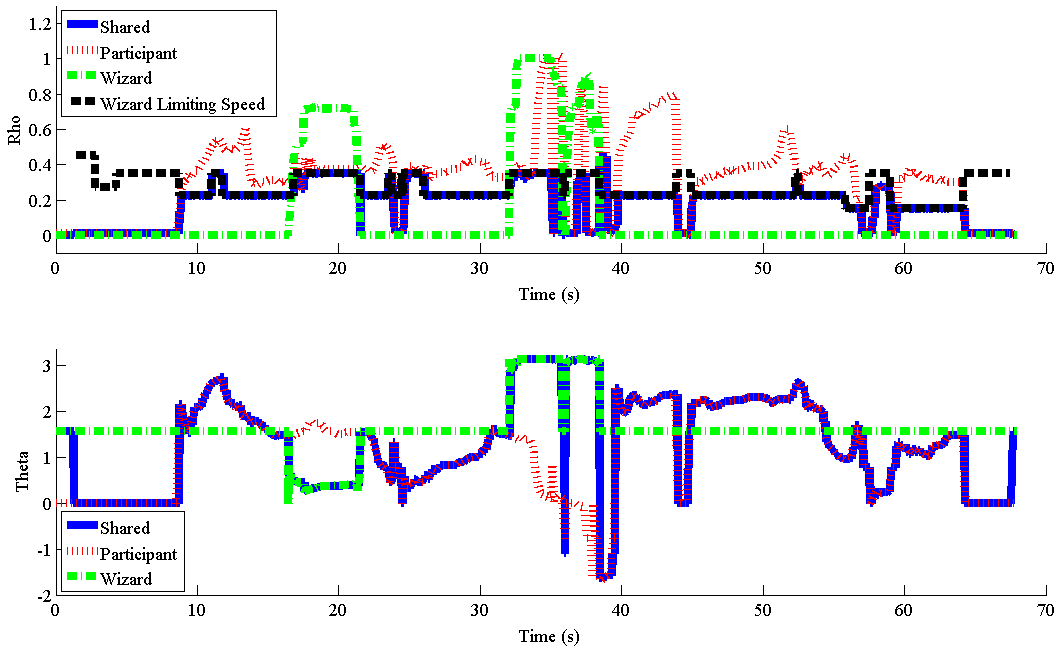

Given a parking task at a table, the PWC user is required to manoeuvre the PWC to park at the table while avoiding possible obstacles on the way (see Fig. 1). The following plots show the participant, wizard and effective shared control signals. The values plotted are the polar magnitude and angle of the joystick, which map to wheelchair motion. The top plot shows the magnitude of the joystick position in polar coordinates, which represents the speed of the PWC, and the bottom plot shows the direction (theta) of the joystick, which represents the steering of the PWC.
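For reference, the polar representation used in these plots can be obtained from raw joystick deflection roughly as follows. This is a sketch under assumed conventions: deflections are normalized to [-1, 1], theta is measured counter-clockwise from the positive x axis in degrees, and the magnitude is clipped to 1. The actual axis conventions of our logging code may differ, and `joystick_to_polar` is a hypothetical name.

```python
import math
from typing import Tuple


def joystick_to_polar(x: float, y: float) -> Tuple[float, float]:
    """Convert joystick deflection (x, y) to (magnitude, theta_degrees).

    Assumptions: x and y are normalized deflections; theta is the angle of
    the deflection measured counter-clockwise from the +x axis.
    """
    magnitude = min(math.hypot(x, y), 1.0)  # clip to the unit circle
    theta = math.degrees(math.atan2(y, x))
    return magnitude, theta


if __name__ == "__main__":
    # A half deflection along the +y axis.
    print(joystick_to_polar(0.0, 0.5))
```

In the plots, the magnitude corresponds to the commanded speed and theta to the commanded steering direction.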

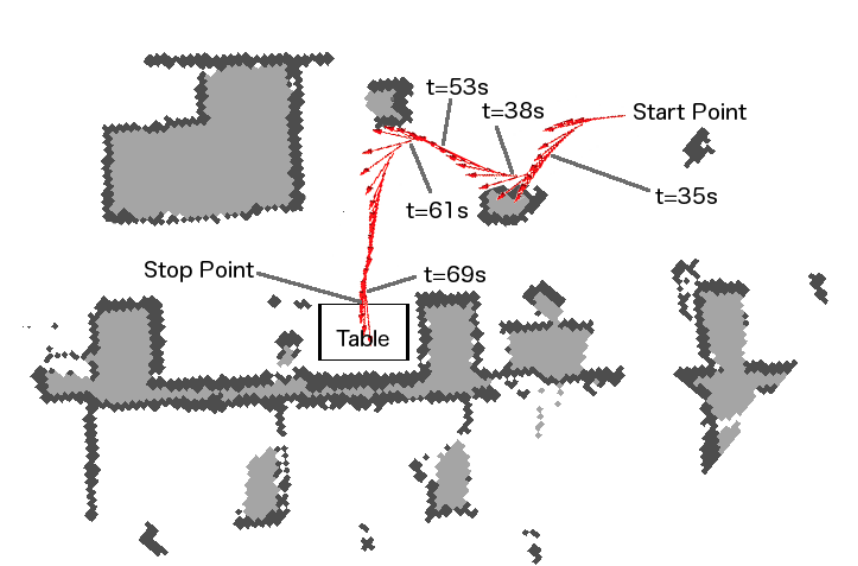

In the speed control policy (see Fig. 2), we observe that the steering (theta) from the user matches the shared control steering as long as the wizard's limiting speed is non-zero. When the wizard's limiting speed was zero (e.g. 40 s to 45 s), the shared steering does not follow the participant's steering. Under these conditions, the user experienced a lack of guidance from the system towards suitable obstacle-free navigation.

Fig[2] Shared Control using speed control (left: occupancy map showing PWC trajectory; right: time series plot showing control signals)

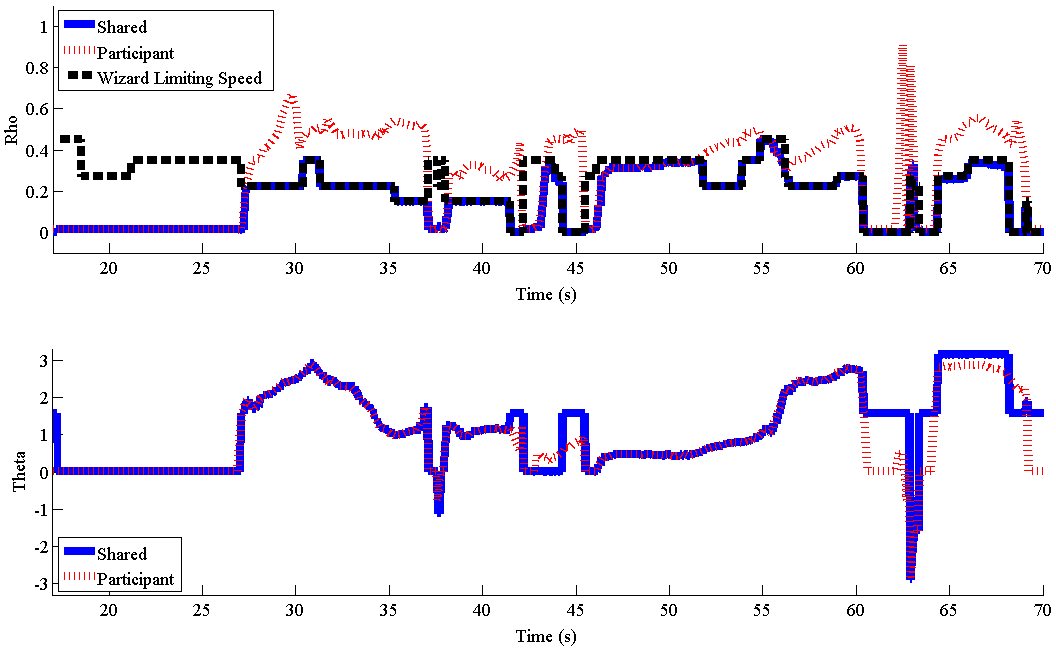

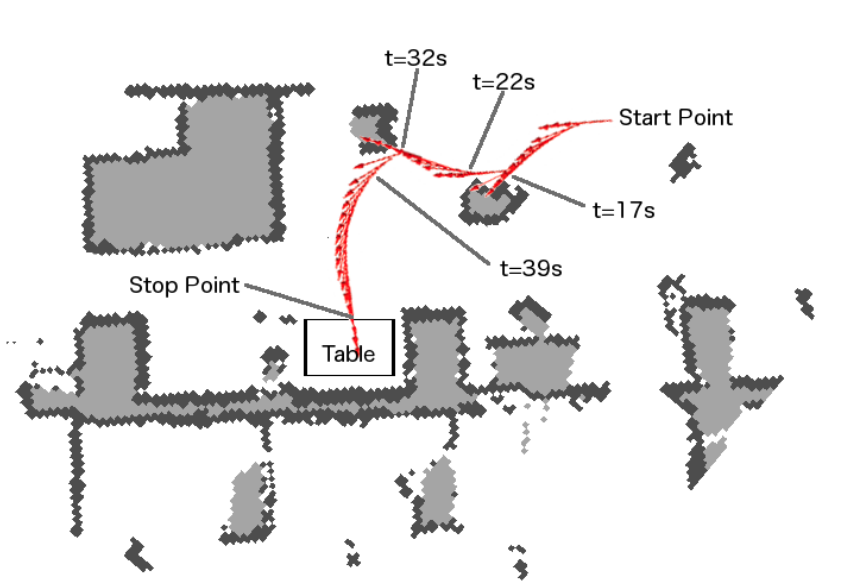

In the direction control policy (see Fig. 3), we observe that the steering (theta) from the user follows the shared-control trajectory when the teleoperator is not overriding the user's joystick. During an override, the user's joystick actually points in the reverse direction of the shared steering of the PWC (e.g. 15 s to 22 s and 30 s to 40 s).

During the transition of steering control from the PWC user to the teleoperator and vice versa, we observed a discontinuity similar to the one described in [2]. This discontinuity left users confused about what to expect from the joystick.

Fig[3] Shared Control using direction control (left: occupancy map showing PWC trajectory; right: time series plot showing control signals)

Wizard of Oz interfaces have drawn interest in research community as a tool to train novice elderly PWC users. Problems associated with unintuitive guidance (in speed control policy) and switching discontinuity (in direction control) make it difficult for novice elderly PWC user to comprehend PWC behavior. In this project, we intend to bridge this discontinuity in direction control policy and lack of direction guidance in speed control policy. We integrate, speed control with steering guidance using shear force on a custom designed low cost joystick handle. This form of guidance has not been tested before. We hypothesize that, our proposed shared control strategy will keep the user in control at all times and provide assistance when the user is unclear about suitable driving direction.

Our first and second design iterations focused on exploring the problem space and possible technical solution space. We leave the discussion on findings from the iteration for final report. In the next section, we describe an experiment we propose to conduct to test the | ||||||||||

Experimental SetupDivided attention | |||||||||||

| Line: 98 to 133 | |||||||||||

| [12] H. A. Yanco, “Shared user-computer control of a robotic wheelchair system,” Ph.D. dissertation, MIT, Cambridge, MA, 2000. | |||||||||||

| Added: | |||||||||||

| > > | [13] T. Carlson and Y. Demiris, “Collaborative control for a robotic wheelchair: evaluation of performance, attention, and workload,” IEEE Trans. Systems, Man, and Cybernetics, Part B: Cybernetics, vol. 42, no. 3, pp. 876–888, 2012. [14] Q. Li, W. Chen, and J. Wang, “Dynamic shared control for human-wheelchair cooperation,” in Proc. IEEE Int. Conf. on Robotics and Automation (ICRA), 2011, pp. 4278–4283. [15] P. Viswanathan, R. Wang, and A. Mihailidis, “Wizard-of-Oz and mixed-methods studies to inform intelligent wheelchair design for older adults with dementia,” in Association for the Advancement of Assistive Technology in Europe, 2013. [16] I. M. Mitchell, P. Viswanathan, B. Adhikari, E. Rothfels and A. K. Mackworth, “Shared control policies for safe wheelchair navigation of elderly adults with cognitive and mobility impairments: designing a Wizard of Oz study,” in American Control Conference, 2014. | ||||||||||

| -- BikramAdhikari - 24 Mar 2014 | |||||||||||

| Added: | |||||||||||

| > > |

| ||||||||||

Revision 2 2014-04-14 - TWikiGuest

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

CPSC 543 Project - Third Iteration

In this iteration, we justify our shared control approach in the context of existing literature on shared control of passenger cars and on smart Powered Wheelchair (PWC) research in which shared control has been tested on users with cognitive and/or mobility impairments. We then describe our current progress on the experimental setup and experiment design. | ||||||||

| Deleted: | ||||||||

| < < | In this iteration, we use ideas about possible shapes and sizes of the joystick interface from the previous iteration. A circular egocentric view around the wheelchair joystick can be realized using a combination of visual percept and tactile sub-addition. We describe the exploratory technology implementation phase of our physical user interface design project in the following section. | |||||||

Shared Control | ||||||||

| Added: | ||||||||

| > > | Shared control involves a control signal generated by combining real-time signals from multiple agents in a system. In the context of our PWC, the two agents are the PWC driver and an embedded controller. For our study, we replace the embedded controller with a teleoperator. Shared control has been used in applications involving remote operation, such as surgery and pilot training systems, which assume that users are trained professionals. The literature on shared control for lane assistance in passenger cars appears more closely related to our application, as these systems also assume novice drivers and motion is likewise restricted to a plane. [2] describes three approaches to shared road departure prevention (RDP) for a simulated emergency manoeuvre. The authors compared haptic feedback (HF), drive by wire (DBW) and a combination of HF and DBW against normal driving. In HF, given a likelihood of road departure, the RDP applied an advisory steering torque so that the two agents would carry out the emergency manoeuvre cooperatively. In DBW, given a likelihood of road departure, the RDP adjusted the front-wheel angle to keep the vehicle on the road. In this mode, the user's steering signal is completely overridden by the RDP. Their experiments with 30 participants in a vehicle simulator suggested that HF had no significant effect on the vehicle's path or the likelihood of road departure. The authors report that users perceived strong haptic feedback as authoritarian, whereas when the HF was not strong they generated more torque on the steering wheel against it and overrode the RDP system. DBW and DBW+HF reduced the likelihood of departure. However, the authors report degraded stimulus-response compatibility with DBW systems: when the DBW system took over control, users did not perceive the steering wheel turning, which confused their internal perception of the vehicle.
Taking inspiration from their work, our system uses an approach similar to DBW. Force-feedback haptic joysticks have been used in smart PWCs [3,4], but they are bulky, expensive and/or lack sufficient torque. Vibration feedback on the seat [5] and steering wheel [6] has been shown to have a positive effect on performance and learning of a lane-keeping task and on reducing reaction time and frontal collisions. So as not to discard the haptic channel entirely, our previous study used a simple vibration actuator mounted below the joystick to inform the user whether the joystick signal was being modified. However, it did not indicate the direction in which users should move when an obstacle was in their way. We propose to render shear force on the palm of the user's driving hand as advisory direction guidance under these circumstances. | |||||||

Shared Control in PWC | ||||||||

| Added: | ||||||||

| > > | The literature on smart PWC systems has been covered extensively in [7],[8]. We examine the systems that have been tested with users who have cognitive and/or mobility impairments. The systems in [1],[8] did not use the concept of shared control. They either provided higher-level supervisory guidance with visual and audio cues or used switched control policies in which either the system or the user had complete control over the PWC. The collaborative wheelchair assistant (CWA) [9],[10] modified the user's input by computing motion perpendicular to the desired path. Perpendicular motion away from the desired path increased the elastic path controller's output and forced the PWC to return to the path. The amount of guidance was set by varying a parameter that controlled the elastic path controller's gain. Collaborative wheelchair control (CWC) [3] used smoothness, directness and safety measures to compute local efficiencies of the human and robot control signals. The system blended the control signals based on current and past average relative efficiencies. | |||||||
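The efficiency-based blending described for CWC can be illustrated with a minimal Python sketch. This is not the formulation from [3]: the function name `blend_commands`, the representation of a command as a (linear, angular) velocity pair, and the normalized weighted average are all assumptions made here for illustration only.

```python
def blend_commands(human_cmd, robot_cmd, human_eff, robot_eff):
    """Blend two (linear, angular) velocity commands.

    Each command is weighted by its efficiency score, assumed to lie in [0, 1].
    The normalized weighted average is an illustrative assumption, not the
    rule published for CWC.
    """
    total = human_eff + robot_eff
    if total <= 0.0:
        # Neither signal is judged efficient: command a stop.
        return (0.0, 0.0)
    w_human = human_eff / total
    w_robot = robot_eff / total
    return tuple(w_human * h + w_robot * r
                 for h, r in zip(human_cmd, robot_cmd))


if __name__ == "__main__":
    # Equal efficiencies give the midpoint of the two commands.
    print(blend_commands((1.0, 0.0), (0.0, 1.0), 0.5, 0.5))
```

With this weighting the user keeps full authority whenever the robot's efficiency is zero, and authority shifts gradually rather than switching abruptly between the two agents.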

Our contribution

Experimental Setup | ||||||||

| Added: | ||||||||

| > > | Divided attention

It is difficult to recruit a large number of wheelchair users from the target demographic, which makes it difficult to produce statistically significant results [11],[12]. As in [10] and [11], we will use able-bodied users to evaluate the performance of our system. Future work will include a case study with a user from the target population. | |||||||

Egocentric View

Powered wheelchairs are usually driven through a joystick interface modified to suit the user's needs. Our user study from the previous iteration suggests that users prefer a joystick with a larger surface area, as it provides a greater sense of control and comfort. We use this opportunity to design an embedded navigation-assistive physical user interface that would fit onto the joysticks of these wheelchairs. We use an egocentric view representation on a dome-shaped physical interface to give a visual sense of the collision-free direction. This visual guidance is supported by haptic rendering as a sub-additive sensory modality to stimulate user reaction. Similar work has been done in [1], which uses a haptic display to block motion of the joystick in certain directions, an LED display around the joystick knob to indicate directions free from obstacles, and an audio prompt to prompt the user towards a certain direction. An auditory percept requires the user to process and execute it. It would be useful to provide natural guidance that directs the user towards a suitable trajectory. Here we extend this work by using shear force and vibratory haptic guidance instead of audio prompts, with the hypothesis that it can provide a sense of natural guidance towards a safely projected trajectory. | ||||||||

| Line: 64 to 75 | ||||||||

| [1] Wang, R. H., Mihailidis, A., Dutta, T. and Fernie, G. R. (2011). Usability testing of multimodal feedback interface and simulated collision-avoidance power wheelchair for long-term-care home residents with cognitive impairments. Journal of Rehabilitation Research and Development, 48(6), 801-22. doi:10.1682/JRRD.2010.08.0147 | ||||||||

| Added: | ||||||||

| > > | [2] D. I. Katzourakis, J. C. F. de Winter, M. Alirezaei, M. Corno, and R. Happee, “Road-departure prevention in an emergency obstacle avoidance situation,” IEEE Trans. Systems, Man, and Cybernetics: Systems, vol. Early Access, 2013. [3] C. Urdiales, J. Peula, M. Fernandez-Carmona, C. Barrué, E. Pérez, Sánchez-Tato, J. del Toro, F. Galluppi, U. Cortés, R. Annichiaricco, C. Caltagirone, and F. Sandoval, “A new multi-criteria optimization strategy for shared control in wheelchair assisted navigation,” Autonomous Robots, vol. 30, no. 2, pp. 179–197, 2011. [4] E. B. Vander Poorten, E. Demeester, E. Reekmans, J. Philips, A. Huntemann, and J. De Schutter, “Powered wheelchair navigation assistance through kinematically correct environmental haptic feedback,” in Proc. IEEE Int. Conf. on Robotics and Automation (ICRA), 2012, pp. 3706–3712. [5] S. de Groot, J. C. F. de Winter, J. M. López García, M. Mulder, and P. A. Wieringa, “The effect of concurrent bandwidth feedback on learning the lane-keeping task in a driving simulator,” Human Factors, vol. 53, no. 1, pp. 50–62, 2011. [6] J. Chun, S. H. Han, G. Park, J. Seo, I. Lee, and S. Choi, “Evaluation of vibrotactile feedback for forward collision warning on the steering wheel and seatbelt,” Int. Journal of Industrial Ergonomics, vol. 42, no. 5, pp. 443–448, 2012. [7] R. C. Simpson, “Smart wheelchairs: A literature review,” Journal of Rehabilitation Research and Development, vol. 42, no. 4, pp. 423–438, 2005. [8] P. Viswanathan, J. J. Little, A. K. Mackworth, and A. Mihailidis, “Navigation and obstacle avoidance help (NOAH) for older adults with cognitive impairment: a pilot study,” in Proc. Int. ACM SIGACCESS Conf. on Computers and Accessibility (ASSETS), 2011, pp. 43–50. [9] Q. Zeng, C. L. Teo, B. Rebsamen, and E. Burdet, “A collaborative wheelchair system,” IEEE Trans. Neural Systems and Rehabilitation Engineering, vol. 16, no. 2, pp. 161–170, 2008. [10] Q. Zeng, E. Burdet, and C. L. Teo, “Evaluation of a collaborative wheelchair system in cerebral palsy and traumatic brain injury users,” Neurorehabilitation and Neural Repair, vol. 23, no. 5, pp. 494–504, 2009. [11] T. Carlson and Y. Demiris, “Collaborative control for a robotic wheelchair: evaluation of performance, attention, and workload,” IEEE Trans. Systems, Man, and Cybernetics, Part B: Cybernetics, vol. 42, no. 3, pp. 876–888, 2012. [12] H. A. Yanco, “Shared user-computer control of a robotic wheelchair system,” Ph.D. dissertation, MIT, Cambridge, MA, 2000. | |||||||

| -- BikramAdhikari - 24 Mar 2014 | ||||||||

Revision 1 2014-04-14 - TWikiGuest

| Line: 1 to 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

| Added: | ||||||||

| > > |

CPSC 543 Project - Third Iteration

In this iteration, we justify our shared control approach in the context of existing literature on shared control of passenger cars and on smart Powered Wheelchair (PWC) research in which shared control has been tested on users with cognitive and/or mobility impairments. We then describe our current progress on the experimental setup and experiment design.

In this iteration, we use ideas about possible shapes and sizes of the joystick interface from the previous iteration. A circular egocentric view around the wheelchair joystick can be realized using a combination of visual percept and tactile sub-addition. We describe the exploratory technology implementation phase of our physical user interface design project in the following section.

Shared Control

Shared Control in PWC

Our contribution

Experimental Setup

Egocentric View

Powered wheelchairs are usually driven through a joystick interface modified to suit the user's needs. Our user study from the previous iteration suggests that users prefer a joystick with a larger surface area, as it provides a greater sense of control and comfort. We use this opportunity to design an embedded navigation-assistive physical user interface that would fit onto the joysticks of these wheelchairs. We use an egocentric view representation on a dome-shaped physical interface to give a visual sense of the collision-free direction. This visual guidance is supported by haptic rendering as a sub-additive sensory modality to stimulate user reaction. Similar work has been done in [1], which uses a haptic display to block motion of the joystick in certain directions, an LED display around the joystick knob to indicate directions free from obstacles, and an audio prompt to prompt the user towards a certain direction. An auditory percept requires the user to process and execute it. It would be useful to provide natural guidance that directs the user towards a suitable trajectory. Here we extend this work by using shear force and vibratory haptic guidance instead of audio prompts, with the hypothesis that it can provide a sense of natural guidance towards a safely projected trajectory.

Visual Display

We use an RGB LED ring comprising 24 serially controllable red, green and blue channels. We found this display suitable (see Fig [1]) for the size of the user interface we are considering building.

Figure [1] Egocentric LED display around wheelchair joystick

Our first attempt was to use the egocentric LED display to point in the direction in which the intelligent system wants to guide the user. Figure [2] shows the brightest green as the direction of possible heading.
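The brightest-green heading pattern can be sketched in Python as follows. This is a hedged illustration rather than the code used in the project: it assumes the ring has 24 individually addressable LEDs, that LED 0 faces straight ahead, that indices increase with the heading angle, and that brightness fades linearly over a hypothetical `spread` of neighbouring LEDs.

```python
NUM_LEDS = 24  # assumed number of addressable LEDs on the ring


def heading_to_led(heading_deg, num_leds=NUM_LEDS):
    """Return the index of the LED nearest to the given heading.

    Assumes LED 0 sits at 0 degrees and indices increase with the angle.
    """
    step = 360.0 / num_leds
    return int(round((heading_deg % 360.0) / step)) % num_leds


def green_levels(heading_deg, num_leds=NUM_LEDS, spread=3, peak=255):
    """Green intensity (0..peak) for every LED on the ring.

    The LED at the heading gets the peak value; intensity falls off
    linearly and reaches zero `spread` LEDs away on either side.
    """
    head = heading_to_led(heading_deg, num_leds)
    levels = []
    for i in range(num_leds):
        d = abs(i - head)
        d = min(d, num_leds - d)  # circular distance around the ring
        levels.append(max(0, peak * (spread - d) // spread))
    return levels


if __name__ == "__main__":
    # Guidance towards 90 degrees lights LED 6 brightest.
    print(green_levels(90.0))
```

The resulting list would still need to be written to the LED hardware through whatever driver the ring uses, which is outside the scope of this sketch.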


Figure [2] LED display showing green head in the direction away from obstacle

Another form of display would suggest the direction towards which the joystick could turn. We simulated this using trailing LEDs. This video


Haptic Rendering

We use an approach to haptic rendering similar to that of the visual display. We use a servo motor with an arrow-like shaft on top to point in the direction of guidance. We mounted this motor in a hemispherical surface so that the palm would be in contact with the pointer. This video

Egocentric Input

As input, we also calibrated the circular potentiometer against the LED display. This feature can be used to control the pan-tilt unit on which the wheelchair's camera is mounted. In this video

Figure [3]: Touch sensors (circular and point-pressure types) used in this project
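The calibration of the circular potentiometer against the LED display, and its possible use for camera pan, can be sketched in Python under stated assumptions. The 10-bit reading range (`ADC_MAX`), the 24-LED ring, the pan limits of ±90 degrees and all function names are hypothetical and chosen only for illustration.

```python
ADC_MAX = 1023   # assumed 10-bit analog reading over one full turn
NUM_LEDS = 24    # assumed number of LEDs on the ring


def touch_to_angle(raw, adc_max=ADC_MAX):
    """Convert a raw sensor reading to an angle in [0, 360) degrees."""
    raw = min(max(raw, 0), adc_max)  # clamp out-of-range readings
    return 360.0 * raw / (adc_max + 1)


def angle_to_led(angle_deg, num_leds=NUM_LEDS):
    """Index of the LED whose sector contains the touched angle."""
    return int(angle_deg // (360.0 / num_leds)) % num_leds


def angle_to_pan(angle_deg, pan_min=-90.0, pan_max=90.0):
    """Map the touched angle to a pan command, clamped to the pan range.

    Angles are first wrapped to [-180, 180) so that touches left of
    centre give negative pan values.
    """
    signed = (angle_deg + 180.0) % 360.0 - 180.0
    return min(max(signed, pan_min), pan_max)


if __name__ == "__main__":
    angle = touch_to_angle(512)
    print(angle, angle_to_led(angle), angle_to_pan(angle))
```

Clamping the pan command means touches behind the user saturate at the pan limit instead of swinging the camera through its full travel.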


Reflections

In this iteration, we focused on generating basic visual and tactile patterns showing target position and target heading. We used an egocentric approach to represent the environment around the wheelchair. We demonstrated some of the patterns using a servo motor, a vibration motor and a circular LED display. We achieved position- and direction-guidance-like behaviour using the LED display. With the vibration motor, it was challenging to control and localize the vibration. Our simple experiment showed some promise for displaying direction guidance using vibration feedback. We experimented with some simple shear force mechanisms to see whether we can realize position and direction guidance. Our eccentric-wheel-based shear force display appears able to render both position and direction. We will explore this domain in our next iteration.

The input section explored in this iteration is merely an illustration of a working sensory system. We intend to use this sensory input to control the pan-tilt of the wheelchair camera so that the user can obtain assistance from the vision-based system. So far, we have only used the circular touch sensor. This sensor could be used to turn the camera by mapping the sensor position to the camera position (similar to mapping the LED display to the sensor position). We intend to add control of tilt as well, using a combination of the circular and point touch sensors with gestures such as swiping up and down to tilt the camera up and down.

Next Iteration

In the next iteration, we plan to refine the haptic displays using shear force. We will integrate the visual and haptic displays into a single system. If the schedule aligns with my user interview, I will conduct a final interview with the user to get feedback on the designed interface. We also plan to integrate pan-tilt control using the touch interface.

Reference:

[1] Wang, R. H., Mihailidis, A., Dutta, T. and Fernie, G. R. (2011). Usability testing of multimodal feedback interface and simulated collision-avoidance power wheelchair for long-term-care home residents with cognitive impairments. Journal of Rehabilitation Research and Development, 48(6), 801-22. doi:10.1682/JRRD.2010.08.0147

-- BikramAdhikari - 24 Mar 2014 | |||||||
